Hardware Recommendations for Generative AI

The hardware recommendations below for generative AI workstations come from our Puget Labs team. Check out their performance articles for more in-depth analysis of various workflows.

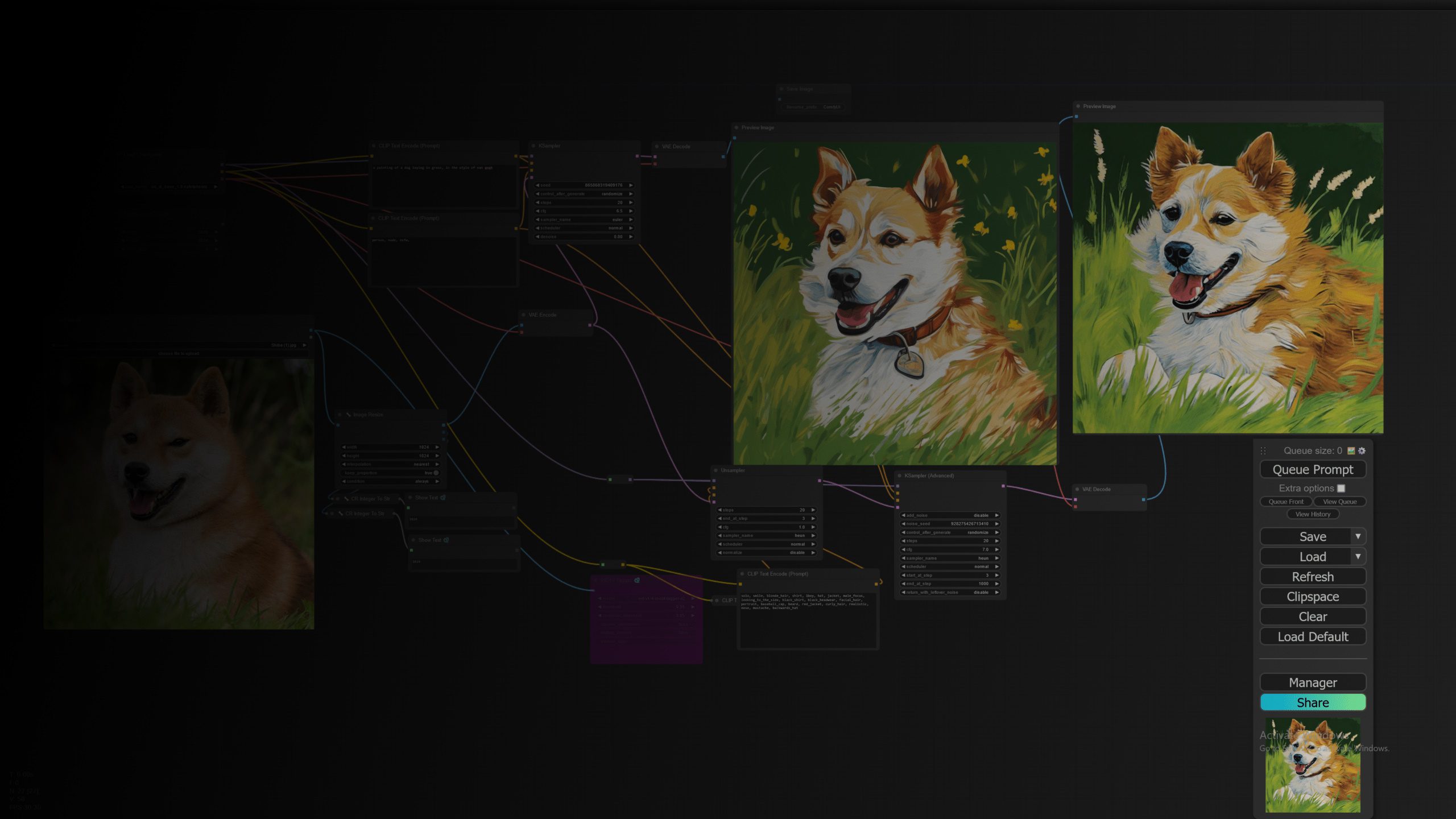

AI Image & Video Generation System Requirements


In general, the CPU will not play a large role in running generative models unless it is being used in place of the GPU, which is not recommended! However, when your workflow involves more than just running generative models, the CPU can have a large impact. For example, if part of your workflow involves data collection, manipulation, or pre-processing, the CPU may be a critical component in your pipeline. Additionally, the choice of CPU platform affects overall system size, memory capacity and bandwidth, PCI-Express lane count, and I/O connectivity.

Processor (CPU)

Data science involves a significant amount of effort spent moving and transforming large data sets. Because the CPU can access much larger amounts of memory than a GPU, it may dominate these parts of a workflow, in contrast to the GPU compute that dominates ML/DL training and inference. How well a task scales across multiple cores depends on the task, but data processing often parallelizes very well.

What CPU is best for creating AI-generated images and videos?

Our Puget Labs team has tested generative AI models across a wide range of recent CPUs, including the Intel Core i5-14600K, i7-14700K, and i9-14900K, Intel's Xeon w9-3495X, and AMD's Ryzen 7 7700X and Threadripper PRO 7985WX. The choice of processor had no impact on image generation speed. All of these CPUs are more than capable of supporting a modern video card, which is where the bulk of this work takes place. If you want to run multiple models at the same time, a platform with more PCI-Express lanes, such as Threadripper PRO or Xeon W, can better support the number of graphics cards required.
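To make the PCI-Express lane point concrete, here is a rough Python sketch that estimates how many GPUs a platform can run at a full x16 link. The lane counts used (20 CPU lanes for a desktop Intel Core 14th gen chip, 128 for Threadripper PRO 7000WX) and the four lanes held back for storage are assumptions for illustration; check the CPU and motherboard spec sheets for a real build, since chipset lanes and slot layouts vary.

```python
# Rough sketch: how many GPUs can a CPU platform feed at a full x16 link?
# All lane counts here are illustrative assumptions; confirm them against
# the CPU and motherboard documentation for any real build.

LANES_PER_GPU = 16  # assumption: each GPU gets a full x16 slot

# Assumed CPU-attached PCIe lane counts for two example platforms.
PLATFORM_LANES = {
    "Intel Core 14th gen (desktop)": 20,
    "AMD Threadripper PRO 7000WX": 128,
}


def max_full_speed_gpus(cpu_lanes: int, reserved_lanes: int = 4) -> int:
    """GPUs that fit at x16 after reserving some lanes (hypothetical default: 4 for NVMe)."""
    usable = max(cpu_lanes - reserved_lanes, 0)
    return usable // LANES_PER_GPU


for name, lanes in PLATFORM_LANES.items():
    print(f"{name}: up to {max_full_speed_gpus(lanes)} GPU(s) at x16")
```

Under these assumptions the desktop platform supports one GPU at x16 while the Threadripper PRO platform supports seven, which is why workstation platforms are the usual choice for multi-GPU systems.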

Do more CPU cores make generative AI workflows faster?

In a word: no. The heavy lifting of most AI applications is done on the graphics card (GPU) rather than the CPU.

Does generative AI work better with Intel or AMD CPUs?

For most consumer-level generative AI applications, the brand of CPU does not matter. However, software optimizations in niche applications may make them better suited to either Intel or AMD. Most users will know if their programs have such optimizations.

Video Card (GPU)

GPUs are the backbone of the vast majority of generative AI workloads, regardless of the type of output: image, video, voice, or text. Most projects are based around CUDA (NVIDIA), but plenty of projects support ROCm (AMD) as well.

What type of GPU (video card) is best for generative AI?

The most important factors when choosing a GPU for AI are:

- Total Memory (VRAM)
- Memory Bandwidth
  - Interface width (bits) × effective memory clock (data rate)
- Floating Point Calculations (FP16 is most relevant)
  - NVIDIA: Tensor Core count and Tensor Core generation (4th generation on Ada Lovelace cards such as the RTX 40 series)
  - AMD: Compute Unit count
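Peak memory bandwidth is commonly computed as the memory interface width in bits multiplied by the effective per-pin data rate, divided by 8 to convert bits to bytes. The minimal Python sketch below applies that formula; the bus widths and data rates for the two example cards are taken from published specifications and should be treated as assumptions to verify.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s from bus width (bits) and effective per-pin data rate (Gbps)."""
    # Total bits per second across the bus, divided by 8 to get bytes per second.
    return bus_width_bits * data_rate_gbps / 8


# Example figures (assumed from published spec sheets; verify before relying on them).
cards = {
    "GeForce RTX 4080 Super": (256, 23.0),
    "GeForce RTX 4090": (384, 21.0),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(width, rate):.0f} GB/s")
```

This gives roughly 736 GB/s for the RTX 4080 Super and 1008 GB/s for the RTX 4090, showing how a wider memory interface can outweigh a slightly slower data rate.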

What video cards are recommended for generative AI?

Our top recommendations at this time are NVIDIA’s GeForce RTX 4080 Super with 16GB of memory and the RTX 4090 with 24GB. If your projects need more memory, stepping up to the RTX 5000 Ada with 32GB or RTX 6000 Ada with 48GB is great, but adds a lot of cost.

How much VRAM (video memory) does generative AI need?

This depends on which models you are using. Our team charted typical requirements for a recent presentation at NAB.
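As a rough starting point, the memory needed just to hold a model's weights is its parameter count multiplied by the bytes per parameter for the chosen precision (2 bytes for FP16). The Python sketch below applies that rule to a hypothetical 3-billion-parameter model; it is only a lower bound, since activations, text encoders, VAEs, and other pipeline components need additional VRAM on top of the weights.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_vram_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory to hold model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical 3-billion-parameter model, used only for illustration.
params = 3e9
for precision in ("fp32", "fp16", "int8"):
    print(f"{precision}: ~{weights_vram_gb(params, precision):.1f} GB for weights alone")
```

Running weights in FP16 rather than FP32 halves this figure, which is one reason FP16 performance and support matter so much for generative AI.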

Will multiple GPUs improve generative AI performance?

Short answer: no. Long answer: multiple GPUs can be used to speed up batch image generation or to let multiple users access their own GPU resources on a centralized server. Four GPUs get you four images in the time it takes one GPU to generate one image, as long as nothing else in the system causes a bottleneck. However, four GPUs will not generate a single image four times faster than one GPU.
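The arithmetic behind this can be sketched in a few lines of Python. The model assumed here is simple data parallelism: whole images are split across GPUs, each GPU renders its share one at a time, and nothing else bottlenecks. The 10-second per-image time is a hypothetical figure.

```python
import math


def batch_time_seconds(num_images: int, num_gpus: int, seconds_per_image: float) -> float:
    """Wall-clock time when whole images are divided across GPUs (no other bottlenecks)."""
    # The GPU with the most images assigned sets the total time.
    images_per_gpu = math.ceil(num_images / num_gpus)
    return images_per_gpu * seconds_per_image


t = 10.0  # hypothetical seconds for one GPU to generate one image
print(batch_time_seconds(1, 1, t))  # one image, one GPU → 10.0
print(batch_time_seconds(1, 4, t))  # one image, four GPUs: no faster → 10.0
print(batch_time_seconds(4, 1, t))  # four images, one GPU → 40.0
print(batch_time_seconds(4, 4, t))  # four images, four GPUs → 10.0
```

Extra GPUs improve throughput (images per unit time), not the latency of any single image.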

Does generative AI run better on NVIDIA or AMD?

Right now, NVIDIA graphics cards have a general advantage over AMD. CUDA is more widely supported by generative AI software, and NVIDIA's cards generally offer more compute power.

Does generative AI need a “professional” video card?

No, unless you are working on models and outputs large enough to need more VRAM than consumer (GeForce / Radeon) video cards offer.

Do I need NVLink when using multiple GPUs for generative AI?

No – and in fact, very few modern NVIDIA graphics cards even support NVLink anymore.

Memory (RAM)

How much RAM does generative AI need?

System memory is not a major factor for performance here, but as a safe baseline we generally recommend at least twice the total VRAM in the system. If you will also be using the system for other applications, take their requirements into consideration as well!
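The rule of thumb can be written as a quick sizing helper in Python. Adding other applications' needs as a flat extra amount (`other_apps_gb`) is a simplifying assumption for illustration, not a measured requirement.

```python
def recommended_ram_gb(vram_per_gpu_gb: list[int], other_apps_gb: int = 0) -> int:
    """Rule of thumb: at least 2x total VRAM, plus an assumed allowance for other apps."""
    total_vram = sum(vram_per_gpu_gb)
    return 2 * total_vram + other_apps_gb


print(recommended_ram_gb([24]))              # one 24GB card → 48
print(recommended_ram_gb([24, 24]))          # two 24GB cards → 96
print(recommended_ram_gb([48], other_apps_gb=32))  # one 48GB card plus other apps → 128
```

In practice, round the result up to the nearest memory configuration your platform supports, such as 64GB instead of 48GB.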