Introduction

Topaz AI is a set of popular software tools that utilize AI and machine learning to enhance both images and video. On the photo and image side, Topaz offers Gigapixel AI to upscale images, Sharpen AI to sharpen images, and DeNoise AI to remove image noise. For videos, Topaz Video AI can do everything from upscaling, slow motion, deinterlacing, to reducing noise and generally improving video quality.

These applications are known for how exceptionally well they work, but they can be hardware intensive – requiring a powerful computer in order for them to complete in a reasonable amount of time. For example, even with the fastest workstation available today, Video AI may only be able to process a few frames per second of video footage. That can be fine if you are only using it occasionally, but if you use it for any significant amount of time, it can pay off to invest in hardware that will net you even a modest increase in performance.

To help people decide where to spend their budget when either upgrading or purchasing a new workstation for Topaz AI, we wanted to take a look at how different CPUs and GPUs perform. AI-based applications are often known for how much GPU power they require, but getting the right CPU can also make as much – or more – of a difference as the GPU.

Since this is the first time we are diving into Topaz AI, we are going to include fairly lengthy sections covering both our test methodology and information on the four hardware platforms and various GPUs we looked at. If you want to skip right to our testing results, feel free to do so!

Testing Methodology

Since the Topaz AI suite is something we have not published benchmark data for in the past, we wanted to detail how, and what, we are testing. For Gigapixel, DeNoise, and Sharpen AI, we started with a number of images from Shotkit’s RAW photo database. These photos come from a number of different cameras, with a variety of subjects and conditions.

Big thank you to Shotkit for providing a terrific resource for anyone to download and examine RAW photos from various cameras!

From there, we wrote an automation script to load each set of photos (grouped by RAW file type) into the application, made sure everything was left on the default/auto settings, and timed how long it took to process and export each batch of photos. If you wish to duplicate our testing, below is the list of photos we used, and the AI model that was selected automatically. The timing we recorded was from the time we hit “Save” to when the last image finished processing.

All tests were run in GPU mode on the primary GPU with graphics memory consumption set to “High”. You can also run each application in CPU mode, but that is rarely done and does not provide any benefit from what we could tell. There is also a multi-GPU option that can use the discrete GPU and the integrated GPU on the Core i9 13900K, but from our testing, it did not provide any sort of performance benefit – and was in fact sometimes slower.

| Photo from Shotkit | Gigapixel AI (4x Scale) | DeNoise AI | Sharpen AI |
|---|---|---|---|
| Canon-5DMarkII_Shotkit.CR2 | Standard | Low Light | Motion Blur – Very Noisy |
| Canon-5DMarkII_Shotkit-2.CR2 | Standard | Low Light | Standard |
| Canon-5DMarkII_Shotkit-4.CR2 | Standard | Low Light | Out of Focus – Very Noisy |
| Canon-6D-Shotkit-5.CR2 | Standard | Low Light | Out of Focus – Very Noisy |
| Canon-6D-Shotkit-8.CR2 | Standard | Low Light | Standard |
| Canon-6D-Shotkit-11.CR2 | Standard | Low Light | Standard |
| Nikon-D4-Shotkit-2.NEF | Standard | RAW | Motion Blur – Very Blurry |
| Nikon-D600-Shotkit-2.NEF | Standard | RAW | Standard |
| Nikon-D600-Shotkit-4.NEF | Standard | RAW | Out of Focus – Very Noisy |
| Nikon-D750-Shotkit-2.NEF | Standard | RAW | Standard |
| Nikon-D3500-Shotkit-4.NEF | Standard | RAW | Out of Focus – Very Noisy |
| Nikon-D3500-Shotkit-5.NEF | Standard | RAW | Standard |
| Sony-a7c-Shotkit.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Sony-a7c-Shotkit-3.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Sony-a6000-Shotkit-2.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Sony-RX1Mark2-Shotkit-2.ARW | Standard | RAW | Motion Blur – Very Blurry |
| Sony-RX100Mark6-Shotkit-2.ARW | Standard | RAW | Motion Blur – Very Noisy |
| Sony-RX100Mark6-Shotkit-4.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Fujifilm-X100F-Shotkit-5.RAF | Low Res | Low Light | Out of Focus – Very Noisy |
| Fujifilm-X100V-Shotkit-3.RAF | Standard | Low Light | Out of Focus – Very Noisy |
| Fujifilm-XH1-Shotkit.RAF | Standard | Low Light | Out of Focus – Very Noisy |
| Fujifilm-XH1-Shotkit-5.RAF | Standard | Low Light | Motion Blur – Normal |
| Fujifilm-GFX-50S-Shotkit.RAF | Standard | RAW | Motion Blur – Very Blurry |
| Fujifilm-GFX-50S-Shotkit-2.RAF | Standard | RAW | Out of Focus – Very Noisy |
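
For anyone reproducing the photo tests, the batch timing described above (from hitting “Save” to the last image finishing) can be turned into an images-per-minute figure with a few lines of Python. This is only a minimal sketch and not the automation script we used: `process_batch` is a hypothetical stand-in for whatever drives the application and blocks until the export is done, and the function names are our own for illustration.

```python
import time
from typing import Callable, Sequence


def images_per_minute(image_count: int, elapsed_seconds: float) -> float:
    """Convert a batch's wall-clock time into a throughput rate."""
    if image_count <= 0 or elapsed_seconds <= 0:
        raise ValueError("image_count and elapsed_seconds must be positive")
    return image_count * 60.0 / elapsed_seconds


def time_batch(process_batch: Callable[[Sequence[str]], None],
               photos: Sequence[str]) -> float:
    """Time one batch of photos and return images per minute.

    process_batch is a hypothetical callable (an assumption, not a Topaz API)
    that starts the export and returns only once the last image is finished.
    """
    start = time.perf_counter()
    process_batch(photos)
    elapsed = time.perf_counter() - start
    return images_per_minute(len(photos), elapsed)
```

Grouping the photos by RAW file type and calling `time_batch` once per group gives one rate per batch, which is the form the scoring further down expects.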

For Topaz Video AI, we used three different videos to test the following presets:

| Preset | Source Video | AI Model |
|---|---|---|
| 4x slow motion | H.264 4:2:0 8bit 1080p 59.94FPS | Chronos |
| Deinterlace footage and upscale to HD | AVI 4:2:2 8bit 720×470 29.97FPS Interlaced | Dione: DV 2x FPS |
| Upscale to 4K | H.264 4:2:0 8bit 1080p 59.94FPS | Proteus |

Since the photo-based applications do not provide easy-to-access per-image statistics (from an automation sense), we recorded performance as the number of images per minute that each application was able to process. We could have just as easily recorded the simple time in seconds to complete each batch, but recording a rate (where higher is better) allows us to take a geomean of all the results in conjunction with the Video AI FPS results in order to get an over-arching “Topaz AI Overall Score”.

Each application was given a score based on the simple geometric mean of each batch of tests (based on RAW format). The geometric mean of each of those was then calculated and multiplied by 10 (just to differentiate it from the app-specific scores) in order to generate the Overall Score.
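
To make the scoring concrete, here is a rough Python sketch of the calculation as described above. The function names are ours, every number in the example is a made-up placeholder rather than a measured result, and treating the Video AI score as a geomean of per-preset FPS is our assumption about how that app fits the same scheme.

```python
from statistics import geometric_mean


def app_score(batch_rates):
    """Geometric mean of one application's per-batch results.

    batch_rates maps a batch label (for example a RAW format) to a rate such
    as images per minute or FPS. Every value must be positive.
    """
    return geometric_mean(list(batch_rates.values()))


def overall_score(app_scores):
    """Geometric mean of the app scores, multiplied by 10."""
    return geometric_mean(list(app_scores.values())) * 10


if __name__ == "__main__":
    # Placeholder values for illustration only, NOT benchmark results.
    scores = {
        "Gigapixel AI": app_score({"CR2": 4.0, "NEF": 5.0, "ARW": 4.5, "RAF": 3.5}),
        "Video AI": app_score({"Chronos": 12.0, "Dione": 30.0, "Proteus": 9.0}),
    }
    print(round(overall_score(scores), 1))
```

A geometric mean is used rather than a simple average so that one test with large raw numbers (such as a high-FPS video preset) does not dominate the combined score.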

Test Setup

Since this is the first time we are taking a close look at hardware performance with the Topaz AI suite, we decided to go relatively wide and cover a range of hardware. In the interest of time (and because we really didn’t know what we would find), we skipped some GPU and CPU models we normally would test and kept it to what we were able to run in a couple of days.

With the results we get in this testing, we hope to be able to fine-tune our testing in the future so that we can focus on the type of hardware that makes the biggest impact on performance in Topaz AI.

AMD Ryzen 5000 Platform

| Component | Hardware |
|---|---|
| CPU | AMD Ryzen 9 5950X 16 Core |
| CPU Cooler | Noctua NH-U12A |
| Motherboard | Gigabyte X570 AORUS ULTRA |
| RAM | 4x DDR4-3200 16GB (64GB total) |
| GPU | NVIDIA GeForce RTX 4080 16GB |
| Storage | Samsung 980 Pro 2TB |
| OS | Windows 11 Pro 64-bit (22621) |

AMD Ryzen 7000 Platform

| Component | Hardware |
|---|---|
| CPU | AMD Ryzen 9 7950X 16 Core, AMD Ryzen 9 7900X 12 Core, AMD Ryzen 7 7700X 8 Core |
| CPU Cooler | Noctua NH-U12A |
| Motherboard | Asus ProArt X670E-Creator WiFi |
| RAM | 2x DDR5-4800 32GB (64GB total) |
| GPU | NVIDIA GeForce RTX 4080 16GB |
| Storage | Samsung 980 Pro 2TB |
| OS | Windows 11 Pro 64-bit (22621) |

AMD TR PRO 5000 Platform

| Component | Hardware |
|---|---|
| CPU | AMD Threadripper PRO 5975WX 32-Core |
| CPU Cooler | Noctua NH-U14S TR4-SP3 (AMD TR4) |
| Motherboard | Asus Pro WX WRX80E-SAGE SE WIFI |
| RAM | 8x Micron DDR4-3200 16GB ECC Reg. (128GB total) |
| GPU | NVIDIA GeForce RTX 4080 16GB |
| Storage | Samsung 980 Pro 2TB |
| OS | Windows 11 Pro 64-bit (22621) |

Intel Core 13th Gen Platform

| Component | Hardware |
|---|---|
| CPU | Intel Core i9 13900K 8+16 Core, Intel Core i7 13700K 8+8 Core, Intel Core i5 13600K 6+8 Core |
| CPU Cooler | Noctua NH-U12A |
| Motherboard | Asus ProArt Z690-Creator WiFi |
| RAM | 2x DDR5-4800 32GB (64GB total) |
| GPU | NVIDIA GeForce RTX 4080 16GB |
| Storage | Samsung 980 Pro 2TB |
| OS | Windows 11 Pro 64-bit (22621) |

GPU Testing Platform

Software

| Application | Version |
|---|---|
| Topaz Gigapixel AI | 6.2.2 |
| Topaz DeNoise AI | 3.7.0 |
| Topaz Sharpen AI | 4.1.0 |
| Topaz Video AI | 3.0.3.0 |

All driver/BIOS/Windows updates applied as of 11/30/2022

This is a much wider variety of hardware than what we normally look at since we are combining both CPU and GPU testing into a single article – and testing with four different Topaz AI applications at the same time! However, since we don’t quite know how these applications are going to behave with different CPUs, we wanted to cover most of our bases. In fact, until we completed our CPU testing, we didn’t even know which base platform and CPU we were going to use for the GPU portion of our testing.

A bit of a spoiler, but since the Core i9 13900K ended up giving the highest overall performance out of all the CPUs we tested, we used that as a base for our GPU testing. If we do multi-GPU testing in the future (which for most of these apps is still flagged as “experimental”), we will likely need to include a beefier platform like Threadripper Pro to make sure that we are not being CPU bottlenecked. But for a single GPU, the Core i9 13900K appears to be the best CPU overall and should allow each GPU to perform to the best of its ability.

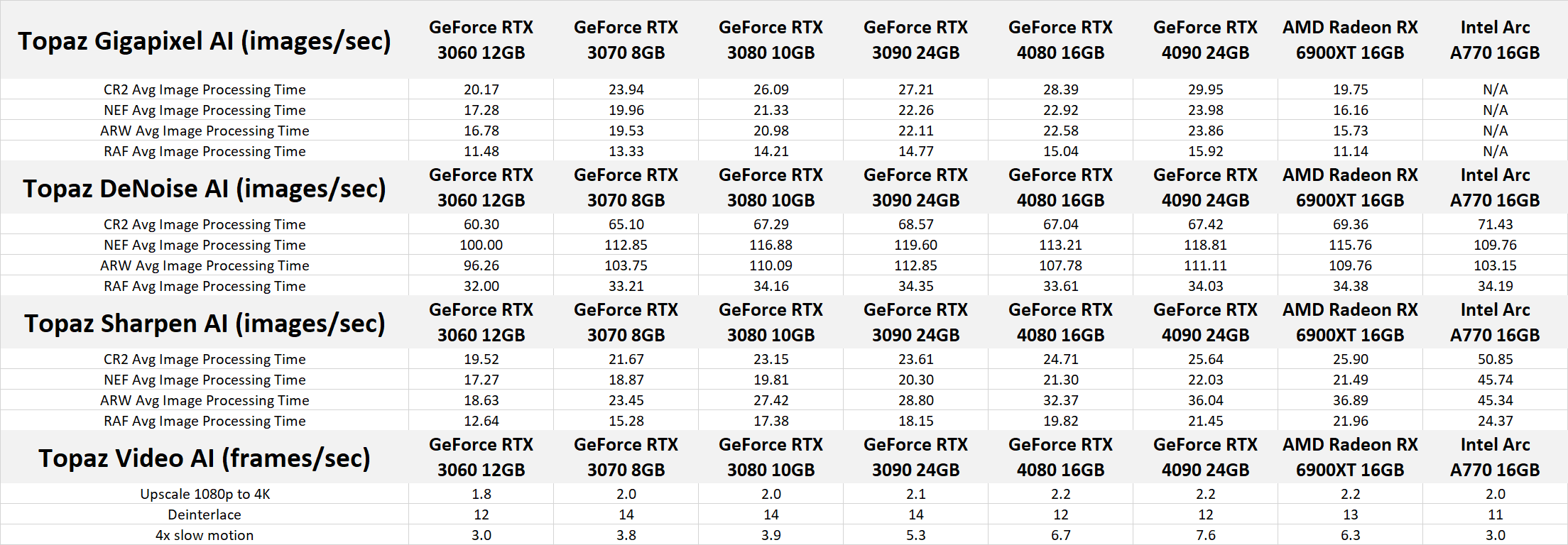

Raw Benchmark Data

Before diving into the analysis of our results, we wanted to include the raw data from our testing. Especially as this is a whole new test set for us, we wanted to be as transparent as possible about what we tested, and the results of those tests.

Topaz AI CPU Benchmark Results

To start off the analysis of our testing, we are going to look at the CPU performance in each Topaz AI application. Note that this is using the GPU for processing in each application, even though we are looking at CPU performance. We could switch to CPU mode, which would likely show a greater difference between each CPU, but that is rarely used due to how much faster it is to use the GPU for processing.

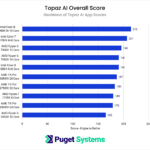

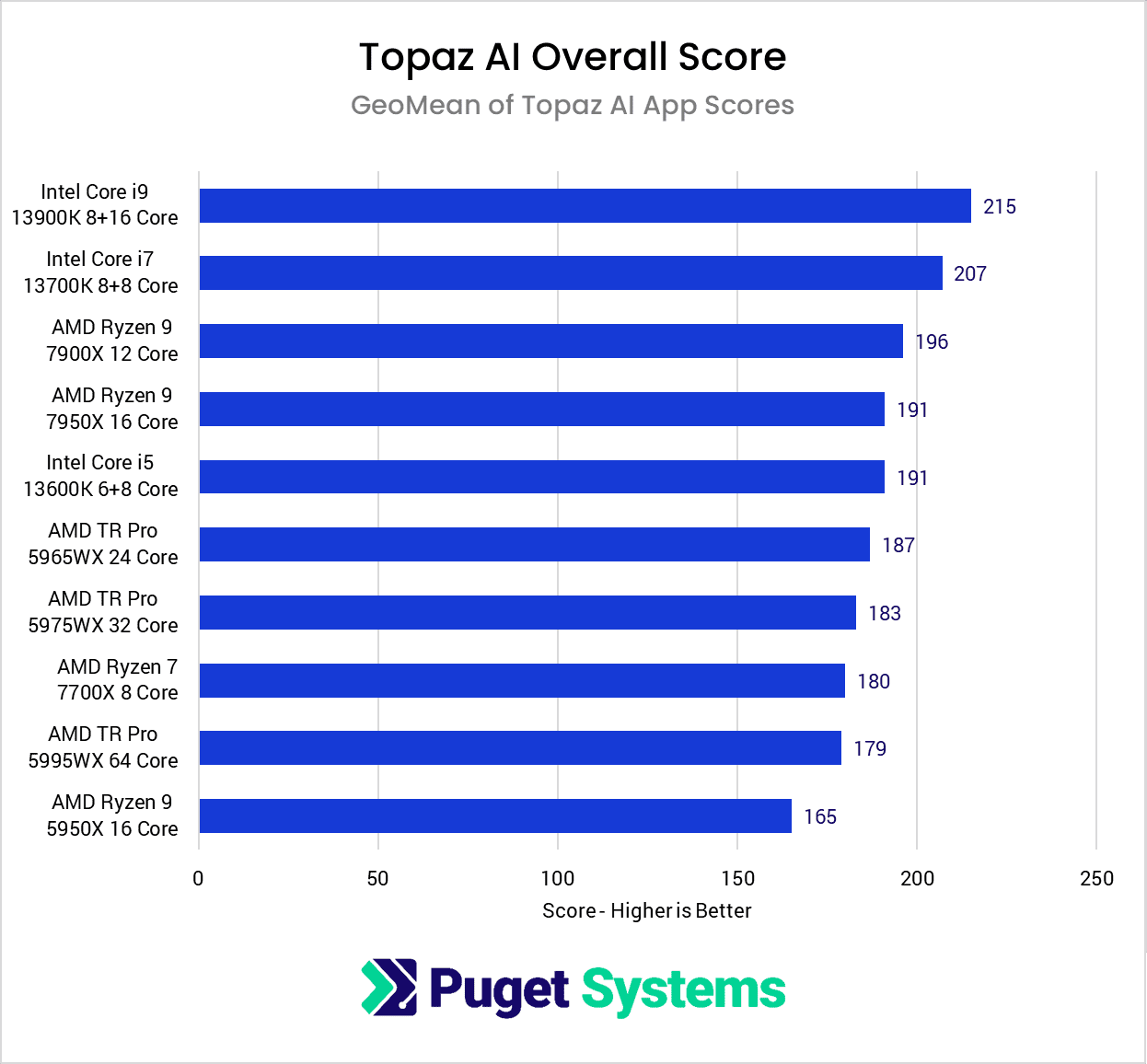

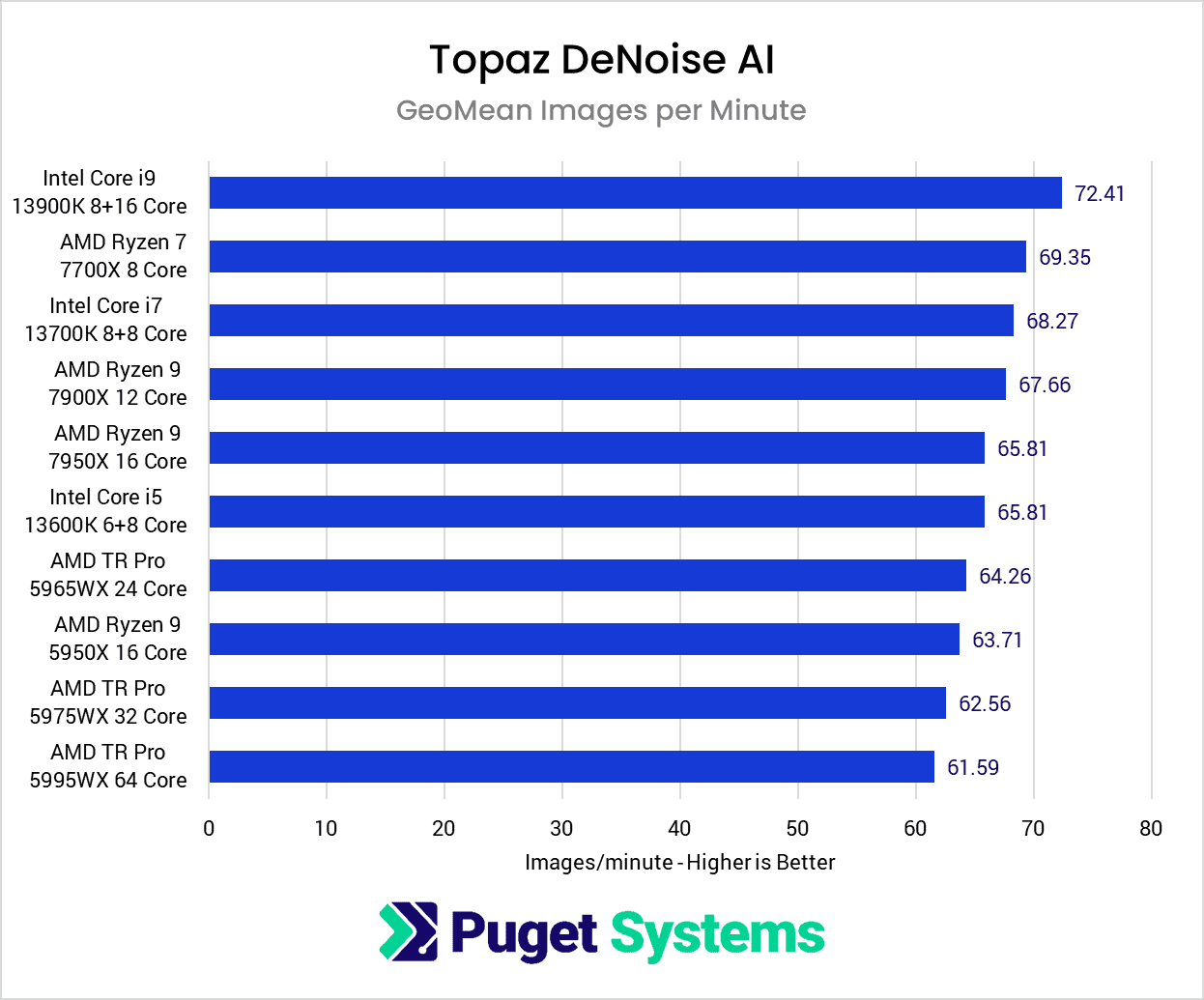

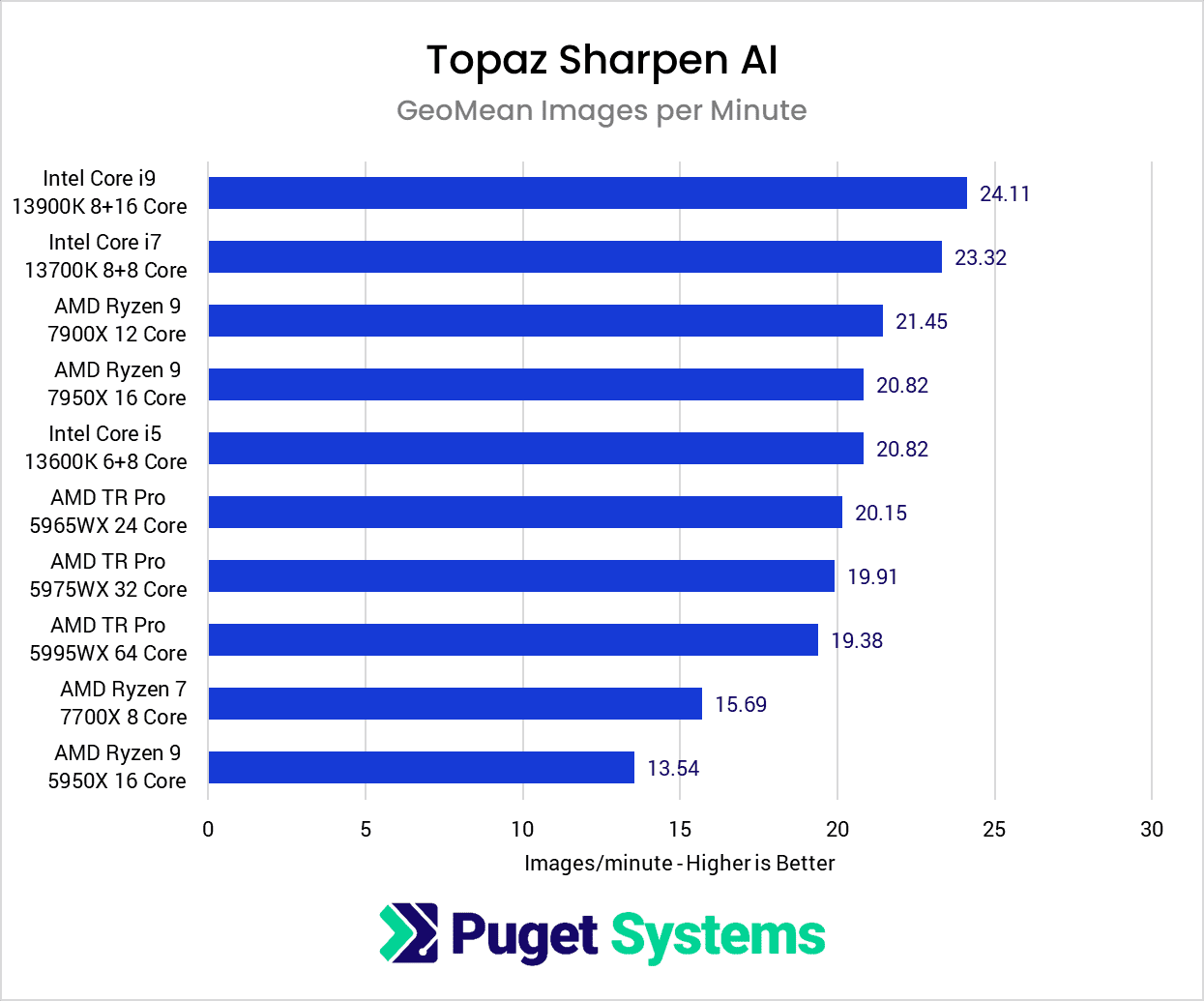

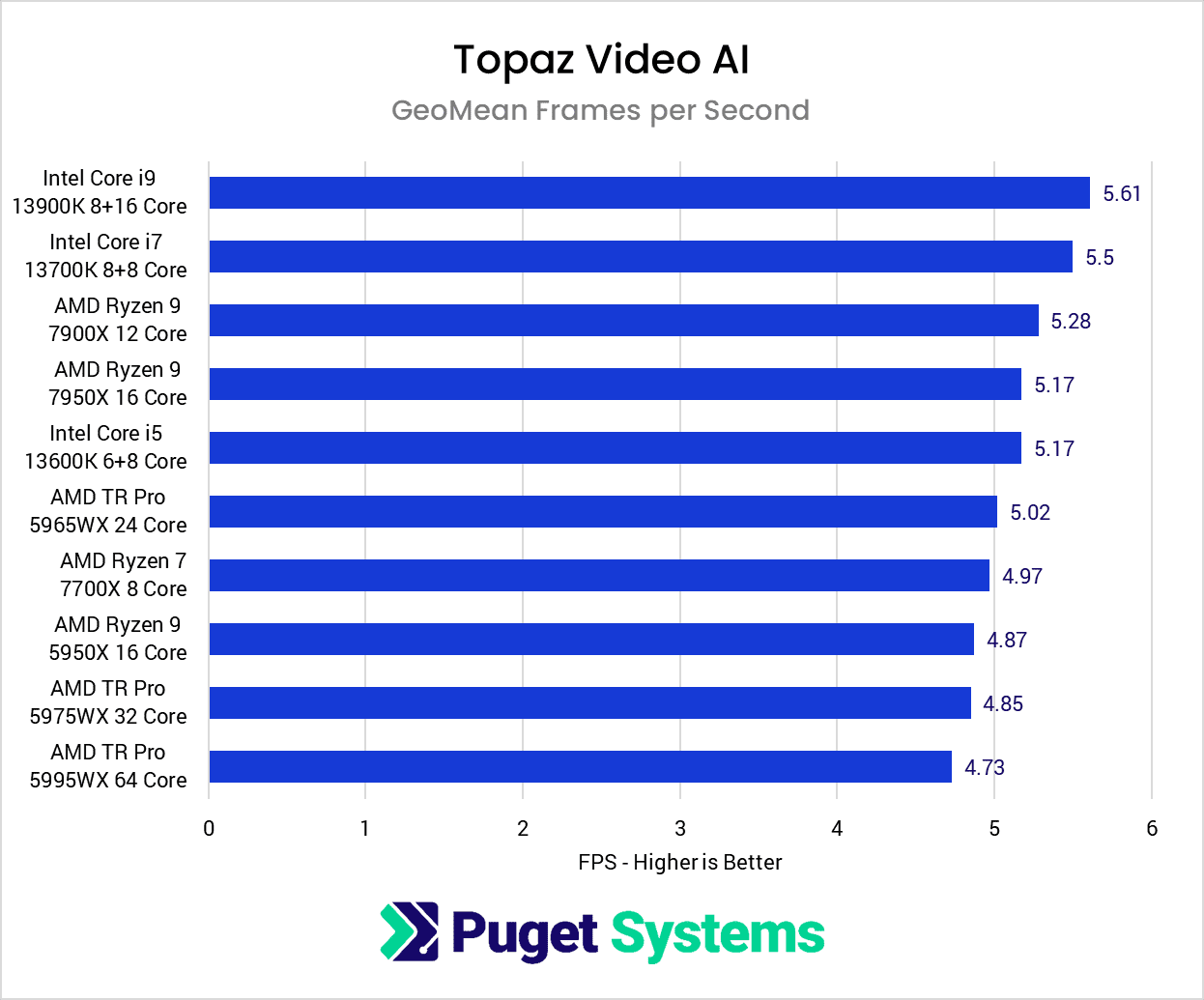

Looking at the combined results across all four Topaz AI applications we tested, Intel is the clear winner with its Core 13th Gen processors. The Core i9 13900K ended up scoring about 10% faster overall than the fastest AMD CPU (the Ryzen 7900X) and secured the top spot in every single benchmark.

The Core i7 13700K also did very well, although the AMD Ryzen 7700X did manage to sneak by it by about 1.5% for Topaz DeNoise AI. DeNoise is actually very interesting as the AMD Ryzen 7000 series tended to do better with the lower core count CPUs. We did CPU and GPU load logging during these tests and found that DeNoise is one of the more lightly threaded of these applications, so we may be looking at some sort of cache or turbo limitation that is allowing the 7700X to out-perform the 7900X and 7950X.

Overall, it was surprising how little the CPU seems to matter within a single family of products from Intel and AMD. Per-core performance seems to be the main name of the game for Topaz AI, which generally means going with the latest generation consumer-grade CPU if you want the best possible performance. Going with a higher-end model within those families, however, will only give you a marginal increase.

For example, going from the Core i5 13600K to the Core i9 13900K only nets about a 13% performance increase on average. And for AMD, going from the Ryzen 7 7700X to the 7900X (which was consistently faster than the 7950X) is only 9%. We didn’t get the chance to include the Ryzen 5 7600X this time around, but we suspect that it would only be a hair slower than the 7700X.
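
For readers who want to run the same comparison on their own numbers, the percentages quoted here are simple relative gains between two scores. The snippet below is a small sketch with a hypothetical `percent_gain` helper; the scores passed in are illustrative placeholders, not values from our charts.

```python
def percent_gain(baseline: float, candidate: float) -> float:
    """Relative performance gain of candidate over baseline, in percent.

    Both inputs are "higher is better" scores, such as images per minute,
    FPS, or an overall score.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (candidate / baseline - 1.0) * 100.0


if __name__ == "__main__":
    # Placeholder scores for illustration only, NOT benchmark results.
    lower_end_cpu = 100.0
    higher_end_cpu = 113.0
    print(f"{percent_gain(lower_end_cpu, higher_end_cpu):.1f}% faster")
```

Note that the result depends on which CPU is treated as the baseline, so a "13% faster" CPU is not the same as the other one being "13% slower".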

This is talking in generalities, and certain applications (Sharpen AI in particular) showed a much larger benefit from using a better CPU than the other applications did. Even in Sharpen AI, however, it is much more about getting the right family of processors (Core 13th Gen) than anything else.

Topaz AI GPU Benchmark Results

Moving on to the GPU-based results, we did all our testing with the Core i9 13900K as that was the fastest CPU for every Topaz AI application.

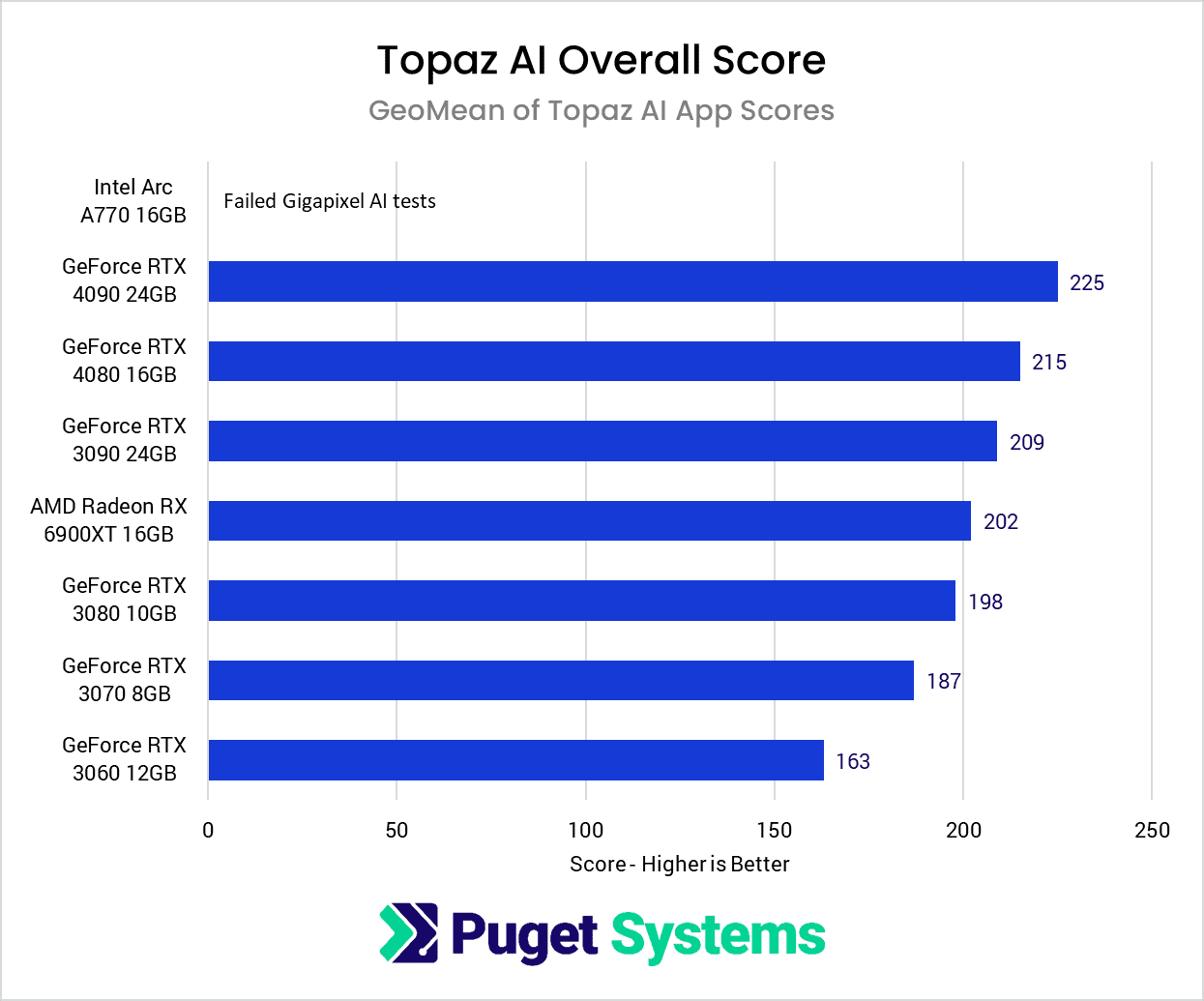

Starting off with the combined geometric mean across all four Topaz AI applications, the results are surprisingly uninteresting outside of the Intel Arc A770. For whatever reason, the A770 GPU consistently failed in Gigapixel AI, causing the application to crash when working with specific .CR2 image files. Because of this, we were unable to generate an overall score for that particular GPU.

Outside of the Arc card, each of the GPUs we tested performed almost exactly as you would expect taking into account the generation of each GPU, and its MSRP pricing. For example, the AMD Radeon 6900 XT performed right in between the NVIDIA GeForce RTX 3080 and 3090 ($699 and $1,499 respectively), which is right in line with its $999 MSRP. This gives AMD a slight edge due to the larger VRAM found on the 6900 XT, but Topaz AI doesn’t require a large amount of VRAM from what we could see in our testing, so that isn’t a terribly big deal for Topaz AI itself.

For NVIDIA, the RTX 40-series cards are certainly faster than the previous 30-series, with the RTX 4080 scoring just above the more expensive RTX 3090. We didn’t include the Ti variants this time around, but we suspect that the RTX 4080 would also be able to match the RTX 3090 Ti (at a much lower MSRP price point).

However, this is all talking about the geomean across each application. If we dive into individual apps, things get a bit more complicated.

For Gigapixel AI (chart #2), the AMD Radeon 6900 XT actually did very poorly – coming in at the very bottom of our chart. This is also where the Intel Arc card completely failed, so if image upscaling is the main thing you are looking for, NVIDIA is definitely the way to go.

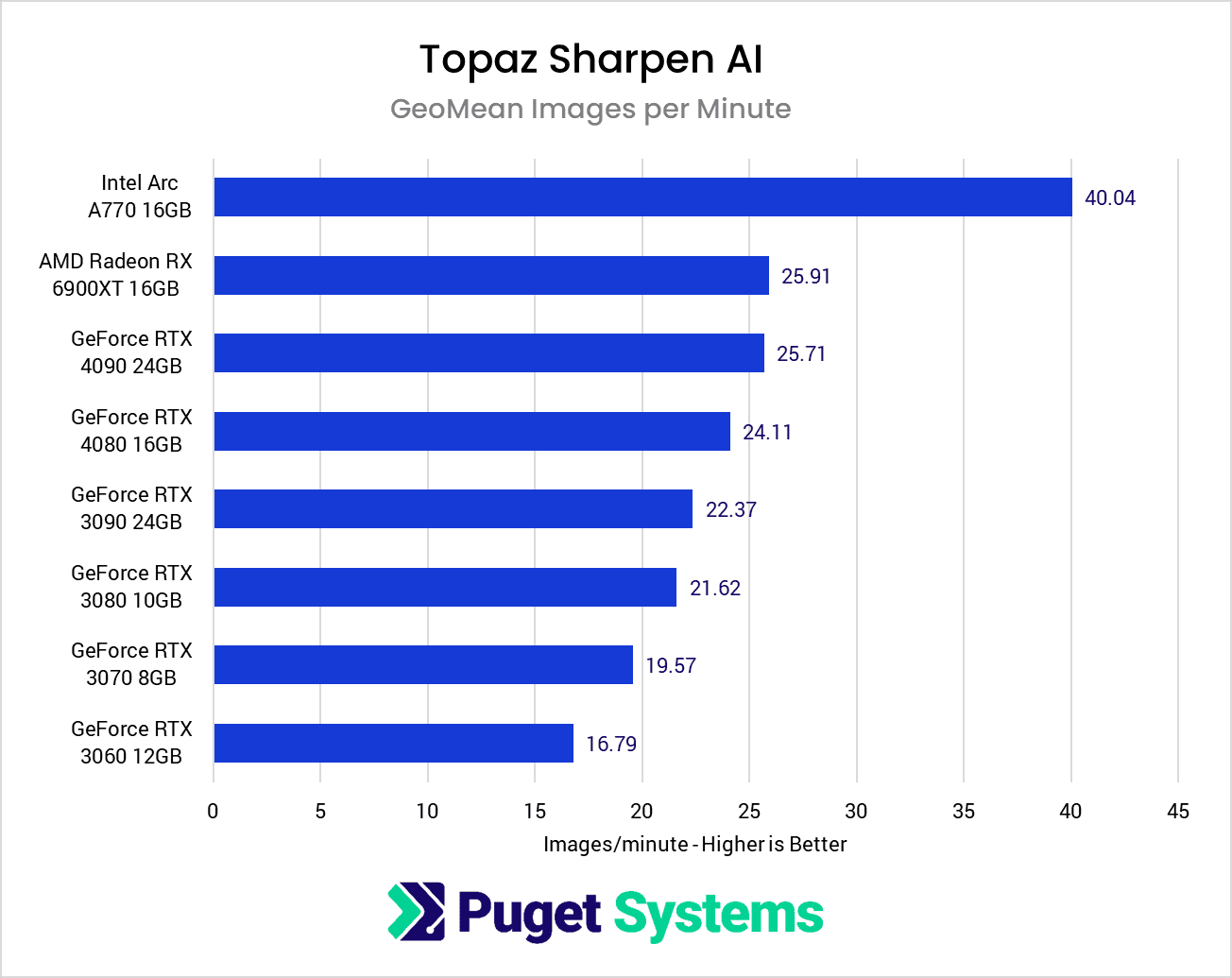

Sharpen AI (chart #3), on the other hand, is almost precisely the opposite. The Intel Arc A770 did amazingly well here, beating the next fastest GPU by 55%. We did a lot of double-checking to make sure the exported image was the same between the A770 and the other GPUs, and as far as we could tell, this is a completely valid result. At first, we suspected it had something to do with Intel Hyper Compute (where Topaz AI is specifically listed as being able to use the Arc dGPU in conjunction with the iGPU), but we got nearly identical performance even when we disabled the iGPU.

Beyond Intel’s amazing result for Sharpen AI, AMD also did very well with the Radeon 6900 XT almost exactly matching the GeForce RTX 4090. This makes us very excited to test the just-released AMD Radeon 7900 XTX to see how it compares.

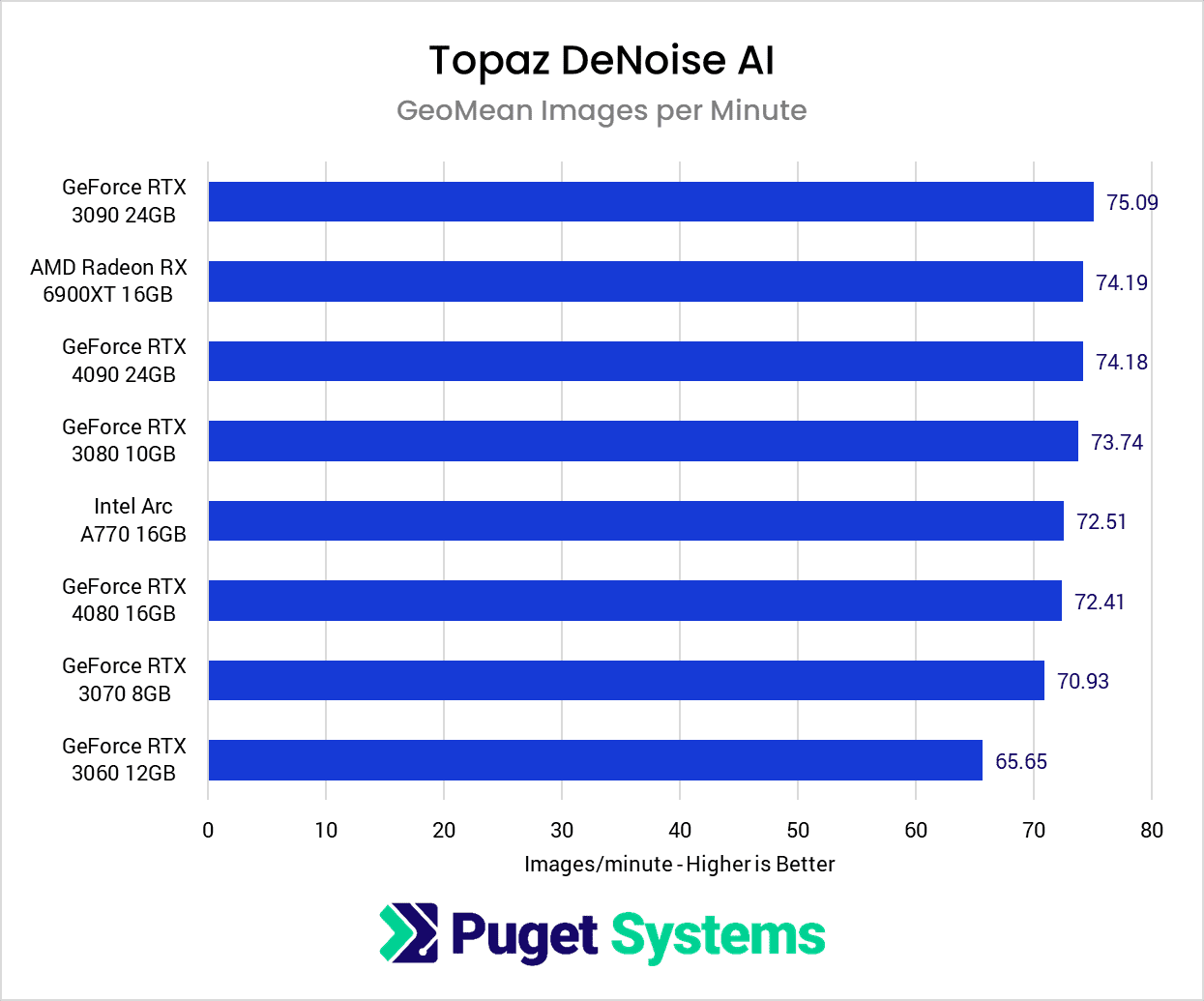

Topaz DeNoise AI (chart #4) is the one application where the GPU didn’t seem to matter all that much. There was a slight performance drop with the RTX 3060, but all the other GPUs were within 6% of each other. That is close enough to the margin of error for this kind of testing that it is very difficult to tell which GPU is actually faster. Interestingly, DeNoise AI also showed some of the smaller performance deltas for the CPU portion of our testing, suggesting that the main bottleneck is something beyond the CPU and GPU.

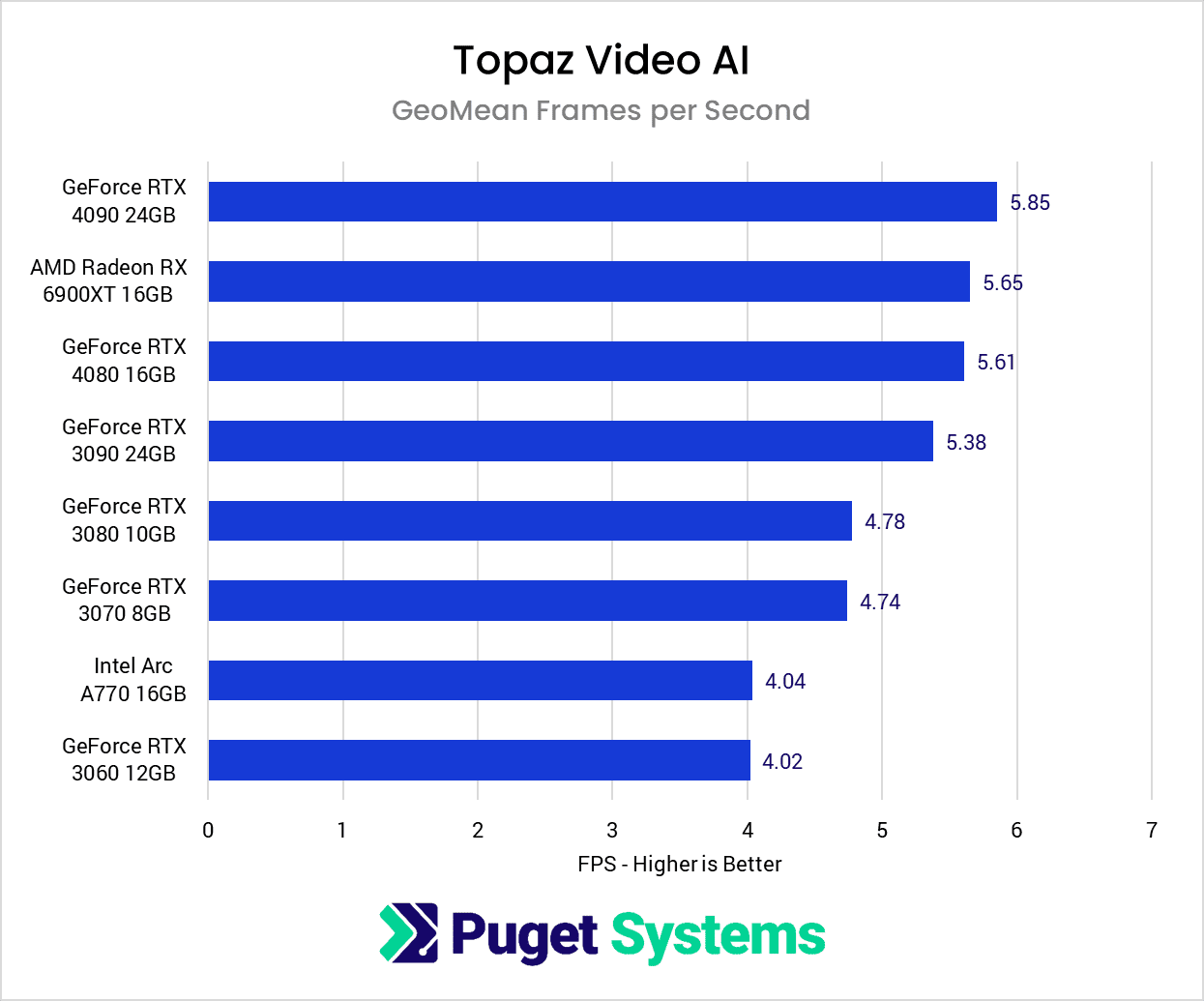

Last up is Topaz Video AI (chart #5). Here, the Intel Arc A770 performs more as expected, coming in on par with the GeForce RTX 3060. AMD, however, once again does very well, performing equal to the RTX 4080. The RTX 4090 can still give you a bit more performance, but only by a few percent which is going to be hard to notice in the real world.

Overall, it is very difficult to name the best GPU for Topaz AI, as it changes depending on which application you are using. The NVIDIA GeForce 30- and 40-series are consistently solid, but AMD can be on par or even faster in specific applications like Sharpen AI and Video AI. Intel Arc is even more polarized, with crashing issues in Gigapixel AI, but head-and-shoulders better performance over NVIDIA and AMD in Topaz Sharpen AI.

What is the Best CPU and GPU for Topaz AI?

Based on our testing in Topaz Gigapixel AI, Sharpen AI, DeNoise AI, and Video AI, the best overall CPU for Topaz AI is Intel’s Core 13th Gen family of processors. Going between the i5, i7, and i9 models only nets a relatively small 13% increase in performance. Still, if you are looking for the best possible CPU for Topaz AI, the Intel Core i9 13900K consistently gave us the best performance regardless of the base application. This could change if you want to use these applications in CPU processing mode, but that method is rarely used in favor of much faster GPU processing.

On the GPU side, however, things are not as clear-cut. The NVIDIA GeForce 30- and 40-series cards were consistently very good, especially in Gigapixel AI. On the other hand, the AMD Radeon 6900 XT did extremely well in Sharpen AI and Video AI, often matching or beating even more expensive GPUs from NVIDIA.

Intel was a mixed bag, giving impressive performance in Sharpen AI but failing to complete our tests in Gigapixel AI without crashing. This keeps us from recommending Arc for Topaz AI in general, but this is likely due to a 1st generation product bug that will hopefully get resolved with a simple driver update.

This is only our very first set of testing, and we plan on integrating these benchmarks into our regular hardware articles in the future. Our CPU results are about as clear as you can hope for from real-world testing like this, but we feel like there is still a lot to examine when it comes to GPUs. Most notably, we want to take a look at the recently launched AMD Radeon 7900XT(X), but we also want to examine multi-GPU functionality. That is fully supported on Video AI, but flagged as “experimental” for the photo-based applications, so we are very curious to see how it works.

Since this is the first time we are looking at Topaz AI, we highly encourage you to let us know your thoughts in the comments section below. Especially if these applications are a part of your normal workflow, we want to hear about how we can tweak this testing to make it even more applicable to you!