
Introduction

A month ago, NVIDIA began rolling out their GeForce RTX 40 series GPUs, starting with the RTX 4090 24GB. We have a full NVIDIA GeForce RTX 4090 24GB Content Creation Review article covering the launch of that card, but today we have the opportunity to take a look at the new GeForce RTX 4080 16GB.

While the RTX 4090 has the same 24GB of VRAM as the previous generation RTX 3090 and 3090 Ti, NVIDIA has increased the VRAM on the RTX 4080 all the way up to 16GB. That is a large bump over the 10GB and 12GB on the RTX 3080 and 3080 Ti, respectively, and is one of the largest VRAM increases we have seen from NVIDIA in years. Regardless of any performance increase we may find with the RTX 4080, this alone makes it much more attractive for video editors working with 6K+ media, VFX artists rendering large scenes, and many real-time engine workflows.

If you want to see the full specs for the RTX 40 series GPUs, we recommend checking out the NVIDIA GeForce RTX 40 Series product page. But at a glance, here are the specs we consider most important:

| GPU | VRAM | Cores | Boost Clock | Power | MSRP |
|---|---|---|---|---|---|
| RTX 3060 | 12GB | 3,584 | 1.75 GHz | 170W | $329 |
| RTX 3060 Ti | 8GB | 4,864 | 1.67 GHz | 200W | $399 |
| RTX 3070 | 8GB | 5,888 | 1.70 GHz | 220W | $499 |
| RTX 3070 Ti | 8GB | 6,144 | 1.77 GHz | 290W | $599 |
| RTX 3080 | 10GB | 8,704 | 1.71 GHz | 320W | $699 |
| Radeon RX 6900 XT | 16GB | 5,120 | 2.25 GHz | 300W | $999 |
| RTX 3080 Ti | 12GB | 10,240 | 1.67 GHz | 350W | $1,199 |
| RTX 4080 | 16GB | 9,728 | 2.51 GHz | 320W | $1,199 |
| RTX 3090 | 24GB | 10,496 | 1.73 GHz | 350W | $1,499 |
| RTX 4090 | 24GB | 16,384 | 2.52 GHz | 450W | $1,599 |
| RTX 3090 Ti | 24GB | 10,752 | 1.86 GHz | 450W | $1,999 |

NVIDIA increased the price of the RTX 4090 a bit over the previous generation RTX 3090, but kept it well below the RTX 3090 Ti. For the RTX 4080, however, you could either say that NVIDIA increased the price significantly over the RTX 3080 ($1,199 vs $699), or you could say that it is equivalent to the RTX 3080 Ti. The comparison versus the RTX 3080 Ti is likely the more accurate one, since at the same $1,199 MSRP you get 4GB more VRAM and 30W lower power draw (and heat output), in addition to the performance gains we will be examining in this article.
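To make the RTX 4080 versus RTX 3080 Ti comparison concrete, the short Python sketch below subtracts a few of the spec values listed in the table. The dictionary simply copies those table rows (core counts are left out), and `spec_delta` is a hypothetical helper name used only for this illustration.

```python
# Key specs copied from the table above (VRAM in GB, boost clock in GHz,
# power in W, MSRP in USD). Core counts are omitted for brevity.
SPECS = {
    "RTX 3080": {"vram_gb": 10, "boost_ghz": 1.71, "power_w": 320, "msrp_usd": 699},
    "RTX 3080 Ti": {"vram_gb": 12, "boost_ghz": 1.67, "power_w": 350, "msrp_usd": 1199},
    "RTX 4080": {"vram_gb": 16, "boost_ghz": 2.51, "power_w": 320, "msrp_usd": 1199},
}


def spec_delta(new: str, old: str) -> dict:
    """Return new-minus-old for every spec field (hypothetical helper)."""
    return {key: round(SPECS[new][key] - SPECS[old][key], 2) for key in SPECS[new]}


if __name__ == "__main__":
    # RTX 4080 vs RTX 3080 Ti: +4 GB VRAM, -30 W, same MSRP
    print(spec_delta("RTX 4080", "RTX 3080 Ti"))
    # RTX 4080 vs RTX 3080: +6 GB VRAM, same power, +$500 MSRP
    print(spec_delta("RTX 4080", "RTX 3080"))
```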

While not a part of the specs above, another change NVIDIA is making with the RTX 40 Series is the move to dual NVIDIA Encoders (NVENC). This should make these cards significantly faster when encoding H.264 and HEVC media in applications that support it.

Test Setup

Test Platform

Benchmark Software

| Software |
|---|
| DaVinci Resolve 18.0.3 with PugetBench for DaVinci Resolve 0.93.1 |
| Premiere Pro 22.6.2 with PugetBench for Premiere Pro 0.95.5 |
| After Effects 22.4 with PugetBench for After Effects 0.95.2 |
| Unreal Engine 4.26 |
| OctaneBench 2020.1.5 |
| Redshift 3.5.09 |
| V-Ray Benchmark 5.02.00 |
| Blender 3.3.0 |

To see how the RTX 4080 (and RTX 4090) perform, we will be comparing them to the full range of RTX 30 series GPUs and the older NVIDIA GeForce RTX 2080 Ti. We will also include the AMD Radeon RX 6900 XT to get a sense of performance versus NVIDIA’s main competitor. Our test system is built around the AMD Threadripper PRO 5975WX, making it one of the fastest platforms currently available for most of the applications we are testing, in order to minimize any potential CPU bottlenecks.

For the tests themselves, we will be using many of our PugetBench benchmarks. Most of these include the ability to upload the results to our online database, so if you want to know how your system compares, you can download and run the benchmark yourself. Our testing also includes several GPU rendering engines to show off the GPU rendering performance of these cards.

Video Editing: DaVinci Resolve Studio

For our first look at the new GeForce RTX 4080 16GB, we are going to examine performance in DaVinci Resolve Studio. More so than any other NLE (Non-Linear Editor) currently on the market, Resolve can make terrific use of high-end GPUs, and even multi-GPU setups. The four main areas the GPU is used for are processing GPU effects, debayering (and optionally decoding) RAW media, H.264/HEVC decoding, and H.264/HEVC encoding.

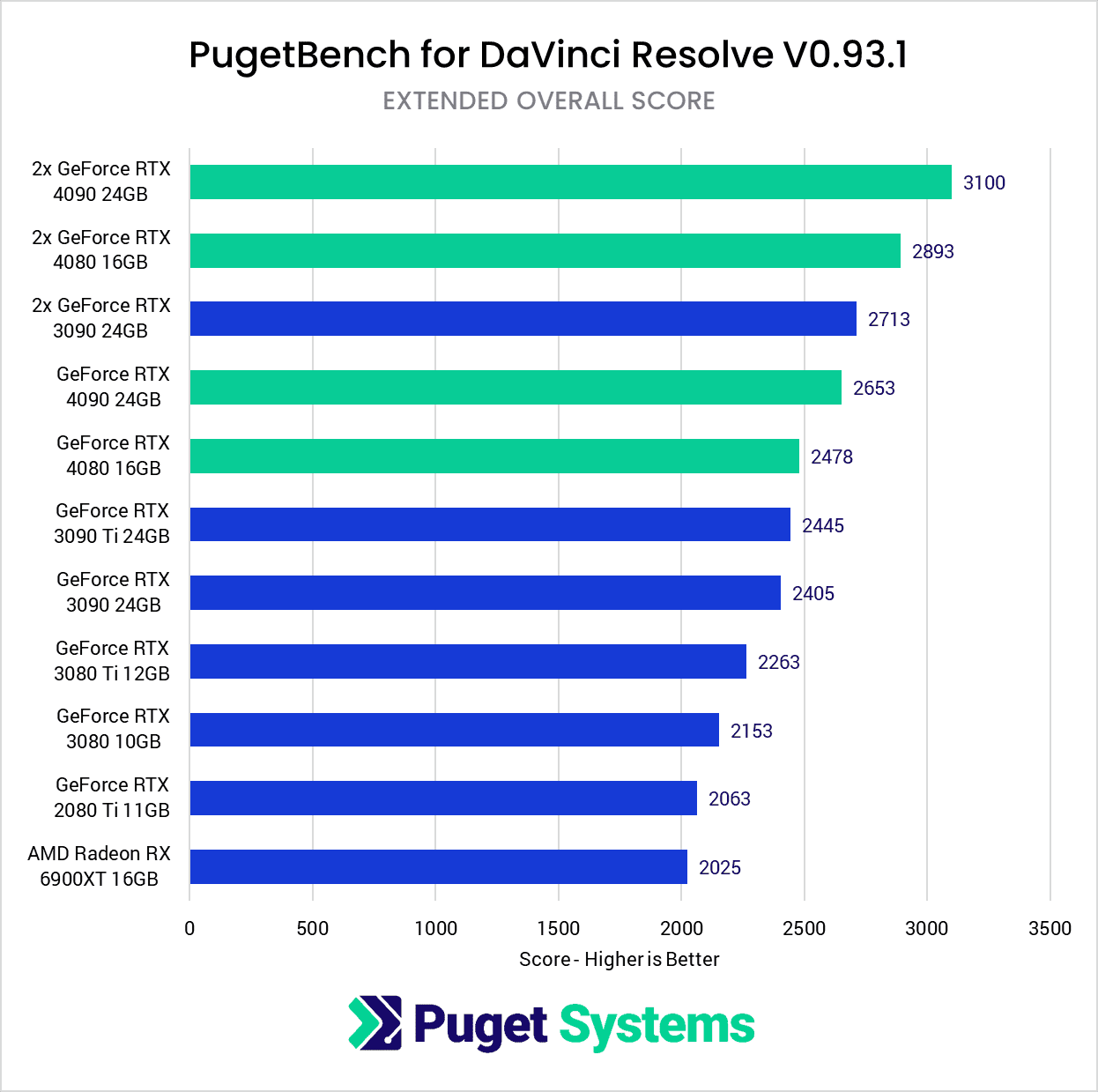

To start, we want to look at the overall score, which is a combination of everything we test for Resolve. This is often a good indicator of what kind of relative performance a “typical” (if such a thing exists) Resolve user may see with different hardware. Here, the RTX 4080 scores about 10% higher than the RTX 3080 Ti, and is about on par with the fastest previous-generation GPU – the RTX 3090 Ti.

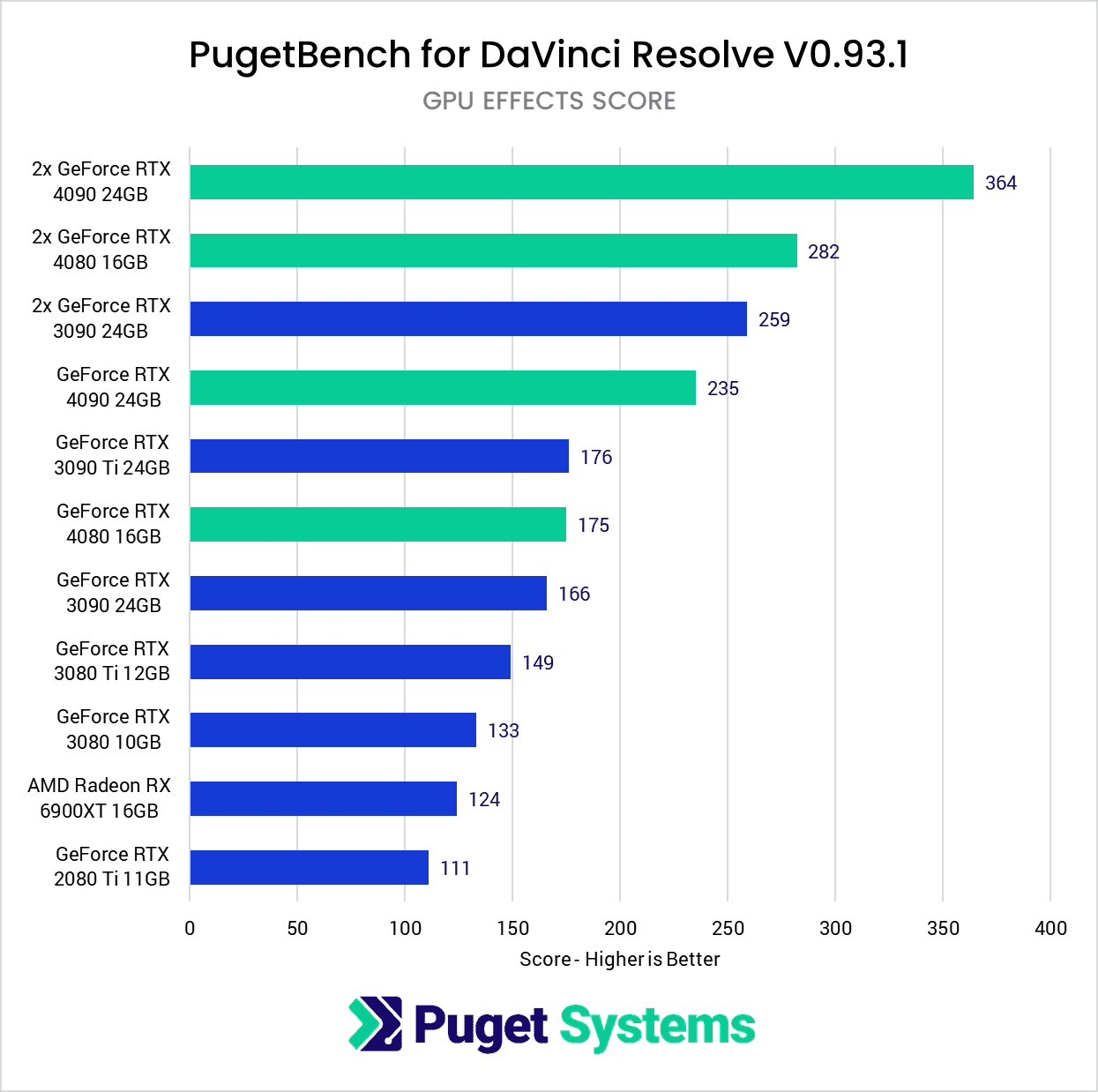

However, this is the overall score, which includes several tasks that are either purely CPU driven, or often bottlenecked by the CPU. If we look at the GPU Score (chart #2), we get to see how the GPUs perform for tasks like OpenFX and noise reduction where the performance of the GPU itself is typically the limiting factor. In this case, the RTX 4080 ended up being 18% faster than the RTX 3080 Ti, or again, on par with the RTX 3090 Ti.

This is also a good place to mention the older RTX 2080 Ti. We included this card in our testing to give a reference for how fast the RTX 4080 is compared to a slightly older GPU, and for GPU effects, the RTX 4080 came in at about 58% faster than the RTX 2080 Ti. The RTX 4090 is even more impressive, coming in at just over 2x faster than the RTX 2080 Ti.

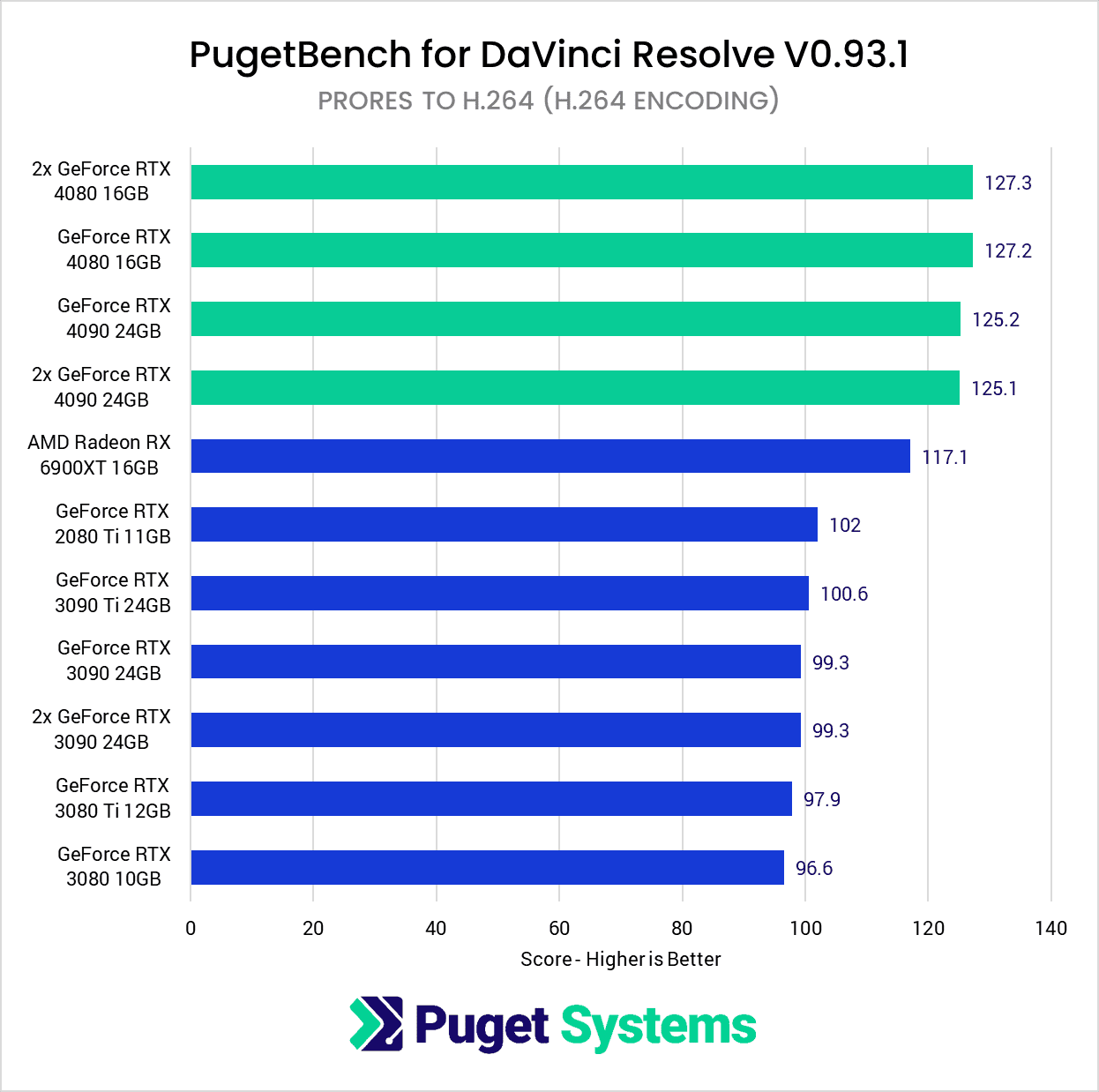

Last, we wanted to specifically look at H.264 encoding (chart #3). There is no easy way to test this in a complete vacuum (since you always have to decode or process a source clip), so we are going to be looking at the results for exporting a ProRes project to H.264, where the main bottleneck should be the H.264 encoding portion. The RTX 40 series includes dual encoders, which are supposed to dramatically reduce export times when using NVENC.

The first thing to notice is that performance is identical with the RTX 4080 and 4090, and is not impacted by using multiple GPUs. Both RTX 40 series cards completed our “ProRes to H.264” export almost exactly 25% faster than any of the other NVIDIA GeForce cards we tested. It is also worth pointing out that this, along with H.264 decoding, is the one area where AMD can keep up with NVIDIA. Everywhere else (RED/BRAW processing, GPU Effects, etc.), NVIDIA has a strong lead.

Video Editing: Adobe Premiere Pro

Adobe Premiere Pro may not utilize the GPU quite as much as DaVinci Resolve (and effectively doesn’t take advantage of multi-GPU setups at all), but having a strong GPU can still make an impact depending on your workflow.

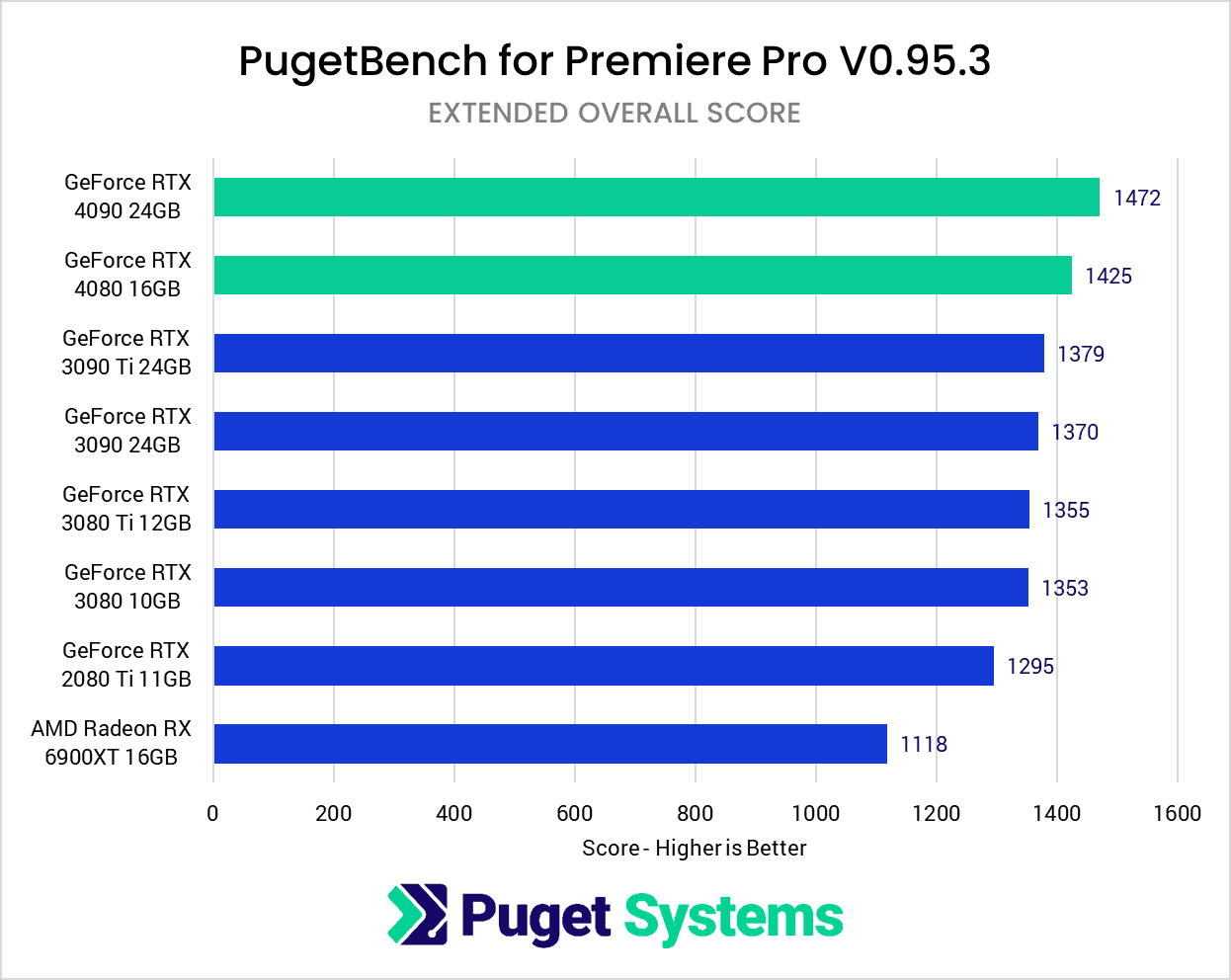

Once again, we are going to start with the Overall Score from our Premiere Pro benchmark, which shows the new RTX 4080 coming in just 5% faster than the RTX 3080 Ti. This is still a few percent faster than even the RTX 3090 Ti, but you will notice that there isn’t much of a performance difference anywhere from the RTX 3080 to the RTX 3090 Ti. There is a clear benefit to going with NVIDIA over AMD, but most of the performance differences between the various NVIDIA GPU models fall along generational lines: RTX 40 is faster than RTX 30, which is in turn faster than the RTX 20 series. Most of this is because many of the tasks in our benchmark are impacted more by the CPU, or by the performance of the NVENC/NVDEC (NVIDIA encoder and decoder) on the cards, than by the raw performance of the GPU itself.

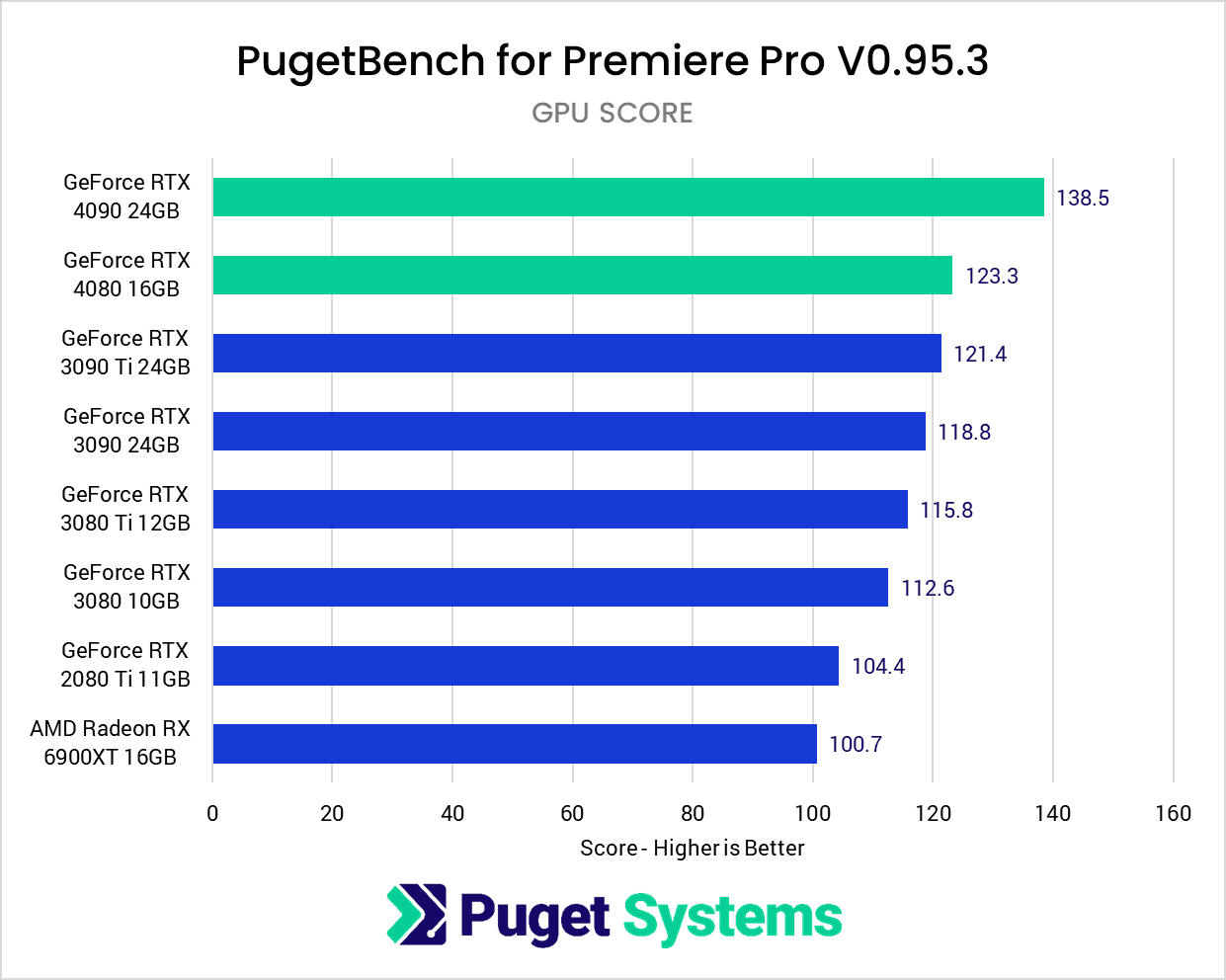

The one area where a more powerful GPU from within a single generation can get you a boost is for GPU-accelerated effects like Lumetri Color, most blurs, etc. (chart #2). For these tasks, the RTX 4080 is about 7% faster than the RTX 3080 Ti, or just a few percent faster than the RTX 3090 Ti. This isn’t as much of a gain as what we saw with the RTX 4090 (which is about 14% faster than the RTX 3090 Ti, or about 17% faster than the RTX 3090), but is about what we would expect in an application like Premiere Pro. Keep in mind that this is just with the base version of Premiere Pro. If you use plugins that take advantage of the GPU (such as many noise reduction plugins), the RTX 40 series could give you a much bigger boost than what we are showing here.

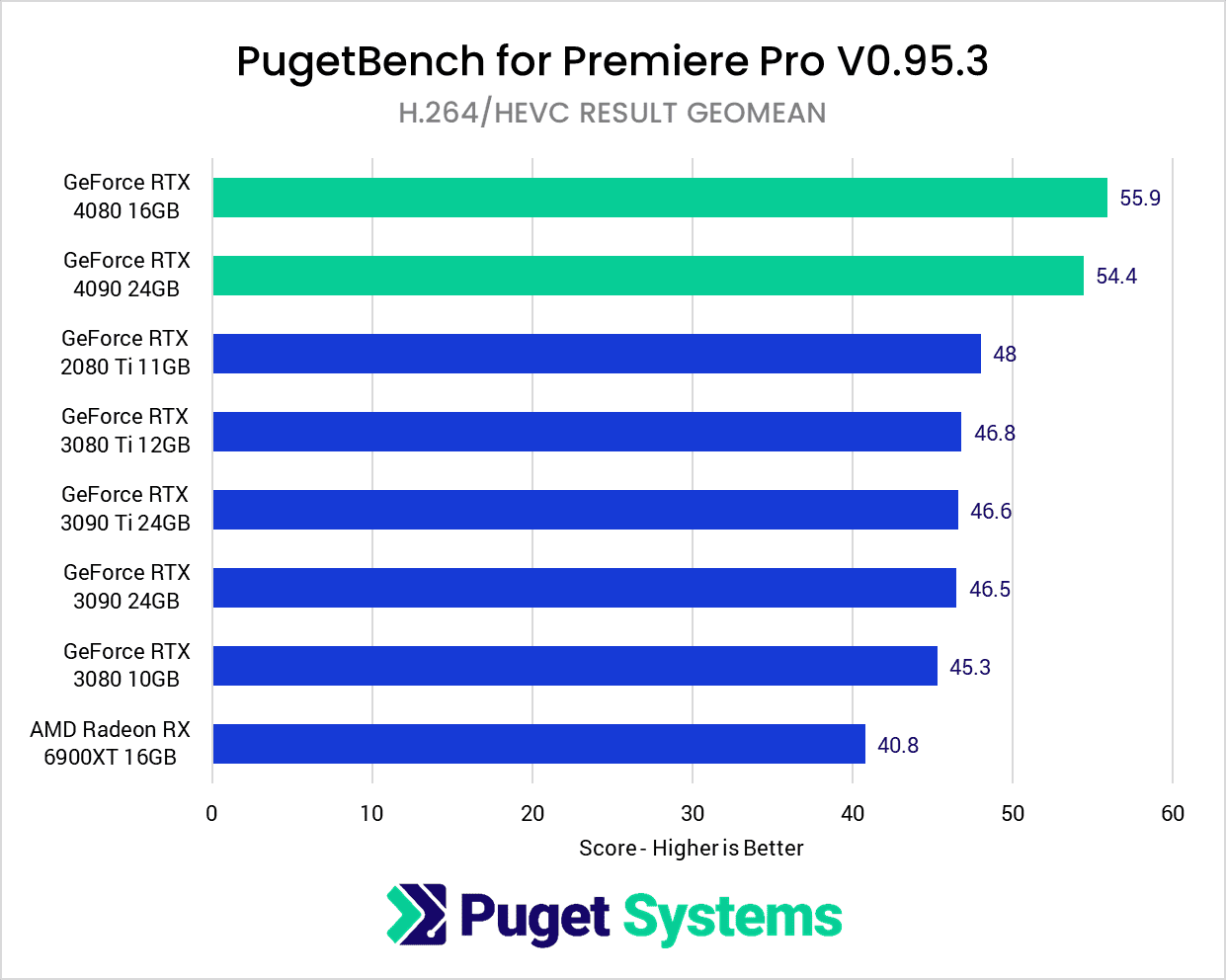

Lastly, on chart #3 we are looking at the geometric mean of all our H.264 and HEVC results. The current version of our Premiere Pro benchmark doesn’t break out a separate score by source codec, but this is something we are doing more and more often, and it will likely be included natively in the next major update of our benchmark. As we mentioned earlier, H.264/HEVC decoding/encoding performance often depends more on the generation of the GPU than on its raw performance, since the NVENC and NVDEC portion of the GPU tends to be the same across all models. Still, there is a small but noticeable performance gain, with the RTX 40 series scoring about 15% higher than the RTX 30 series cards.
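For readers unfamiliar with the geometric mean, here is a minimal Python sketch of how several per-test results can be combined into one number. The input values are hypothetical placeholders, not our benchmark results, and PugetBench's own scoring may apply weighting or normalization not shown here. The useful property is that improving any single test by a given ratio changes the geometric mean by the same factor no matter which test it is, so tests with large absolute numbers do not dominate the combined result.

```python
import math


def geometric_mean(values):
    """n-th root of the product of n positive values, computed in log space
    so that many large results don't overflow."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("need a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-test results (e.g. FPS for an H.264 and an HEVC export).
results = [40.0, 90.0]
print(round(geometric_mean(results), 2))  # 60.0

# Doubling either result raises the mean by the same factor, sqrt(2):
print(round(geometric_mean([80.0, 90.0]) / geometric_mean(results), 4))  # 1.4142
print(round(geometric_mean([40.0, 180.0]) / geometric_mean(results), 4))  # 1.4142
```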

We will quickly point out that for the H.264/HEVC result, the RTX 4080 scored a few percent higher than the RTX 4090. The difference is really within the margin of error for this kind of test, but the RTX 4080 also scored slightly higher on the H.264 encoding test in DaVinci Resolve. Most likely we are just looking at a margin-of-error difference, but there is a small chance the pre-launch driver for the RTX 4080 included a few optimizations not found in the release driver for the RTX 4090.

Motion Graphics/VFX: Adobe After Effects

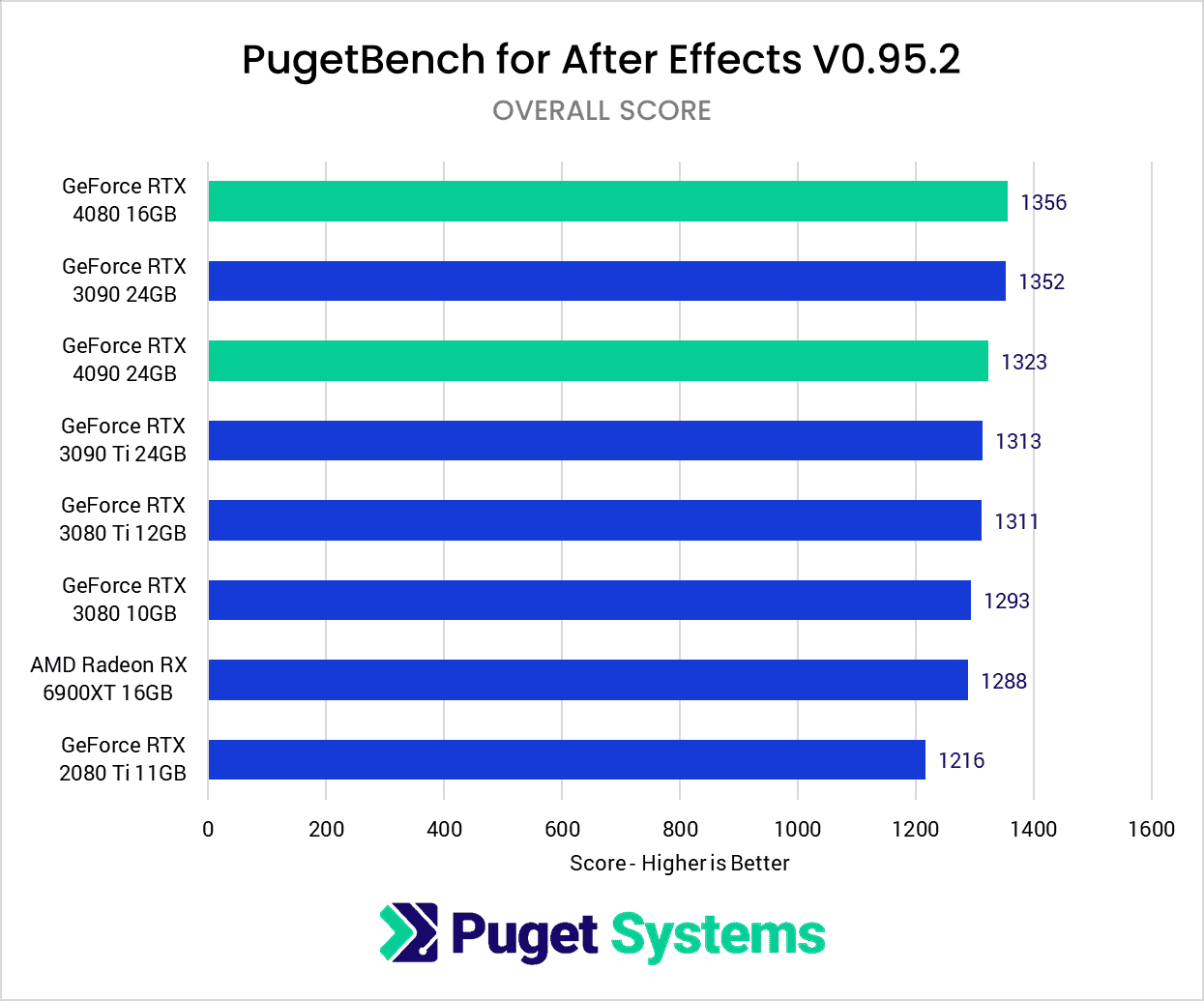

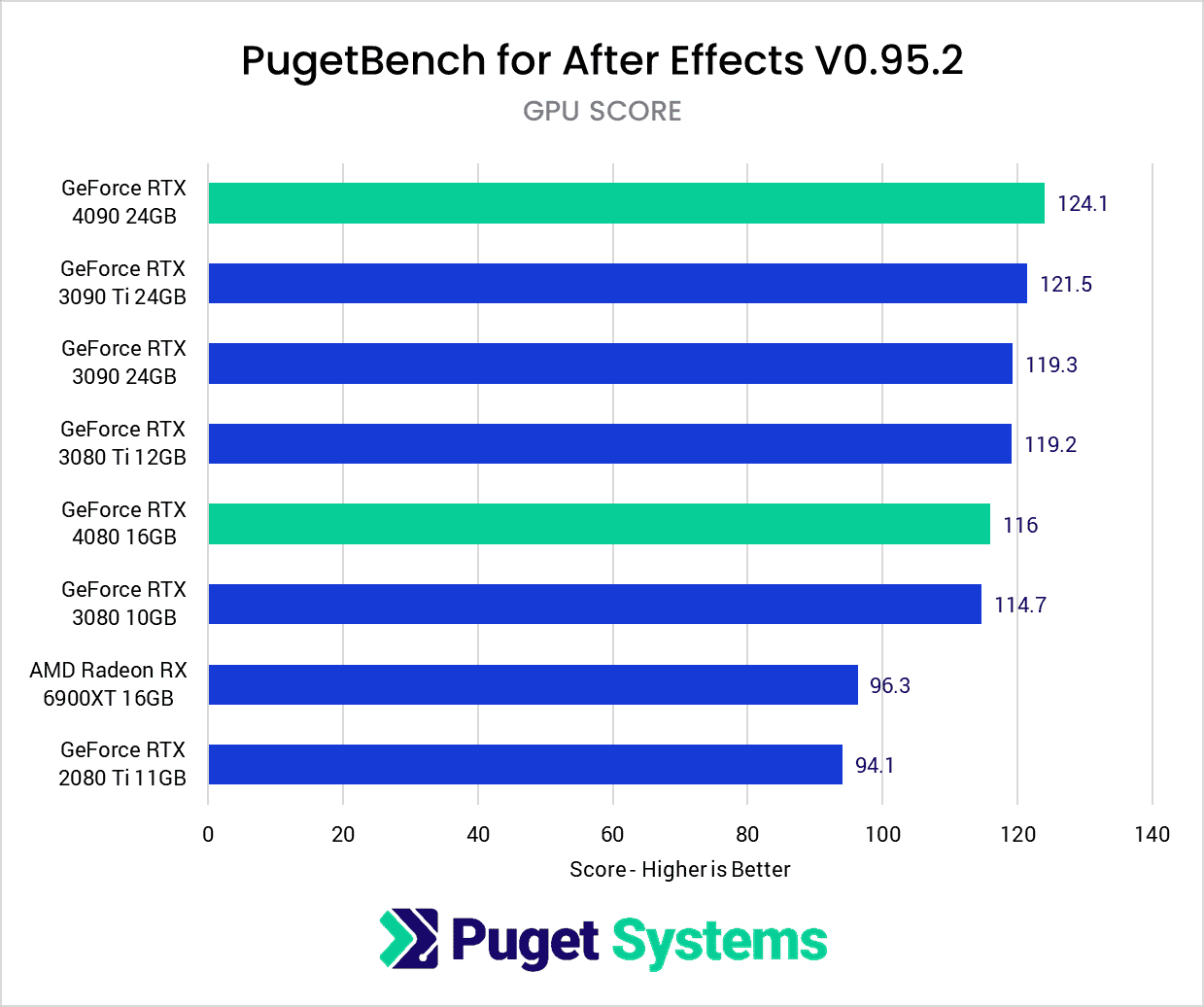

Adobe After Effects just barely makes the cut for our GPU testing in general, since the vast majority of projects in After Effects are going to be bottlenecked by the CPU. Still, the GPU does come into play at times, so for now, we are still including it.

In terms of overall performance, you are not going to notice much of a difference between the RTX 30 series and the RTX 40 series. The RTX 4080 and 4090 are both within a few percent of the RTX 3080 Ti and higher cards, with the RTX 4080 coming out at the top of the chart and the RTX 3090 beating the RTX 4090 by a fraction. This doesn’t mean that the RTX 3090 is necessarily faster than the RTX 4090, however, since the margin of error for this kind of real-world testing is about 5%. Rather, it means that any mid/high-end RTX 30 or RTX 40 series GPU should perform pretty much on par for most users.
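A rough way to apply the ~5% margin of error is to compute the relative difference between two scores and compare it to that threshold. This Python sketch uses hypothetical scores, and the 5% figure is the rule of thumb from the text rather than a formal statistical test. Note that the difference is measured relative to the second score, so swapping the arguments changes the result slightly.

```python
MARGIN_OF_ERROR = 0.05  # ~5% rule of thumb for real-world benchmark runs


def relative_difference(a: float, b: float) -> float:
    """How much higher score `a` is than score `b` (0.03 means 3% higher)."""
    return a / b - 1.0


def within_margin(a: float, b: float, margin: float = MARGIN_OF_ERROR) -> bool:
    """True if the two scores differ by no more than `margin`."""
    return abs(relative_difference(a, b)) <= margin


# Hypothetical overall scores for two cards
print(within_margin(1012, 1000))  # True: 1.2% apart, effectively a tie
print(within_margin(1100, 1000))  # False: 10% apart, a real difference
```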

Just like with Premiere Pro, however, we can drill down to the “GPU Score” portion of our benchmark. This portion tests projects specifically designed to put as much load as possible on the GPU while minimizing the load on the rest of the system. It is a borderline synthetic set of tests, since no one really uses After Effects in this manner, but it does provide a “best case” picture of what a GPU upgrade can get you in the base version of After Effects, without factoring in plugins, GPU-based rendering engines, etc.

Even for the GPU score (chart #2), we are looking at margin-of-error-level differences. The RTX 4080 is within the margin of error of the RTX 3080 Ti, as is the RTX 4090 compared to the RTX 3090 Ti and RTX 3090. With the powerful AMD Threadripper PRO 5975WX processor we are using, we can see a benefit going from the RTX 2080 Ti or AMD Radeon RX 6900 XT to the RTX 3080 and above, but beyond that, we are once again CPU bottlenecked even in this extreme situation.

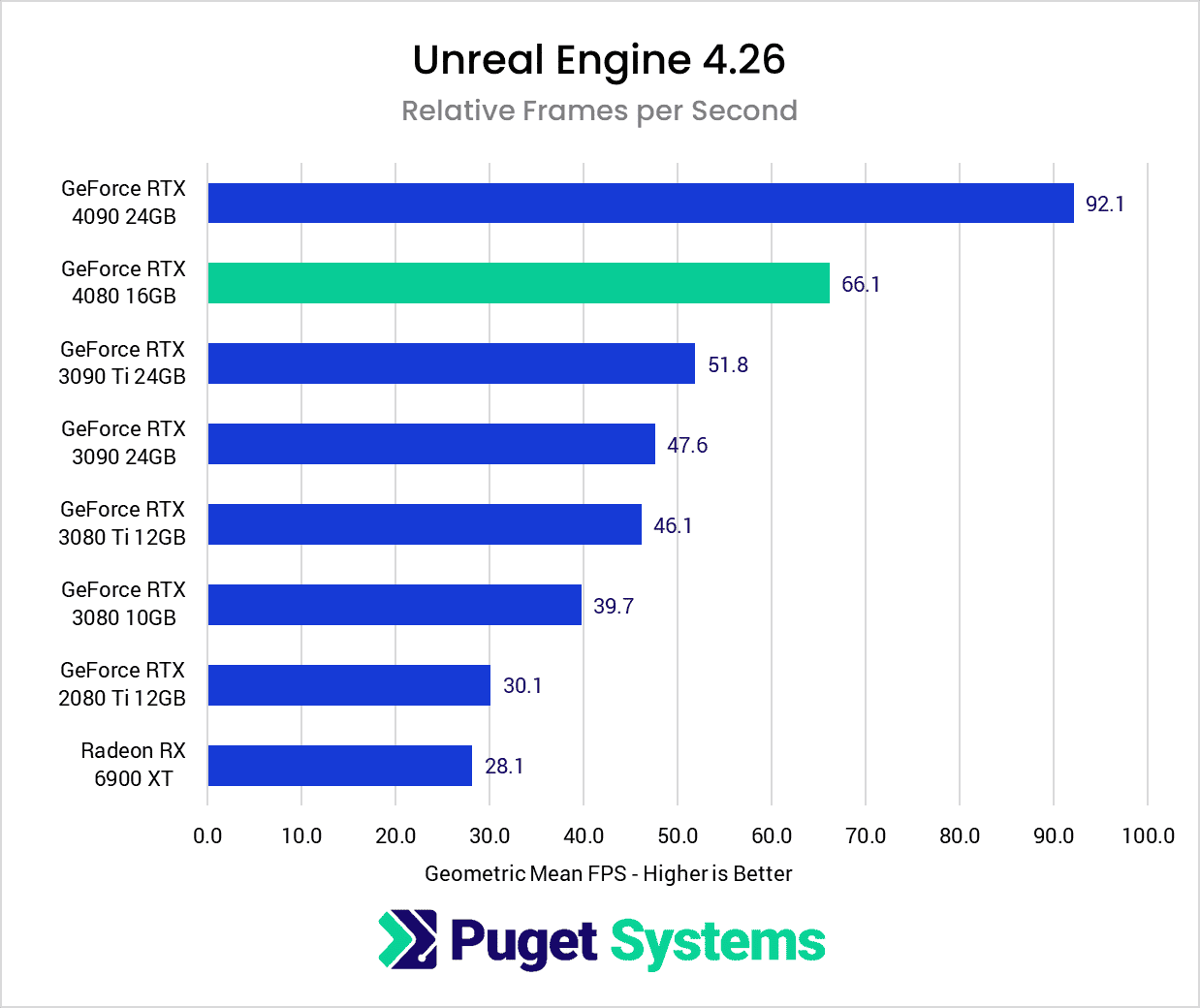

Game Dev/Virtual Production: Unreal Engine

Moving on to Unreal Engine, things get a bit more nuanced. While there are a few niche use cases that can take advantage of multiple GPUs (more on this later), this benchmark focuses on single-GPU performance, specifically for real-time graphics. The score covers three different Unreal Engine scenes, with and without ray tracing, at a variety of resolutions. For this testing, we are not using DLSS. Currently, only the RTX 4090 and 4080 support DLSS 3.0, while the RTX 30 series cards use DLSS 2.0, so it’s hard to get a good apples-to-apples comparison. Instead, we are looking at raw horsepower. Furthermore, not all professionals will be using DLSS in their daily workflow, since it alters the final pixels and each user will need to check whether the result is acceptable for their project.

Overall, the RTX 4080 sees a roughly 67% increase in framerates across all scenes compared to the RTX 3080, and a 43% increase compared to the RTX 3080 Ti. For those working at a fixed 30-60 FPS for virtual production, this means you can do a lot more in your scene at the same framerate. If you were riding that edge on an RTX 30 series GPU, you’ll have plenty of headroom with the RTX 4080, allowing you to push the realism even further. The increase in VRAM to 16GB is very welcome; however, many in the Virtual Production space will prefer the RTX 4090 for its 24GB of VRAM. Others will wait for the new RTX 6000 Ada in order to have access to Quadro Sync and 48GB of VRAM.
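To put the frame-rate gain in terms of a virtual production frame budget, the Python sketch below converts a target FPS into milliseconds per frame and shows how much of that budget frees up if the frame rate rises by the 67% we measured against the RTX 3080. It assumes frame time scales inversely with average FPS and that the scene exactly filled the budget on the older card; scenes with significant CPU-bound work will not scale this cleanly.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to render one frame at a fixed frame rate."""
    return 1000.0 / target_fps


def frame_time_after_gain(frame_time_ms: float, fps_gain: float) -> float:
    """Frame time once FPS rises by `fps_gain` (0.67 means +67% FPS)."""
    return frame_time_ms / (1.0 + fps_gain)


for fps in (30, 60):
    budget = frame_budget_ms(fps)
    # Assume the scene exactly filled the budget on the older card.
    new_time = frame_time_after_gain(budget, 0.67)
    print(f"{fps} FPS: budget {budget:.1f} ms, now {new_time:.1f} ms, "
          f"{budget - new_time:.1f} ms of headroom")
```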

One important note about this generation of video cards (both the RTX 40 Series and the “Ada Lovelace” Quadro cards) is that NVIDIA has removed NVLink. NVLink is currently required to use multiple GPUs for tasks like GPU Lightmass, inner/outer frustum rendering in nDisplay, and the path tracer in Unreal Engine 5.1. Technically, nDisplay does not require NVLink, but the performance hit without it is so great that everyone I’ve talked to, including people at Epic, says it is not worth attempting.

There are some potential fixes, such as the SMPTE 2110 support Epic recently added in 5.1, improved PCIe handling (though that may not come about until PCIe 5.0), or some other solution. There aren’t many people who would be using a GeForce card for this anyway, since you lose the Quadro Sync option, so there is still time to find a workable solution. In the worst-case scenario, the RTX 4090 offers about the same performance as two RTX 3090s, but without the hassle of getting dual GPUs working.

GPU Rendering: V-Ray

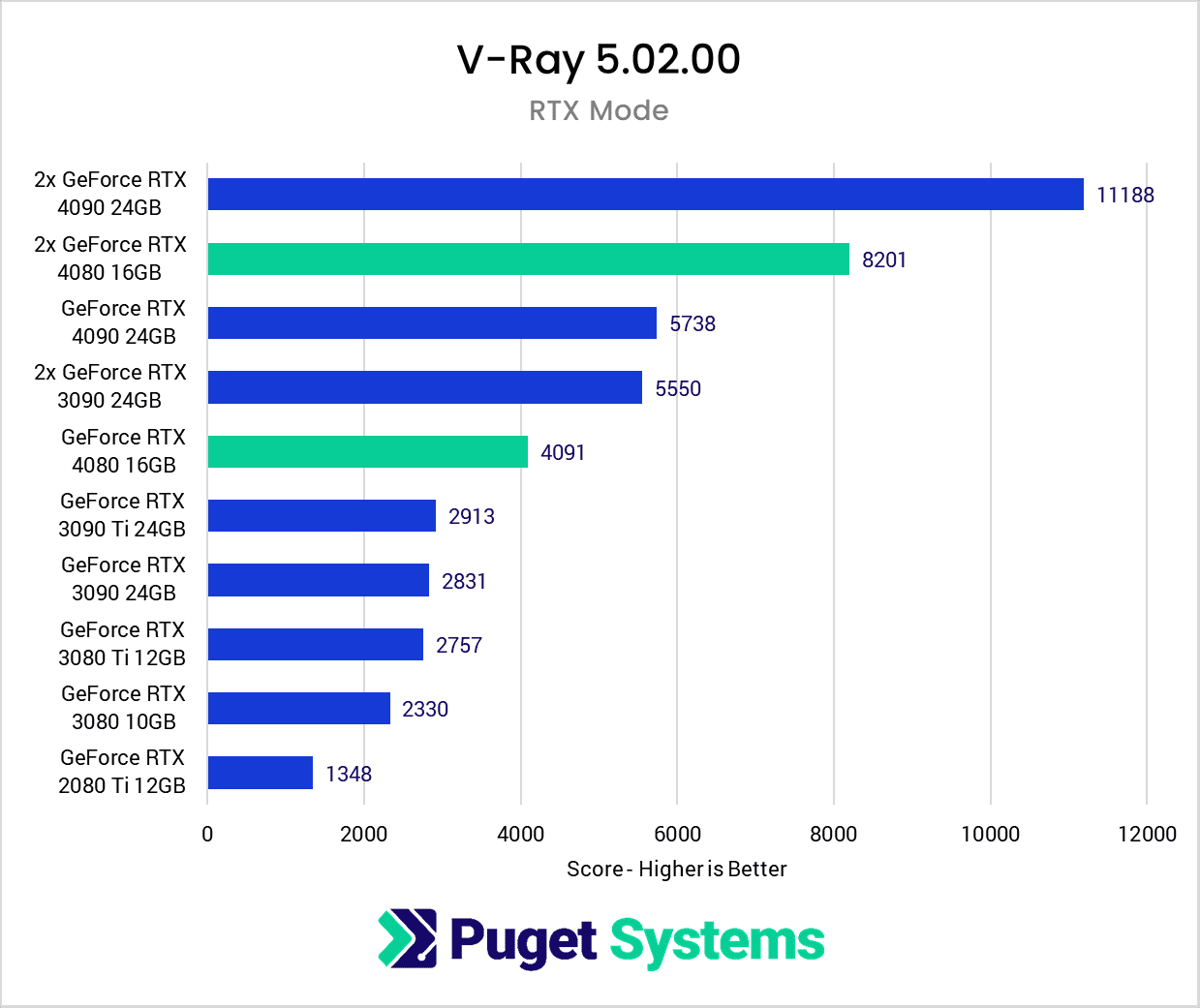

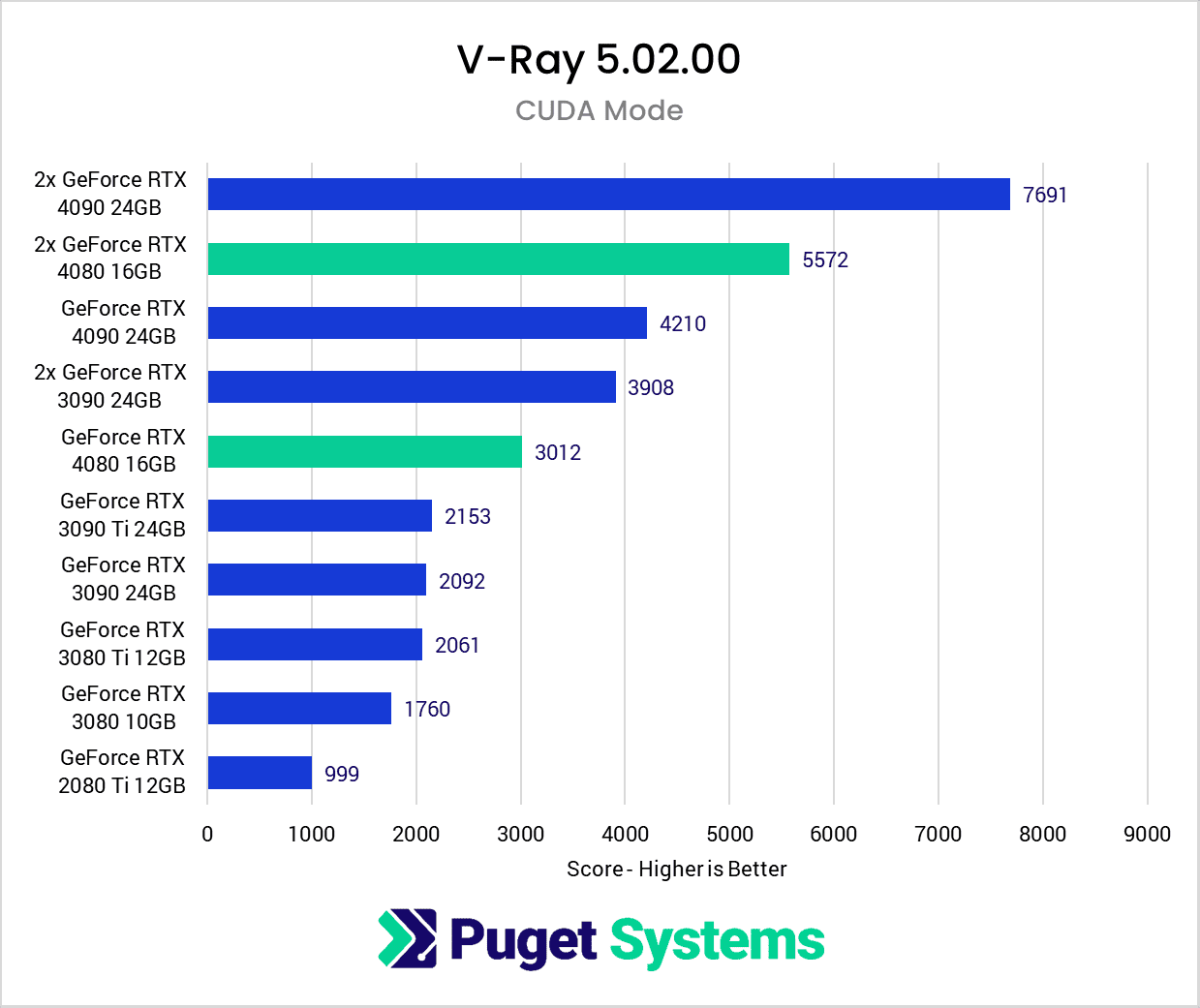

New generations of video cards are always an exciting time for GPU rendering, since that is where we typically see the most significant performance increases. Starting off our GPU rendering benchmarks is V-Ray from Chaos, which scales very well with both GPU processing power and adding multiple GPUs.

As you can see from the results, the new NVIDIA GeForce RTX 4080 is roughly 75% faster than the RTX 3080 and 40% faster than even the RTX 3090 Ti, but trails the more powerful RTX 4090 by 30%. And if you are using a somewhat older GPU, the new RTX 4080 is a little more than 3 times faster than the RTX 2080 Ti, which is only 4 years old. If you tend to upgrade every other generation, this will be a monumental leap in performance.
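Percentages like "trails by 30%" and "X% faster" are easy to mix up because they use different baselines. If we read "trails by 30%" as the RTX 4080 scoring 0.7 times what the RTX 4090 scores, then the RTX 4090 is about 43% faster than the RTX 4080, not 30%. A small Python sketch of the conversion, assuming higher-is-better scores as in V-Ray:

```python
def faster_from_slower(slower_pct: float) -> float:
    """If card A scores `slower_pct`% lower than card B (A = B * (1 - s)),
    return how much faster B is than A, in percent."""
    s = slower_pct / 100.0
    return (1.0 / (1.0 - s) - 1.0) * 100.0


def slower_from_faster(faster_pct: float) -> float:
    """Inverse: if B is `faster_pct`% faster than A, return how much lower A scores."""
    f = faster_pct / 100.0
    return (1.0 - 1.0 / (1.0 + f)) * 100.0


print(f"{faster_from_slower(30):.1f}%")  # 42.9%: RTX 4090 lead if the RTX 4080 trails by 30%
print(f"{slower_from_faster(75):.1f}%")  # 42.9%: how far a card trails one that is 75% faster
```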

We were fortunate enough to also test with multiple RTX 4080 GPUs. Currently, we can only fit two RTX 4080s in a desktop system, partly due to physical space, but more importantly because of power draw. The results show that the RTX 4080 scales well in V-Ray, with two of the cards being roughly twice as fast as one.

One big point to note is that the RTX 4080 does not offer NVLink. If you use very large scenes and need NVLink to pool VRAM, that is not going to work with the new RTX 40 series. You will have to wait for the RTX 6000 Ada to get a card with 48GB of VRAM.

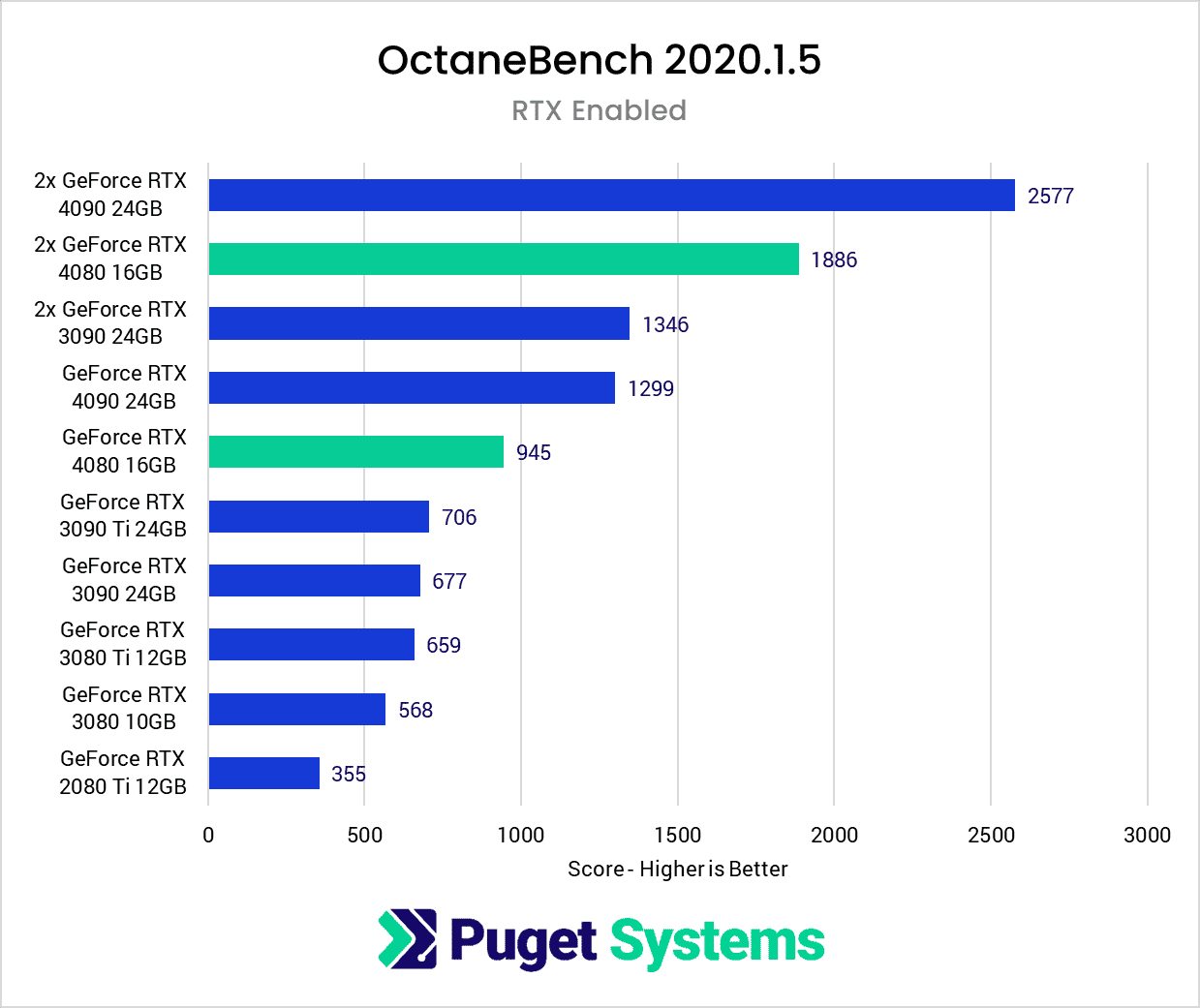

GPU Rendering: OctaneRender

Moving on to OctaneBench from Otoy, we see that the new NVIDIA GeForce RTX 4080 is 67% faster than the previous generation’s RTX 3080, but 30% slower than the recently released RTX 4090. And if you do opt for a dual RTX 4080 system, the RTX 4080 scales almost perfectly, allowing for really impressive render times.

Otoy has made some tremendous improvements recently, and these results are primarily for the standard rendering system. You should see similar performance improvements in their real-time preview, or when using Octane within any DCC, Unreal Engine, or plugins such as EmberGen. Otoy is also working on improving out-of-core performance and other ways of getting large scenes to perform better on GPUs that don’t have enough VRAM. This should help mitigate the lack of NVLink and the inability to pool VRAM.

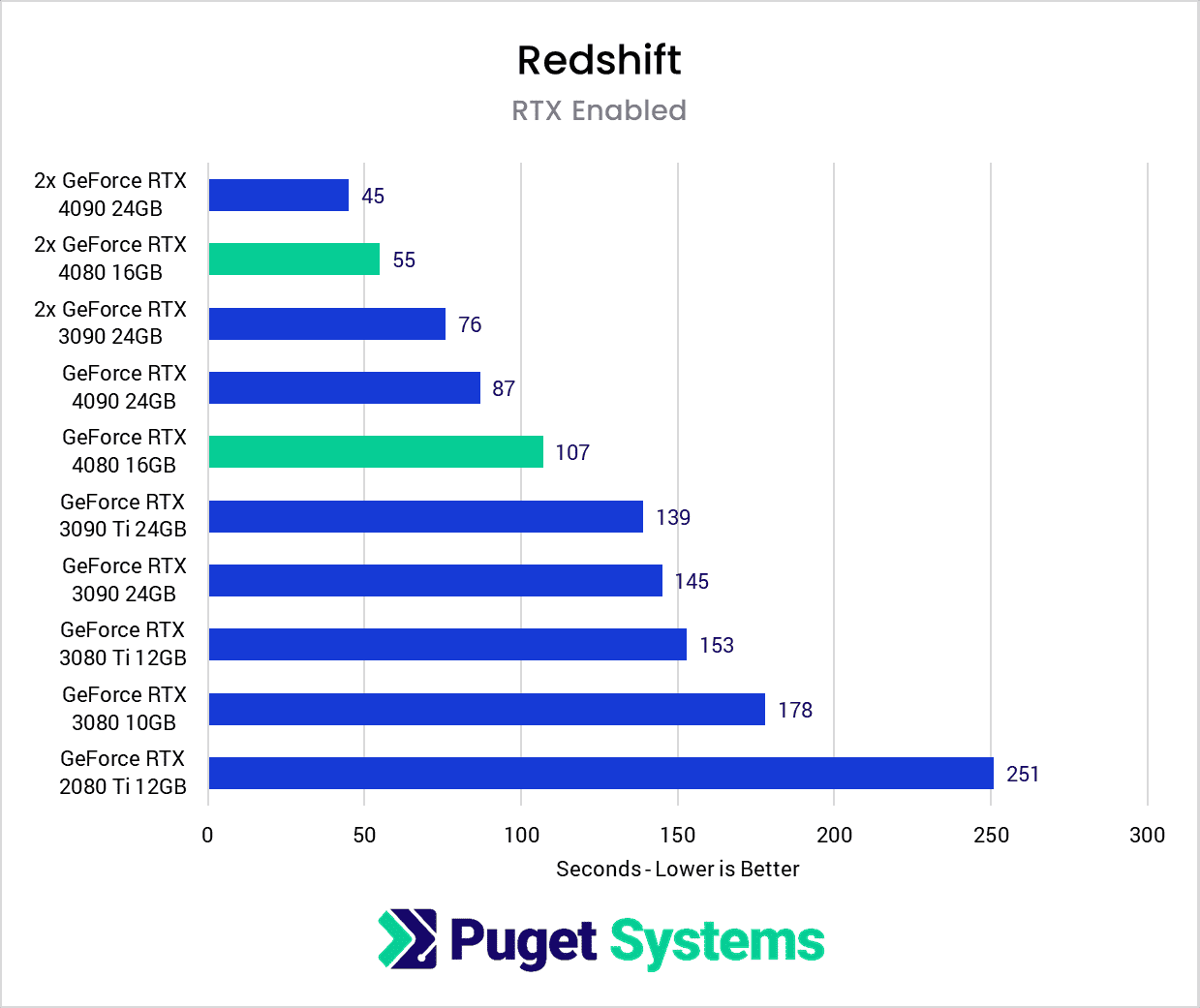

GPU Rendering: Redshift

Up next in our GPU rendering suite is Redshift from Maxon. Here we don’t see as big an improvement, with the RTX 4080 only about 60% faster than the RTX 3080. To a certain extent, this may be a limitation of Redshift’s benchmark. Both Octane and V-Ray measure how much work can be accomplished in a given amount of time, whereas Redshift renders a single frame and reports how long it took. This was a fine approach for a long time, but modern GPUs are getting so fast that the overhead of initiating the render is starting to impact the final time. We’ve gone from 251 seconds for the RTX 2080 Ti, to 145 seconds for the RTX 3090, to only 107 seconds for the RTX 4080. Amazingly, dual RTX 4080s completed the render in 55 seconds. Again, there is no NVLink, so you will be limited to the VRAM of a single card.
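The fixed-overhead argument can be illustrated with a toy model in which total time is a setup cost that does not depend on the GPU, plus GPU work that shrinks as the GPU gets faster. The Python sketch below first computes speedups from the Redshift render times reported above, then uses a hypothetical 10-second overhead (not a measured Redshift figure) to show how such a cost caps the apparent speedup. The model is our simplification, not a description of Redshift's internals.

```python
# Redshift benchmark render times (seconds) reported above.
TIMES_S = {"RTX 2080 Ti": 251, "RTX 3090": 145, "RTX 4080": 107, "2x RTX 4080": 55}


def speedup(old_s: float, new_s: float) -> float:
    """How many times faster a result is, from two render times."""
    return old_s / new_s


def modeled_time(gpu_work_s: float, overhead_s: float, gpu_speed: float) -> float:
    """Toy model: fixed, GPU-independent overhead plus GPU work that
    shrinks in proportion to GPU speed."""
    return overhead_s + gpu_work_s / gpu_speed


print(f"RTX 4080 vs RTX 2080 Ti: {speedup(TIMES_S['RTX 2080 Ti'], TIMES_S['RTX 4080']):.2f}x")  # 2.35x
print(f"RTX 4080 vs RTX 3090: {speedup(TIMES_S['RTX 3090'], TIMES_S['RTX 4080']):.2f}x")
print(f"2x RTX 4080 vs 1x: {speedup(TIMES_S['RTX 4080'], TIMES_S['2x RTX 4080']):.2f}x")

# Hypothetical: 10 s of overhead, 100 s of GPU work on a baseline card.
baseline = modeled_time(100, 10, 1.0)  # 110 s
doubled = modeled_time(100, 10, 2.0)   # 60 s
print(f"A GPU twice as fast appears only {baseline / doubled:.2f}x faster")  # 1.83x
```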

We must also mention that while these are the results from Redshift, Maxon has begun adding GPU-based physics simulations to Cinema 4D as well. These features arrived too recently for us to test before the RTX 4080’s launch, but we hope to have some specific data soon. We fully expect to see similar results, with the RTX 4080 performing anywhere from 60-90% faster than the RTX 3080.

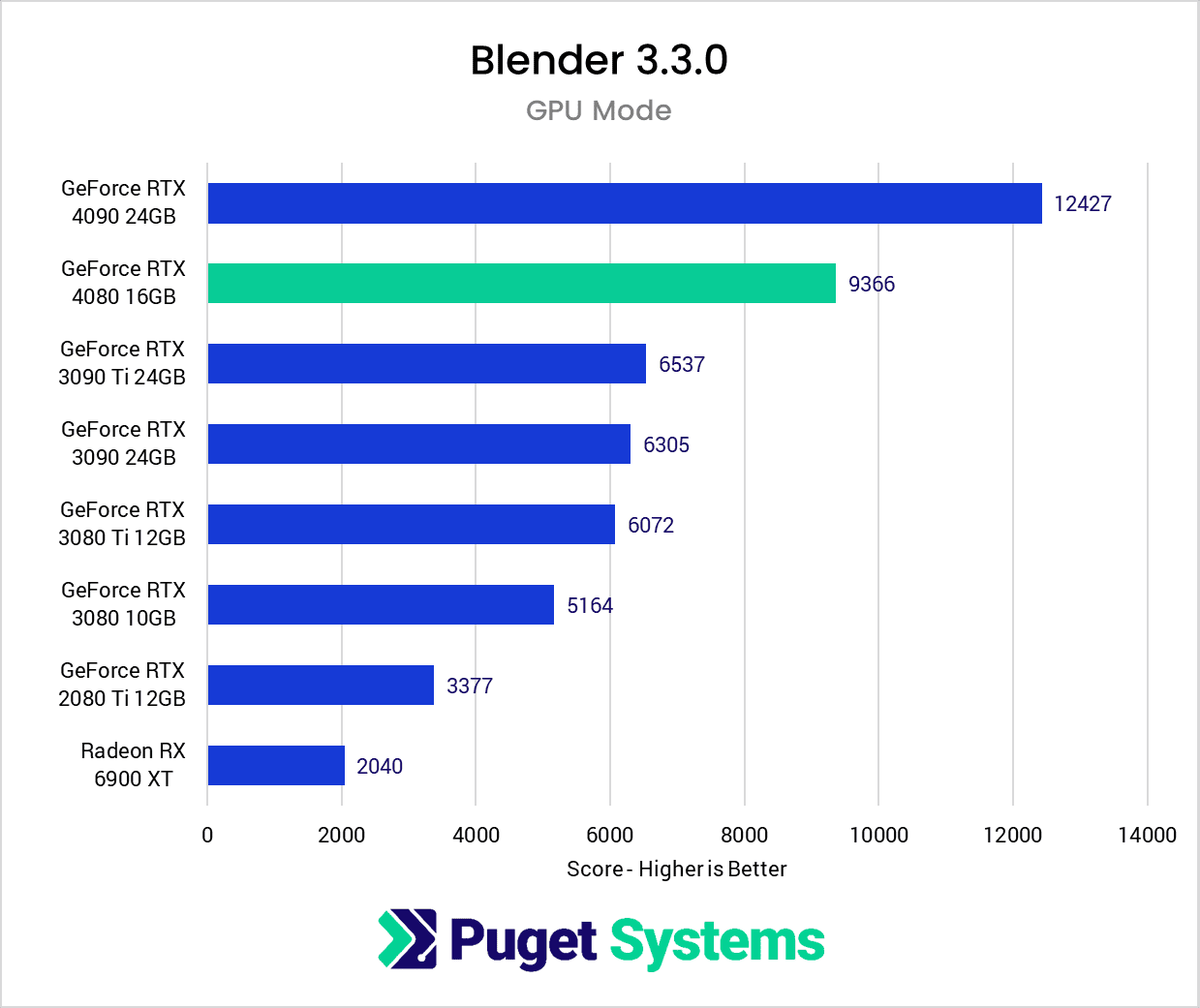

GPU Rendering: Blender

Rounding out our GPU rendering tests, we turn to Blender. One thing to note is that the official Blender benchmark does not support multiple GPUs, even though Blender itself does. We opted to stick to Blender’s official benchmark anyway so that anyone can directly compare their system to the cards we tested. This is also the only renderer we tested that supports AMD video cards, so we included the AMD Radeon RX 6900 XT for reference.

As with many of the other rendering engines, the new RTX 4080 scores nearly 80% higher than the RTX 3080 and 43% higher than the RTX 3090 Ti, yet 25% lower than the RTX 4090. Taken as a whole, the new RTX 4080 is very good when it comes to GPU rendering. In most cases, it is nearly 70% faster than the previous RTX 3080, and more than 30% faster than the best RTX 30 series video card.


How Well Does the NVIDIA GeForce RTX 4080 16GB Perform for Content Creation?

NVIDIA tends to give us great performance gains whenever they launch a new series of GPUs, and the new RTX 4080 is no different. Not only does it have more VRAM than the previous generation (16GB versus 12GB), but for applications that can benefit from having a more powerful GPU, the RTX 4080 can give you a massive boost to performance.

For video editing, the big winner is DaVinci Resolve Studio, as it makes more use of the GPU (and multi GPU configurations) than any other NLE currently on the market. In the instances where the GPU is the primary bottleneck (OpenFX and noise reduction), the RTX 4080 is nearly 20% faster than the previous generation RTX 3080 Ti. This isn’t as much of a gen-over-gen increase as the RTX 4090 (which is around 40% faster than the previous generation RTX 3090), but it is still a good increase. And compared to an older card like the RTX 2080 Ti, you are looking at about a 60% increase in performance.

Resolve is also one of the few video editing applications that can take advantage of multiple GPUs, and we found that going from one RTX 4080 to two gave us a 61% increase in performance. This is right in line with what we see from the RTX 4090, or previous generation cards like the RTX 3090, so it is good to see that there don’t appear to be any issues with dual RTX 4080 configurations.

Unreal Engine, being a real-time engine, benefits even more from powerful GPUs. Testing across a variety of scenes, from basic game environments to high-end Virtual Production sets, with and without ray tracing, the RTX 4080 averaged around 67% higher FPS than the RTX 3080. For those in ArchViz, that translates to faster render times or smoother VR experiences. Users in Virtual Production who are capped at 30 or so FPS will be able to do more on-screen, making their sets even more realistic.

GPU rendering in Octane, Redshift, V-Ray, and Blender sees roughly a 70% improvement in performance over the RTX 3080, with the RTX 4080 more than 30% faster than the fastest GPU from the previous generation, the RTX 3090 Ti. All of these renderers also benefit from using multiple GPUs, and it is typical to see an 80-90% speedup by adding a second GPU. We would see even more performance by using more than two GPUs, but you will almost always be limited to one or two cards due to the massive size and power requirements of these new cards.
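Multi-GPU results are often easier to compare as scaling efficiency: the speedup from adding cards divided by the number of cards. This Python sketch assumes higher-is-better scores; for time-based results such as Redshift's, pass the reciprocal of the render time. By this measure, an 80-90% speedup from a second GPU corresponds to 90-95% efficiency, and the Redshift times reported earlier (107 s for one RTX 4080, 55 s for two) work out to roughly 97%.

```python
def speedup_pct(single: float, multi: float) -> float:
    """Percent speedup of a multi-GPU result over one GPU (higher-is-better scores)."""
    return (multi / single - 1.0) * 100.0


def scaling_efficiency(single: float, multi: float, n_gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 = perfect)."""
    return (multi / single) / n_gpus


# An 80-90% speedup from a second card is 90-95% efficiency.
print(scaling_efficiency(1.0, 1.8, 2), scaling_efficiency(1.0, 1.9, 2))

# Time-based results: convert render times to throughput (1 / seconds) first.
print(f"{scaling_efficiency(1 / 107, 1 / 55, 2):.1%}")  # 97.3% (Redshift, 1x vs 2x RTX 4080)
```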

One of the concerns for this generation is the lack of NVLink. GPU renderers have used NVLink to pool the VRAM of two video cards in order to hold larger, more complex scenes. Exceeding the VRAM of a GPU forces a fallback to system RAM, dramatically decreasing performance, or can even crash the system. Without NVLink, VRAM pooling isn’t an option at all. The RTX 4080 at least got a VRAM upgrade to 16GB, but the lack of NVLink on the RTX 40 series means you will need to jump up to the Quadro line of cards if you need more than 24GB of VRAM.
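The practical effect of losing VRAM pooling can be expressed as a simple capacity check. In this Python sketch, a pooled configuration is modeled as the plain sum of every card's VRAM, which is an optimistic simplification, while a non-pooled configuration can only use one card's VRAM because each GPU holds its own copy of the scene. The 20GB scene size is hypothetical.

```python
def usable_vram_gb(per_card_gb: float, n_cards: int, pooled: bool) -> float:
    """VRAM one scene can occupy. Without pooling, every card holds its own
    full copy of the scene, so extra cards add speed but not capacity.
    Pooled capacity is modeled as a plain sum, which is optimistic."""
    return per_card_gb * n_cards if pooled else per_card_gb


def fits_in_vram(scene_gb: float, per_card_gb: float, n_cards: int = 1,
                 pooled: bool = False) -> bool:
    """True if the scene fits without spilling into system RAM."""
    return scene_gb <= usable_vram_gb(per_card_gb, n_cards, pooled)


scene_gb = 20  # hypothetical scene size
print(fits_in_vram(scene_gb, 16, n_cards=2))               # False: two RTX 4080s, no NVLink
print(fits_in_vram(scene_gb, 24))                          # True: one RTX 4090
print(fits_in_vram(scene_gb, 16, n_cards=2, pooled=True))  # True only if pooling were available
```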

This missing NVLink is going to be an even bigger concern for many in Virtual Production, especially those filming on large LED volumes. Currently, they use multiple GPUs so one video card can be dedicated to the inner frustum, and then transfer that frame to the other GPU to display on the wall. Not everyone in this space opts for this workflow, as it can be tricky to get set up, but when it works, it allows for much better performance. There are other options on the horizon, but nothing concrete at the time of writing. Not many users of this workflow would use a GeForce card anyway due to its lack of Quadro Sync support, but it is worth being aware of ahead of the RTX 6000 refresh.

Beyond NVLink, another concern we have about the RTX 4090 is simply how much power it demands (and how much heat output that will translate to). The physical design of the card makes using more than two RTX 4090 cards impossible without liquid cooling, and even then, you will find yourself power limited very quickly. The Founders Edition cards we received straight from NVIDIA each required four(!) 8-pin PCIe plugs, which, combined with the one plug required for our WRX80 motherboard, meant that we were using every single available PCIe power cable from our 1600W power supply to test dual RTX 4090 cards.
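As a rough illustration of why power limits multi-GPU builds, this Python sketch subtracts GPU board power (from the spec table) and the Threadripper PRO 5975WX's 280W TDP from a 1600W power supply. It deliberately ignores transient power spikes, PSU efficiency, and the draw of drives, fans, and memory (lumped into an optional `other_w` estimate), so treat the output as an optimistic upper bound rather than a sizing rule. The `psu_headroom_w` helper is hypothetical.

```python
GPU_BOARD_POWER_W = {"RTX 4080": 320, "RTX 4090": 450}  # from the spec table above
CPU_TDP_W = 280  # AMD Threadripper PRO 5975WX rated TDP


def psu_headroom_w(gpu: str, n_gpus: int, psu_w: float = 1600,
                   other_w: float = 0.0) -> float:
    """Rough steady-state headroom: PSU rating minus GPU board power, CPU TDP,
    and an estimate for everything else. Ignores spikes and PSU efficiency."""
    load = GPU_BOARD_POWER_W[gpu] * n_gpus + CPU_TDP_W + other_w
    return psu_w - load


for gpu in GPU_BOARD_POWER_W:
    print(f"2x {gpu}: {psu_headroom_w(gpu, 2):.0f} W left for drives, fans, RAM, and spikes")
```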

NVLink concerns aside, however, there is no question that the GeForce RTX 4080 16GB is an extremely capable GPU. Anytime we see this large a performance increase over the previous generation, even in just a few workflows, it is hard not to be impressed. In the end, whether the RTX 4080 (or the RTX 4090 for that matter) is going to be worth the investment for you is going to come down to your workflow, and what kind of ROI (return on investment) you might expect given the time savings it would be able to give you. But, if you are often limited by the performance of the GPU in your system, the RTX 40 series in general is almost certain to be a solid investment.

If you are looking for a workstation with the NVIDIA GeForce RTX 4080 16GB, you can visit our solutions page to view our recommended workstations for various software packages, our custom configuration page, or contact one of our technology consultants for help configuring a workstation that meets the specific needs of your unique workflow.