Update 7/25/2024 (Intel Arc A770 GPU Performance):

Our initial results for the Intel Arc A770 GPU showed lower-than-expected performance due to issues with the driver version we were using (31.0.101.5593). We tested with other drivers, including versions 31.0.101.5594 and 31.0.101.5592, but neither showed any difference, so we opted to publish with those results.

Intel has since released driver version 32.0.101.5762, which addresses these performance problems in DaVinci Resolve. We have updated our charts and text to reflect this improved driver. Please see the revised results below.
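For readers who want to check whether an installed Arc driver is at or past the fixed release, the version strings above can be compared field by field. This is only a minimal sketch: the helper names are hypothetical, and it assumes that builds newer than 32.0.101.5762 keep the fix, which our testing only confirms for 32.0.101.5762 itself.

```python
# Version strings taken from the update note above.
FIXED_DRIVER = "32.0.101.5762"
AFFECTED_DRIVERS = ["31.0.101.5592", "31.0.101.5593", "31.0.101.5594"]


def parse_version(version: str) -> tuple[int, ...]:
    """Split a dotted driver version into integers so each field compares numerically."""
    return tuple(int(part) for part in version.split("."))


def has_resolve_fix(installed: str, fixed: str = FIXED_DRIVER) -> bool:
    """Assumption: any build at or above the fixed release also contains the fix."""
    return parse_version(installed) >= parse_version(fixed)


for driver in AFFECTED_DRIVERS + [FIXED_DRIVER]:
    status = "ok" if has_resolve_fix(driver) else "affected"
    print(f"{driver}: {status}")
```

Comparing integer tuples rather than raw strings avoids mis-ordering fields of different lengths (for example, "9" versus "10").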

Introduction

Recently, we launched an updated version of our “PugetBench for DaVinci Resolve” benchmark featuring a completely overhauled test suite. Not only did we greatly expand the number of video codecs we test (RED, BRAW, ARRIRAW, Cinema RAW Light ST, and Sony X-OCN), but we also added more GPU-based OpenFX tests. In addition, based on the work Blackmagic has been doing to integrate AI into DaVinci Resolve, we added a whole suite of tests looking at the various AI features in Resolve, including Super Scale, Face Refinement, Magic Mask, Video Stabilization, Smart Reframe, and more!

Because the benchmark has changed so much, we decided it would be a good time to do an in-depth analysis of various modern (and older-generation) consumer GPUs, including NVIDIA GeForce RTX, AMD Radeon, and Intel Arc. This is also a good time to revisit multi-GPU scaling since we have never tested that aspect of Resolve for the AI features in particular.

We will note that Blackmagic does have a public beta of their upcoming DaVinci Resolve v19 available, but we are going to stick with the full release 18.6.6 version. The beta has a number of performance improvements, but we have seen fairly large performance shifts across different beta builds in the past and prefer to wait until a new version has been fully released before using it in our testing.

Test Setup

Test Platform

| Component | Details |
| --- | --- |
| CPU | AMD Ryzen Threadripper PRO 7975WX |
| CPU Cooler | Asetek 836S-M1A 360mm |
| Motherboard | ASUS Pro WS WRX90E-SAGE SE (BIOS version: 0404) |
| RAM | 8x DDR5-5600 16 GB (128 GB total), running at 5200 Mbps |
| PSU | Super Flower LEADEX Platinum 1600W |
| Storage | Samsung 980 Pro 2TB |
| OS | Windows 11 Pro 64-bit (22631) |

Benchmark Software

| Software | Version |
| --- | --- |
| DaVinci Resolve Studio | 18.6.6.7 |
| PugetBench for DaVinci Resolve | 1.0.0 |

Tested GPUs

| GPU Model |
| --- |
| 1-3x NVIDIA GeForce RTX™ 4090 FE 24GB |
| NVIDIA GeForce RTX™ 4080 SUPER FE 16GB |
| NVIDIA GeForce RTX™ 4080 FE 16GB |
| ASUS ROG Strix GeForce RTX™ 4070 Ti SUPER 16GB |
| ASUS ROG Strix GeForce RTX™ 4070 Ti 12GB |
| NVIDIA GeForce RTX™ 4070 SUPER FE 12GB |
| NVIDIA GeForce RTX™ 4070 FE 12GB |
| ASUS TUF OC GeForce RTX™ 4060 Ti 8GB |
| NVIDIA GeForce RTX™ 4060 8GB |
| NVIDIA GeForce RTX™ 3080 Ti 12GB |
| NVIDIA GeForce RTX™ 2080 Ti 11GB |
| EVGA GeForce GTX™ 1080 Ti 11GB |
| AMD Radeon RX 7900 XTX 24GB |
| AMD Radeon RX 6900 XT 16GB |
| Intel Arc A770 16GB |

To test how Resolve performs with different GPUs, we built up a standard testbed intended to minimize the potential for bottlenecks in the system outside of the GPU. We are using the 32-core AMD Ryzen Threadripper PRO 7975WX and 128 GB of DDR5 memory, which should give us the best overall performance in our DaVinci Resolve benchmark suite.

For each GPU, we are using the latest available driver version as of July 1st, 2024 — sticking with the “Studio” drivers for NVIDIA. We ran the full “Extended” benchmark preset, and will largely focus on the Overall, RAW, GPU Effects, and AI scores, as those are the ones most affected by the GPU.

We tested most cards in a single GPU configuration but included up to three NVIDIA GeForce RTX 4090 cards to see how the various aspects of Resolve scale with more GPUs. We are limiting this to three GPUs rather than four, as that is what a relatively standard workstation is able to handle given the high power draw and heat output of modern GPUs. For each GPU, we went through the full “neural engine optimization” process, which we have previously tested and found to provide a massive performance boost for the AI tests.

Raw Benchmark Results

While we will be going through our testing in detail in the next sections, we often like to provide the raw results for those that want to dig into the details. If there is a specific codec or task you tend to perform in your workflow, examining the raw results will be much more applicable than our more general analysis.

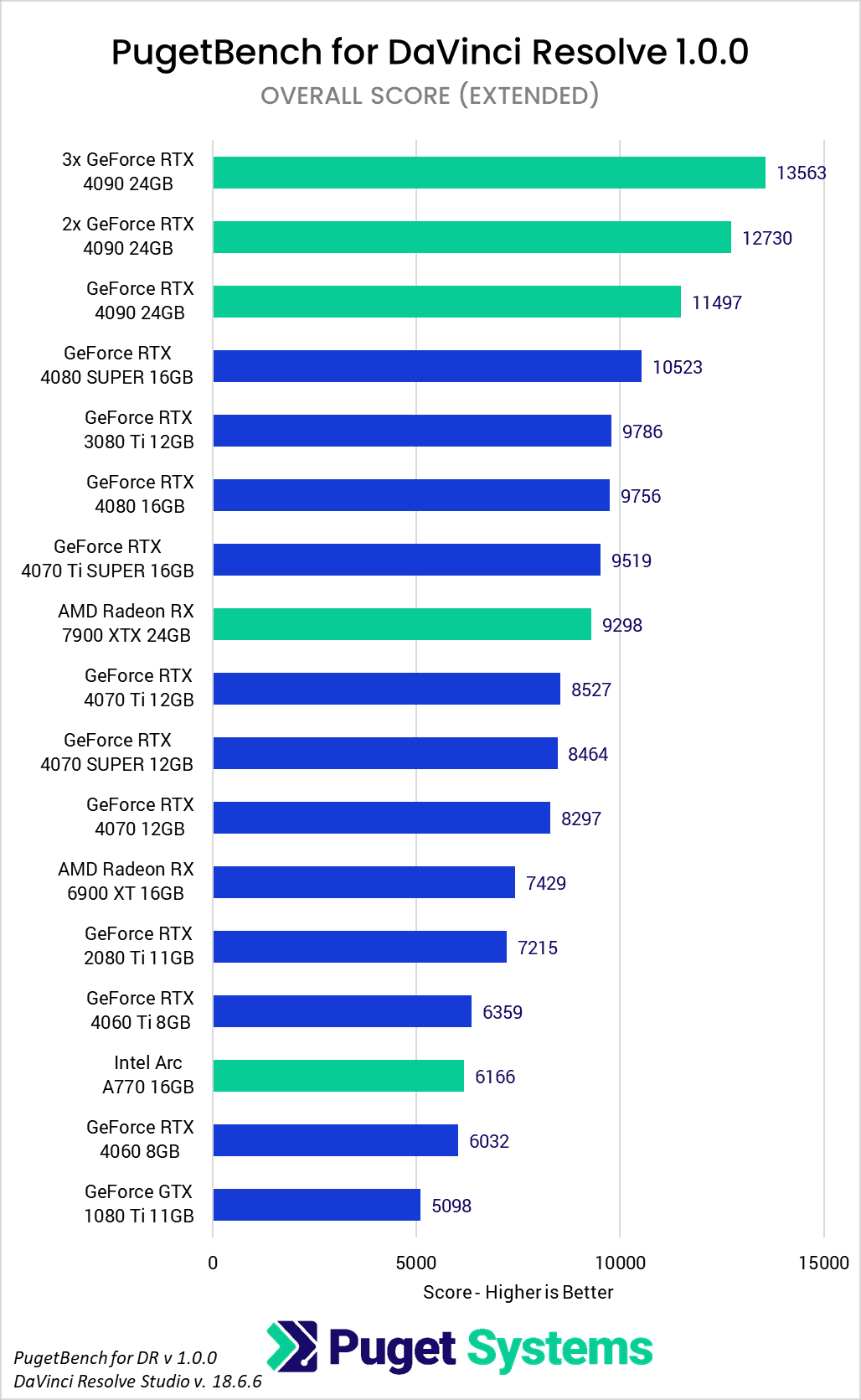

Overall GPU Performance

Before we get into the results, we want to note that since we are testing 15 different GPUs, our charts are quite a bit taller than normal. For anyone on mobile or a smaller screen, we apologize! Also, since we are not focusing on any GPU in particular, we colored the top GPU models from NVIDIA (including the dual and triple configuration), AMD, and Intel in green to help make it obvious how each brand stands in terms of maximum performance.

Starting off, we are going to take a look at the Overall Score from the Extended benchmark preset. This score factors in the results for all the tests, including both those that are GPU- and CPU-focused. Because of that, there isn’t as large of a performance difference between each GPU model as what we will see in some of the following sections, but it is an accurate representation of what kind of overall performance increase you can expect from different GPUs.

There is a LOT of data in here, but there are a handful of primary questions we can answer directly. The first is AMD vs. Intel vs. NVIDIA GPU performance, and it is clear that NVIDIA GeForce gives the highest overall performance in DaVinci Resolve, with the GeForce RTX 4090 topping the chart. In fairness to AMD, the Radeon 7900 XTX is quite a bit less expensive than the RTX 4090, but even at the same price point (Radeon 7900 XTX vs GeForce RTX 4080 SUPER), NVIDIA comes out ahead by about 13%. AMD does have the advantage in terms of VRAM size per dollar, but in terms of straight performance, NVIDIA is the way to go.

The Intel Arc A770 results we initially got were very low, which turned out to be caused by a series of bad Intel GPU drivers (as noted at the top of this article). With Intel’s 5762 driver, however, performance is in line with what we have seen in the past. The A770 may not top any performance charts, but it isn’t intended to, as it is a budget-friendly option. In terms of performance, it is right in line with NVIDIA’s similarly priced cards, slightly beating the less expensive RTX 4060 and landing slightly behind the more expensive RTX 4060 Ti.

The last thing we want to call out specifically is how multi-GPU scaling works. Going from one RTX 4090 to two increased performance by 11%, while adding a third GPU added an additional 7%. That doesn’t sound like much, but keep in mind that this score includes tasks that don’t use the GPU at all, and there are plenty of GPU-based tasks that don’t scale with multiple cards. We will dive more into this in the following sections.

Fusion GPU Performance

Fusion is not typically something we look at when examining GPU performance, but since we are doing an in-depth analysis, we wanted to go ahead and include it this time around. In general, Fusion doesn’t scale that well with more powerful GPUs, since most of it is actually CPU limited. If you want the best performance possible, NVIDIA does have the overall advantage, although it is fairly small.

What we really want to point out here is how poorly Fusion performs with multiple GPUs. As you can see in the primary chart above, a single NVIDIA GeForce RTX 4090 is at the very top, but the dual and triple configurations of that same GPU are way down at the bottom. The performance is so low that even an RTX 4060 performs on par with, or faster than, the multi-GPU configurations.

What is interesting is that not everything in Fusion has this issue with multiple GPUs. If you look at chart #2, we split out the results for each test individually and charted them relative to the performance of a single GPU configuration. In four of our six Fusion tests, there is a sizable performance loss with multiple cards, while one (3D Lower Thirds, which tests a number of the built-in templates) shows no difference with multiple GPUs. On the other hand, the “Phone Composite UHD” test, which does planar tracking and keying, shows very respectable performance gains with a triple GPU configuration (although the difference with dual GPUs is oddly modest).
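The per-test comparison described above amounts to dividing each configuration's score by the single-GPU score for the same test. A minimal sketch of that normalization follows; the function name, test labels, and scores are hypothetical placeholders rather than measured results, and it assumes higher scores are better.

```python
def relative_to_baseline(results: dict, baseline: str) -> dict:
    """Express every score as a ratio of the baseline configuration's score for the same test."""
    base = results[baseline]
    return {
        config: {test: score / base[test] for test, score in scores.items()}
        for config, scores in results.items()
    }


# Placeholder numbers only, chosen to show a loss, no change, and a gain.
placeholder_scores = {
    "1x GPU": {"Test A": 80.0, "Test B": 50.0},
    "2x GPU": {"Test A": 40.0, "Test B": 50.0},
    "3x GPU": {"Test A": 40.0, "Test B": 75.0},
}

for config, ratios in relative_to_baseline(placeholder_scores, "1x GPU").items():
    print(config, ratios)
```

A ratio below 1.0 marks a test that got slower with more cards, 1.0 marks no change, and anything above 1.0 marks a gain.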

In most cases, multi-GPU configurations are likely to result in a net performance loss for Fusion, but there are specific workflows that could see a benefit. This is good to know if your workflow includes tasks that do scale with more GPUs, but you also work in Fusion a decent amount.

RAW Codec Processing GPU Performance

Next up, we have RAW codec processing. This measures how well your system performs when working with RAW codecs like RED, BRAW, ARRIRAW, X-OCN, and Cinema RAW Light. In general, these codecs perform better with a more powerful GPU, although they can start to become CPU-limited at times.

Between NVIDIA, AMD, and Intel, NVIDIA still holds the top spot with the RTX 4090. However, AMD does very well for these codecs with the Radeon 7900 XTX performing a few percent above the RTX 4080 SUPER. And Intel is even better from a price-to-performance standpoint, with the A770 beating the $50 more expensive RTX 4060 Ti.

As far as GPU scaling goes, having more cards does help in general, but only by a limited amount. What is interesting is that this modest improvement is partly due to how Resolve behaves with different types of RAW codecs. If you switch to chart #2, we pulled out the RAW Processing results and plotted them according to the performance with a single RTX 4090 to give you an idea of how GPU scaling behaves with the different types of codecs.

Specifically, RED and Cinema RAW Light don’t see any benefit with multiple GPUs. BRAW does show a very large ~60% performance improvement with two GPUs, but no further benefit with a third card. ARRIRAW and X-OCN, on the other hand, show a modest 10-20% improvement with two GPUs, which increases to about 35-45% faster than a single GPU with a third card.
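To make the per-codec behavior easier to act on, the approximate figures above can be put into a small lookup that reports how many GPUs are worth installing for a given codec. This is a rough sketch, not a measurement tool: the speedups are the rounded ranges quoted in the text (ARRIRAW and X-OCN share one range because they are reported together), and the 5% threshold and function name are assumptions made for illustration.

```python
# Approximate speedup vs. one RTX 4090 as (low, high) bounds, transcribed from the text.
RAW_SCALING = {
    "RED":              {2: (1.00, 1.00), 3: (1.00, 1.00)},
    "Cinema RAW Light": {2: (1.00, 1.00), 3: (1.00, 1.00)},
    "BRAW":             {2: (1.60, 1.60), 3: (1.60, 1.60)},
    "ARRIRAW":          {2: (1.10, 1.20), 3: (1.35, 1.45)},
    "X-OCN":            {2: (1.10, 1.20), 3: (1.35, 1.45)},
}


def useful_gpu_count(codec: str, min_gain: float = 0.05) -> int:
    """Largest GPU count where each added card still gains at least min_gain.

    Conservative: compares the low bound for a count against the high bound
    of the previous count. min_gain is an arbitrary, hypothetical threshold.
    """
    best = 1
    previous_high = 1.0
    for count in (2, 3):
        low, high = RAW_SCALING[codec][count]
        if low / previous_high - 1.0 < min_gain:
            break
        best = count
        previous_high = high
    return best


for codec in RAW_SCALING:
    print(f"{codec}: up to {useful_gpu_count(codec)} GPU(s)")
```

With these inputs, RED and Cinema RAW Light stay at one GPU, BRAW stops at two, and ARRIRAW and X-OCN continue to benefit from a third card.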

In other words, while multi-GPU configurations can help when working with RAW footage, the exact benefit varies greatly depending on the exact RAW codec you are working with.

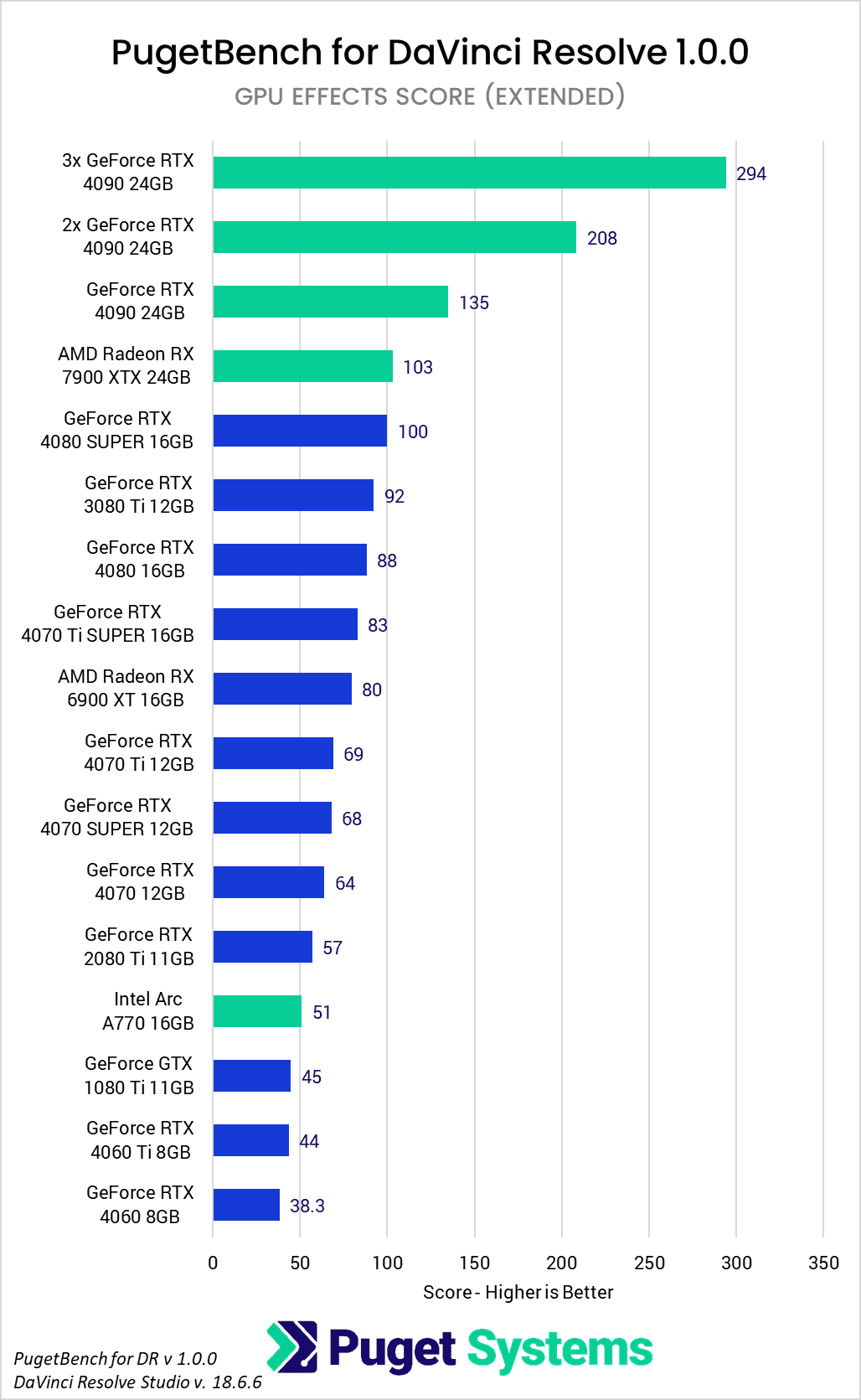

GPU Effects (OpenFX) GPU Performance

Next, we have performance when processing GPU Effects such as Noise Reduction, Optical Flow (Enhanced), Lens Blur, and general Color Correction. This type of workload in Resolve is often used as the poster child for how much a more powerful GPU can impact performance for video editing workflows in general.

Between Intel, AMD, and NVIDIA, the picture is similar to what we saw in the last section. AMD does very well with the Radeon 7900 XTX, beating the RTX 4080 SUPER by a few percent, although the difference is small enough that you likely wouldn’t notice it in the real world. The extra VRAM on the 7900 XTX, however, may push some users to go with AMD at this price point. Intel also manages to be more cost-effective than NVIDIA, although the performance ceiling for Arc is currently fairly modest. At the top end, the NVIDIA GeForce RTX 4090 provides great results, out-performing the RTX 4080 SUPER and Radeon 7900 XTX by about 30%.

In addition to the great scaling between individual GPU models, these effects also tend to scale very nicely with multiple GPUs. Across all the GPU Effects tests, we saw a 1.5x performance gain going from one GPU to two, or a 2.2x performance increase when you go from one GPU to three. If you look at chart #2, you can see how the benefits of multiple GPUs hold true for all the tests. Some (like Temporal Noise Reduction) show less of a benefit, while others (like Sharpen) show nearly perfect scaling, but all show at least some performance increase.
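One way to read the 1.5x and 2.2x figures is as scaling efficiency: the measured speedup divided by the ideal speedup, which equals the number of GPUs. The short sketch below works that out; the function name is our own, and the inputs are the rounded numbers quoted above rather than raw benchmark scores.

```python
def scaling_efficiency(speedup: float, gpu_count: int) -> float:
    """Fraction of ideal (linear) scaling achieved, where ideal speedup equals gpu_count."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return speedup / gpu_count


# Rounded GPU Effects speedups vs. a single GPU, from the text above.
GPU_EFFECTS_SPEEDUP = {2: 1.5, 3: 2.2}

for count, speedup in GPU_EFFECTS_SPEEDUP.items():
    efficiency = scaling_efficiency(speedup, count)
    print(f"{count} GPUs: {speedup}x speedup, {efficiency:.0%} of ideal")
# 2 GPUs: 1.5x speedup, 75% of ideal
# 3 GPUs: 2.2x speedup, 73% of ideal
```

In other words, efficiency barely drops when moving from two cards to three, which is why the third GPU remains worthwhile for OpenFX-heavy work.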

AI Features GPU Performance

New in our updated PugetBench for DaVinci Resolve 1.0 benchmark are tests covering a wide range of AI features in DaVinci Resolve. These include features that are processed during playback and export, like Super Scale, Person Mask, Depth Map, Relight, and Optical Flow (Speed Warp), as well as many that we call “Runtime” features, which are processed once. The latter include Audio Transcription, Video Stabilization, Smart Reframe, Magic Mask Tracking, and Scene Cut Detection.

These results are the ones we most looked forward to seeing. For everything else, our new benchmark update has expanded and improved the tests, but we already had a general idea of how different GPUs perform and how well they scale with multiple cards. For these AI tests, however, we have never done in-depth testing, so everything here is new.

First, note that NVIDIA has a pretty large advantage. Even the RTX 4060 Ti and the two-generation-old RTX 2080 Ti perform about on par with AMD’s top Radeon 7900 XTX, and performance only goes up with the 4070, 4080, and 4090 models.

Within NVIDIA, GPU performance is about what you would expect. Higher-end cards provide higher performance, and upgrading offers tangible benefits. For example, the RTX 4080 SUPER is 35% faster than the RTX 4070 SUPER, and the RTX 4090 is 14% faster than the RTX 4080 SUPER.
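Because these percentages are relative to different cards, they multiply rather than add when you want to compare the two ends of the range. The sketch below chains the two rounded figures from the paragraph above to estimate the RTX 4090 against the RTX 4070 SUPER; the function name is hypothetical, and the result is only as precise as the rounded inputs.

```python
def chain_gains(*gains: float) -> float:
    """Combine successive 'X% faster' steps (given as fractions) into one overall gain."""
    ratio = 1.0
    for gain in gains:
        ratio *= 1.0 + gain
    return ratio - 1.0


# 4070 SUPER -> 4080 SUPER is +35%, and 4080 SUPER -> 4090 is +14%.
overall = chain_gains(0.35, 0.14)
print(f"RTX 4090 vs RTX 4070 SUPER: about {overall:.0%} faster")
# RTX 4090 vs RTX 4070 SUPER: about 54% faster
```

Simply adding the two percentages would give 49%, which understates the gap.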

Multi-GPU scaling is also respectable, although similar to many of the previous sections, it varies based on the individual test. Overall, going from one GPU to two gives a 15% performance boost, while having three GPUs is nearly 30% faster than a single card. If you look at the individual tests (chart #2 above), however, you will notice that many of the AI features do not scale at all with more than a single GPU. In fact, a few (Magic Mask Tracking and Smart Reframe) seem to show a slight loss in performance with multiple GPUs. Others, like Optical Flow (Speed Warp), show no benefit with a second card but oddly show a huge benefit with three cards.
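Since both overall figures are quoted relative to a single GPU, it can help to work out what the third card adds on top of the second. The sketch below does that arithmetic; the function name is our own, and "nearly 30%" is treated as exactly 1.30x, so the result slightly overstates the real gain.

```python
def marginal_gain(speedup_before: float, speedup_after: float) -> float:
    """Extra performance from the newest card, relative to the configuration before it."""
    return speedup_after / speedup_before - 1.0


# Overall AI speedups vs. one GPU; 1.30 is an upper approximation of "nearly 30%".
AI_SPEEDUP = {1: 1.00, 2: 1.15, 3: 1.30}

second_card = marginal_gain(AI_SPEEDUP[1], AI_SPEEDUP[2])
third_card = marginal_gain(AI_SPEEDUP[2], AI_SPEEDUP[3])
print(f"Second card: +{second_card:.0%}, third card: +{third_card:.0%}")
# Second card: +15%, third card: +13%
```

On the overall AI score, each added card contributes roughly the same modest gain, which hides the large differences between individual AI tests.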

There are obviously still some optimizations to be done with these features, but having multiple GPUs can be beneficial in some cases. Super Scale and Face Refinement are prime examples, with terrific scaling with dual and triple GPU configurations. We have seen some interesting improvements already being made in the public beta for DaVinci Resolve Studio 19, and are looking forward to that version getting released to see how it will affect performance with these GPUs!

What is the Best Consumer GPU for DaVinci Resolve Studio 18.6?

This article covered a lot of ground, and if there is any specific aspect of DaVinci Resolve you are interested in (GPU Effects, AI, RAW Codec processing, etc.), we highly recommend reading that specific section. However, there are some generalizations we can make about what the best consumer GPU is for DaVinci Resolve Studio.

First, if you are looking for the best performance, you will likely want to stick with the AMD Radeon or NVIDIA GeForce RTX lines. Intel is doing great things with their Arc GPUs, and for their cost, they often stand toe-to-toe with NVIDIA. Since even Intel’s top-end card is a fairly budget-friendly option, however, the performance ceiling is relatively modest. But if you are on a budget, Intel Arc can be an interesting option.

Between AMD and NVIDIA, AMD does very well in many cases, with the Radeon 7900 XTX 24GB performing about on par with the GeForce RTX 4080 SUPER 16GB. NVIDIA does have a sizable performance lead for the new AI-based features in Resolve, but the extra VRAM from AMD can be very enticing if you need it.

On the other hand, in terms of maximum performance, AMD can’t quite keep up with NVIDIA’s GeForce RTX 4090 24GB. This holds true across the board, but the RTX 4090 is especially strong for processing GPU Effects and the newer AI-based features in Resolve.

Some of the most interesting results were multi-GPU scaling with one, two, and three GeForce RTX 4090 GPUs. This is something we have looked at in the past, but the new AI-based tests introduce even more things to examine. In general, the OpenFX (GPU Effects) in Resolve tend to scale nicely with multiple GPUs. Processing of RAW codecs and the AI features, on the other hand, vary depending on the specific test. Some scale well, others not at all. Fusion is the other wrench that gets thrown in, as the majority of the tests in Fusion actually see a loss of performance with multiple cards.

Whether using multiple GPUs is a good idea or not is going to come down to exactly what it is you do. If you work with ARRIRAW and tend to use a lot of OpenFX or want to use Super Scale, then multiple cards will give you a terrific boost to performance. Doing basic color grading and editing on RED footage, on the other hand… not so much.

If you are looking for a single answer for what consumer GPU is best for DaVinci Resolve Studio 18.6, the NVIDIA GeForce RTX 4000-series is likely to be the best bet for most users. AMD’s Radeon 7000 line also does well, but for most users, the higher overall performance of NVIDIA tips the scale in their favor.
