SIGGRAPH is the annual conference for CG professionals. Roughly 15,000 digital artists, filmmakers, directors, technologists, and others from around the world come together to share what they have learned and the technologies they are developing. So it should be no surprise that this year AI was at the forefront of many sessions (not to mention the fireside chat with Jensen Huang and Mark Zuckerberg). Virtually every AI session I tried to attend had a line at least 30 minutes beforehand and ended up with more attendees than chairs. There were so many sessions involving AI that even with Jon Allman (our AI Industry Analyst) and me attending different sessions, we couldn’t cover them all. However, I did want to share some of my thoughts on how the 3D industry is approaching AI.

Virtual Humans

One of the big topics discussed was “virtual humans”. NVIDIA hosted a three-part series covering the opportunities and challenges of bringing these virtual humans to life. This touched on many industries beyond just graphics. At the most basic level, think of a chatbot with a 3D avatar. However, they want to extend this to video games and digital actors. We’ve already seen some impressive digital humans, especially with Epic’s MetaHuman. The next step is to merge these digital avatars with a large language model (LLM) such as the one behind ChatGPT.

We’ve already seen impressive results with LLMs, so the desire to give one a virtual character makes sense. However, there are a lot of challenges. For a human to interact with it, the system needs to take the user’s speech, convert it to text, and send that text to an LLM (either local or in a cloud service), which generates a text response. That response then goes to a text-to-speech converter, and the resulting audio goes through an audio-to-animation system that drives the avatar. Even with modern systems, this entire process takes a few seconds, which is long enough to make the conversation feel unnatural and give the end user a bad experience.
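To make the chain of steps a bit more concrete, here is a minimal Python sketch of one conversational turn, with a timer around each stage. Every function in it is a hypothetical stub I made up for illustration; none of them are real NVIDIA or OpenAI APIs, and the returned values are placeholders. The only point is that the stages run one after another, so their delays add up.

```python
import time
from typing import Callable, List, Tuple

# All four stages below are hypothetical stand-ins, not real library calls.
# In a real system each one would be a separate model or cloud service.

def speech_to_text(audio: bytes) -> str:
    """Pretend transcription of the user's microphone audio."""
    return "hello there"

def query_llm(prompt: str) -> str:
    """Pretend LLM call (local or cloud) that returns a text reply."""
    return f"You said: {prompt}"

def text_to_speech(text: str) -> bytes:
    """Pretend speech synthesis; here the 'audio' is just the encoded text."""
    return text.encode("utf-8")

def audio_to_animation(audio: bytes) -> List[float]:
    """Pretend audio-to-animation step: one fake blendshape weight per byte."""
    return [b / 255.0 for b in audio]

# The stages run strictly in sequence, so total latency is the sum of all four.
PIPELINE: List[Tuple[str, Callable]] = [
    ("speech_to_text", speech_to_text),
    ("query_llm", query_llm),
    ("text_to_speech", text_to_speech),
    ("audio_to_animation", audio_to_animation),
]

def run_turn(mic_audio: bytes):
    """Run one conversational turn and record how long each stage took."""
    timings = []
    data = mic_audio
    for name, stage in PIPELINE:
        start = time.perf_counter()
        data = stage(data)
        timings.append((name, time.perf_counter() - start))
    return data, timings

if __name__ == "__main__":
    frames, timings = run_turn(b"fake microphone audio")
    for name, seconds in timings:
        print(f"{name}: {seconds * 1000:.3f} ms")
    total = sum(seconds for _, seconds in timings)
    print(f"total: {total * 1000:.3f} ms for {len(frames)} animation frames")
```

With stubs like these the timings are effectively zero, of course. Swap in real services and the per-stage breakdown shows where the few seconds of delay actually go.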

Beyond latency, the bigger problem is tone and nonverbal communication. For a virtual human to be believable, it will need to convey emotions that match the text. We say so much beyond just the words we speak. While this is a difficult task in itself, it is compounded by the fact that each culture has its own way of speaking and emoting, and those do not always translate from one culture to another.

NVIDIA has a sample digital human available to try here: https://build.nvidia.com/nvidia/digital-humans-virtual-assistant

These challenges, as well as many others discussed, do not yet have solutions. It was refreshing to hear these researchers be very forthcoming that AI has potential but is not ready yet. This is an area to watch. As mentioned earlier, a lot of industries are invested in virtual humans, so a lot of development will be happening over the next few years.

Personalized AI

While the broader discussions around AI have been about LLMs that do everything, the big trend at SIGGRAPH was smaller, highly personalized models that do one specific task. This was a big part of the fireside chat/keynote with Jensen. I could have an AI assistant dedicated to writing articles that is trained specifically on how we write here at Puget Systems. It wouldn’t need to have the full knowledge of ChatGPT, because so much of that information just wouldn’t be useful. Instead, a smaller model would be faster to train, faster to respond, and able to format its response exactly how we want it. Another AI assistant could help with Python scripting and know how to tie any benchmark I create into our automation system. Or maybe one that sorts through benchmark results and flags any anomalies. Because these models are small, it is easier for someone to train them on their own workstation rather than on some server. And because they are run locally, the data is more secure. This will probably be the next big wave in AI models, and where we’ll start seeing them incorporated into many existing applications.

AI for 3D models

The area I was most interested in at this year’s SIGGRAPH was how AI handles the creation of 3D models. We’ve seen excellent results with text, really good results with 2D images (still a ways to go on granular control), and some okay-ish results with video. However, most of the AI-generated 3D models I’ve experimented with have left much to be desired. The meshes tend to be very chaotic, with no good edge loops. The characters I generated would be a nightmare to rig and animate, and the textures and UVs would need to be remade entirely.

The first session I attended was from Autodesk. They do not yet have a working model for 3ds Max or Maya, but it is in development. They gave a few reasons for this. As stated above, the results so far aren’t great, and they don’t want to release something that isn’t actually useful. But more importantly, they want to tackle this from the perspective of a 3D artist. They want their AI tools to supplement an artist’s workflow, not replace it. This was a very refreshing perspective. Too often we hear from CEOs who pitch AI as the solution to a problem no one had. While Autodesk didn’t have any sort of timeline, I’m excited to see what they come up with.

Many other sessions had a similar “we are looking into it, but so far nothing to show” theme. However, I did find a few 3D tools that can be used right now and have promising results. The first is from Shutterstock, owner of the 3D asset marketplace TurboSquid. This tool isn’t fully available to the public yet, but you can test it here. The geometry is much cleaner than that of any other model I’ve tried, though the demos they showed had rather simple geometry. One really cool feature is that it is multimodal, meaning you can input images as well as text. For example, they gave it a concept drawing of a shield along with text explaining other details, and the AI produced a full model. One limitation is that it is restricted to the styles they have trained. It also resides on their servers, so data privacy is a concern for those in studios. Currently, there is no way to train your own artistic style, which is something that studio users would want.

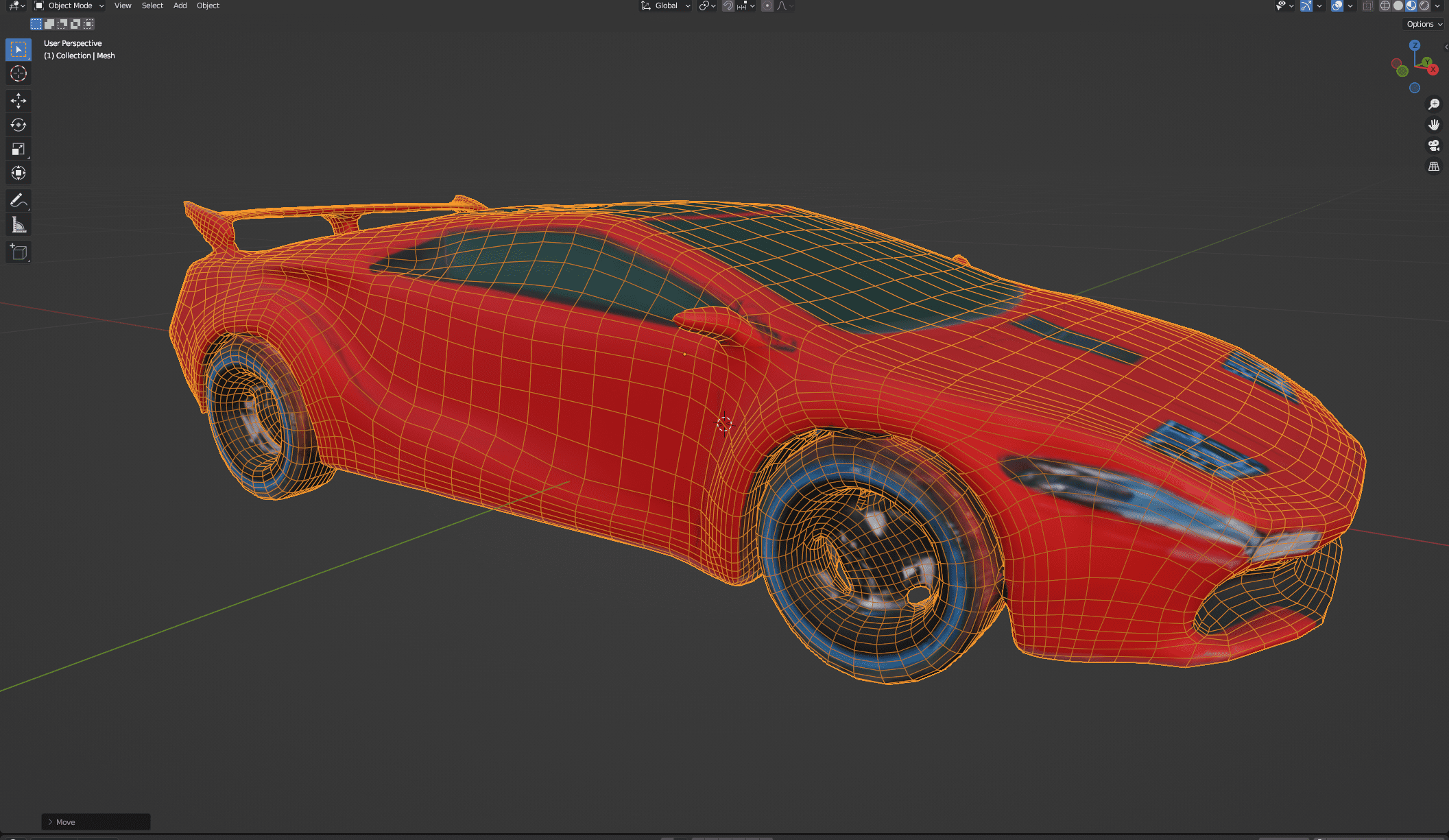

This is an example of what is generated with the prompt “red cyberpunk sports car.” It did miss the “cyberpunk” part of the prompt, but it was able to produce a decent red sports car. The geometry is good, but it still doesn’t separate things like the tires and wheels. Also, the headlights and windows are texture only, when there should be supporting geometry. This isn’t really usable for any sort of production, but it could work for pre-viz or storyboarding, where an artist will go on to create the finished product.

Another tool is Sloyd, available as a web app, and soon as a plugin for DCCs such as Blender and 3ds Max. Sloyd takes an interesting approach. Instead of generating all the geometry from scratch, it uses a library of premade sub-objects. This isn’t just kitbashing, as the sub-objects have parameters that can be changed to fit the final project. It has similar drawbacks to Shutterstock’s solution: it is limited to the components in its library, there is no way to run it locally, and there is no way to add your own components. However, these should be relatively easy to overcome in due time. The good news is that you can try it now for free.

Conclusion

This is just a brief summary of some of the things seen at this year’s SIGGRAPH. The main highlight is the rapid integration of AI into the 3D industry, offering a glimpse into both the immense potential and the significant challenges ahead. From the evolving concept of virtual humans to the nuanced discussions around personalized AI, it is clear that AI is set to redefine many aspects of digital art and content creation. However, while the advancements are impressive, the technology is still in its infancy. The industry’s cautious yet optimistic approach, particularly the emphasis on AI as a tool to enhance rather than replace human creativity, is a promising sign of a balanced future.

As the 3D industry continues to explore AI’s capabilities, the focus seems to be on creating tools that empower artists and technologists rather than overshadow them. The ongoing development of AI for 3D modeling, with companies like Autodesk and startups like Sloyd, reflects a commitment to quality and artist-centric solutions. Though many of these tools are still in development or early stages, the enthusiasm and investment in AI suggest that we are on the brink of significant breakthroughs. The future of AI in 3D is undoubtedly exciting, and as these technologies mature, they will likely become indispensable assets in the creative toolkit, driving innovation across the industry.