Stable Diffusion is seeing more use for professional content creation work. How do NVIDIA GeForce and AMD Radeon cards compare in this workflow?

RealityCapture, like other photogrammetry applications, is built to take a batch of photographs and turn them into digital, 3D models. The algorithms used during that process are designed to be “out of core”, meaning that not all of the data has to be loaded into system memory (RAM) at the same time – allowing for full processing without requiring a ton of available memory. Having more RAM can still be beneficial, though, so we decided to test how much impact it has on performance.

Depending on the number of GPU-accelerated effects you use, a higher-end GPU can give you a nice performance boost in Premiere Pro. But what will give you the best performance for your dollar? An NVIDIA GeForce RTX video card, or one of AMD’s Radeon cards?

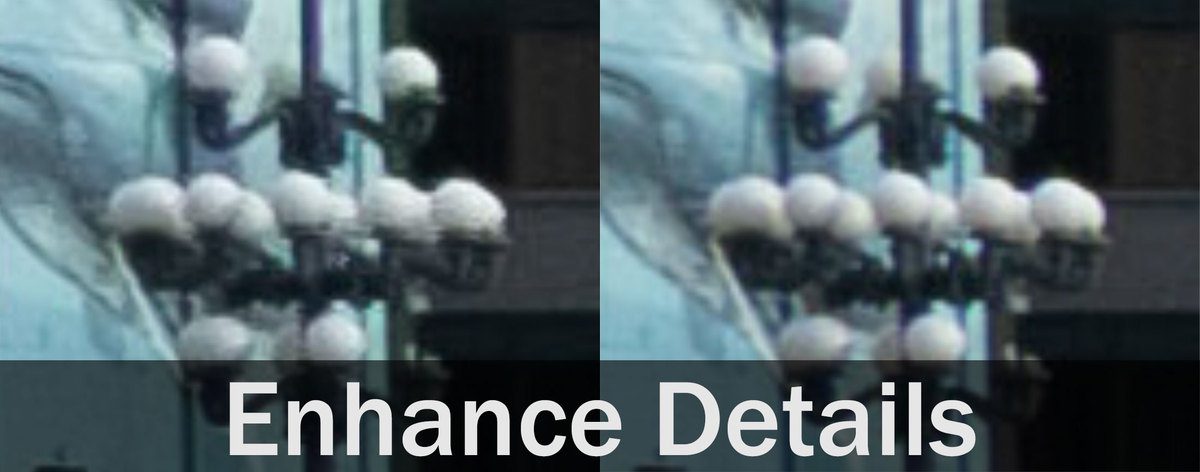

In the latest version of Lightroom Classic CC (8.2), Adobe has added a new feature called “Enhanced Details”, which uses machine learning to improve the quality of the debayering process for RAW images. This is very GPU-intensive, so we wanted to see exactly how much faster it can be on a modern, high-end GPU.

Using NVLink requires a physical bridge between two identical NVIDIA graphics cards, but several models of bridges have been sold with different branding. Which versions work with which GeForce, Titan, and Quadro graphics cards?

OctaneRender is a GPU-based rendering engine built on NVIDIA’s CUDA platform, so it runs on NVIDIA-based graphics cards. An update to its benchmark, OctaneBench 4.00, was recently released, so we gathered most of the current and recent GeForce and Titan series video cards and tested them to see how they perform in this version of OctaneRender.

Redshift is a GPU-based rendering engine built on NVIDIA’s CUDA platform. We recently saw how GeForce RTX cards perform in this renderer, but now the Titan RTX is out with a staggering 24GB of memory onboard. That sounds great for rendering complex 3D scenes, but how does it actually perform? And are there any caveats?

The new NVIDIA Titan RTX has a stunning 24GB of video memory, making it very attractive for many editors and colorists working with 8K media. But is the extra VRAM all it has going for it, or is it also significantly faster than something like the RTX 2080 Ti 11GB?

Pix4D is an advanced photogrammetry application, suited to a wide range of uses, with a focus on handling images captured by drone cameras. Processing of those images into point clouds and 3D meshes / textures is time-consuming, heavily using a computer’s CPU and GPU. A new version, 4.3, was released recently – so we have tested multiple projects across the new GeForce RTX series of video cards, as well as the previous generation, to see which graphics card performs the best.

V-Ray is a hybrid rendering engine that can run on both CPUs and GPUs, depending on the version that is used. The current benchmark only measures CPU and GPU performance separately, though. That is not a perfect match for how the modern V-Ray Next engine performs, but it can still be helpful when comparing GPU rendering performance. Let’s see how NVIDIA’s new GeForce RTX 2070, 2080, and 2080 Ti cards stack up against the previous generation.