
Introduction

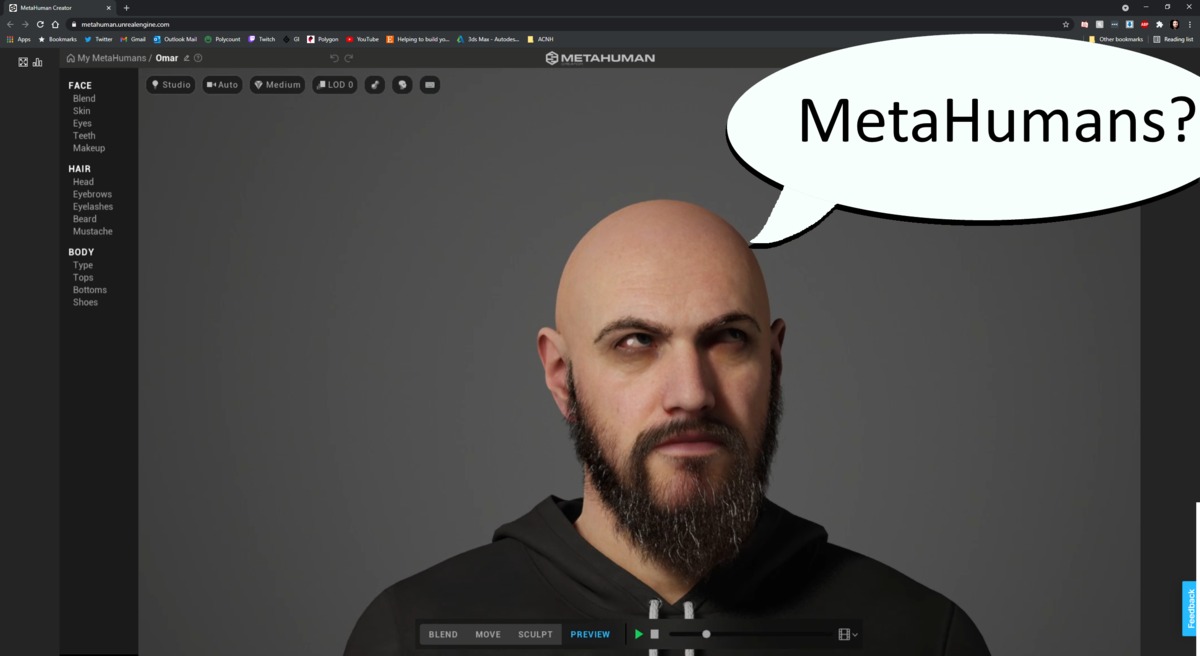

If you are anywhere near any of the Unreal Engine communities on social media, you’ve probably heard the term “MetaHuman” several times a day recently, along with photos and videos of people’s creations. So what exactly is a “MetaHuman,” and how will it be useful to the Unreal community? Let’s take a look at the tool in its current form and at Epic’s goals for it.

First off, what is this “MetaHuman”? It is a new tool from Epic that allows you to quickly create a digital character for Unreal Engine. The character is fully ready to be animated and has all the prep needed to work with motion capture systems. Within a few minutes of getting into the tool, you can have a 100% unique character exported to Unreal Engine, ready to be integrated into your project. Here is the statement from Epic’s website: “MetaHuman Creator is a cloud-based app that empowers anyone to create photorealistic digital humans, fully rigged and complete with hair and clothing, in minutes.” There is a lot to unpack in that statement, so let’s dive into some of these points and discuss the strengths and weaknesses.

My attempt at a self-portrait, achieved in only a couple of hours.

It’s in the Cloud

The first thing you may notice is that it is cloud-based: nothing needs to be downloaded to the local machine, and it all runs in a web browser. As of right now, it is not actually part of Unreal Engine. According to Epic, the reason is the significant amount of data the Creator pulls from to make these characters. All of that data comes from highly detailed 3D scans of real people. As you adjust the controls in the Creator, it pulls from this database to create something new, generating a high-poly mesh and 8K textures. This is also being rendered in real time with ray tracing. All in all, a lot of processing is going on. Since it all happens on Epic’s servers and only the results are streamed to you, almost any computer can run the Creator, and there is no massive download.

The downside is that you are reliant on an internet connection. Any network error, and suddenly you don’t have access to the tool. Even once you have started editing, a dropped connection kicks you out of the tool, and you’ll have to wait for the connection to be reestablished before you can continue working. Usually this isn’t an issue, but it can be incredibly frustrating to be unable to keep working because your ISP is having problems.

Realistic, as in Plausible

The second part of Epic’s statement is that this is for creating photorealistic digital humans or, more specifically, plausible human characters. Because everything is based on 3D scans of real people, none of the sliders in the Creator will let you make anything it hasn’t seen. This means you will get a plausible-looking human no matter what you do with the controls. The available controls are fairly intuitive and quite powerful. The flip side is that there are no overly exaggerated features, no stylized characters, and no fantasy creatures: only photorealistic humans. If you are looking to create an alien species for a sci-fi project, you will need to use a different tool.

Also, while the customization tools for humans are quite robust, you won’t be able to make a 1-to-1 copy of a real person. There are a lot of adjustments you can make, but they aren’t nearly as granular as sculpting in ZBrush. You also can’t import a custom skin texture, and the included skin options only go so far. This means you won’t be able to make an exact copy of a historical figure or a digital double of a live actor, though you may be able to get close enough to pass in a wide shot.

There are clothing options, but at this stage they are very limited: only three tops, three pairs of pants, and three pairs of shoes. You can adjust the color of the clothes, but you can’t import additional textures or custom models from another program. I’m sure this will see some improvements soon, but for the time being, to get any custom clothing, you’ll have to export the MetaHuman to Maya and make it the old-fashioned way.

Application Connections

Speaking of exporting, currently the only programs the Creator can export to natively are Unreal Engine and Maya. This is only because Maya is the software the team uses to create the animations, and they haven’t taken the time to make any additional exporters. Many people in the community are working on their own plugins for applications like Blender, but it is worth noting that if you aren’t using Maya and want to use the rig that MetaHuman generates, you will need to do some extra work to get it usable. Of course, you can always animate with motion capture right in Unreal. The various motion capture systems have been updating their plugins to work with MetaHumans. You still need to go through the normal calibration process to map your actor’s face to that of the MetaHuman, but that is to be expected.

Now let’s take a look at the major industries using Unreal Engine and how they may or may not use MetaHumans.

Game Development

Let’s start with gaming; after all, Unreal Engine is first and foremost a game engine. One big draw for the gaming industry is that the tool not only creates a great-looking character but also generates several LODs (levels of detail: a series of progressively lower-poly versions of the same character). Generating LODs is often a time-consuming process. I’m not sure how many larger studios will use this for main characters, given the lack of real fine-tuning and of even simple things like adding a scar or a specific haircut. However, it could be very helpful for creating background characters or, for example, populating a city with random NPCs. Some indie developers may use it, as it’s a great time saver, especially if they don’t have a dedicated character artist or animator. Again, it is limited to realistic-looking humans, which narrows the pool of potential users.

On another important note, players cannot use this to customize their characters. Because everything lives in the cloud, there is no way to integrate the cloud app with a running game. Theoretically, such a service could work, but that is not Epic’s intent, so they have not built that system. Even once a MetaHuman is imported into Unreal, there is still a lot of setup needed to get the character working: not all of the default control rig would be needed, any custom clothing or accessories would need to be created beforehand and merged at run time, and on and on. Don’t expect that to happen any time soon.

Architecture

Architects are increasingly looking to Unreal Engine as a rendering option, whether for stills, movies, or virtual walkthroughs. However, I don’t anticipate this industry will jump on the MetaHuman bandwagon too quickly. Often, architects don’t use any characters at all, since they are trying to sell the environment. When they do put a character in a scene, it is more a piece of set dressing than anything else. There is already a large library of stock images, videos, and 3D characters made for exactly this use. For most of these users, the work needed to get enough characters into usable poses is just too high when they can turn to any number of services that deliver better results in less time. Epic even offers a free pack of over 100 3D-scanned characters, complete with props and poses, ready to be dropped into an environment. These are not animated, but when your end goal is a static image, that’s not too important.

Film/TV industry

Film, TV, virtual production, and the like are all excited about MetaHumans. Anyone using Unreal for previz will jump on any tool that gives them a better look faster. If they can get a character that is even somewhat close to their actor, begin blocking out shots, and do some basic motion capture work with minimal effort, that would be a huge win. As in the game industry, this may not be used for main characters right away, but being able to populate scenes with background characters and animate them with motion capture very quickly will be incredibly useful.

Some studios may take the time to export the MetaHuman, do additional work in Maya or ZBrush to further customize the character, and then reimport it into Unreal for motion capture and rendering. As noted earlier, because the tool currently can’t match a live actor 100%, it probably won’t be very useful as a digital stunt double, at least not without going back through the traditional pipeline.

Final thoughts

MetaHuman Creator is still in early development. By Epic’s own admission, it is a relatively small team with a lot of work ahead of them. What they have delivered so far is a fantastic tool. Several existing software packages offer similar features, and some, like Character Creator 3, offer a lot more. The big draw of MetaHuman is its tight integration with Unreal Engine and the ability to get a photorealistic character, rigged and ready to animate with motion capture, in minutes. Its limitations keep the use cases relatively narrow for now, but as the tool continues to evolve, more and more people will adopt it into their workflows. It has only been available for a short time, and the community has already done some pretty cool things. Keep your eye on this tool.

If you want to learn more about MetaHumans, including what Epic has planned, I highly recommend checking out the recent live stream the MetaHuman team hosted. It answers a lot of questions about their goals and the changes they expect in the near future.

Puget Systems offers a range of powerful and reliable systems that are tailor-made for your unique workflow.