Introduction

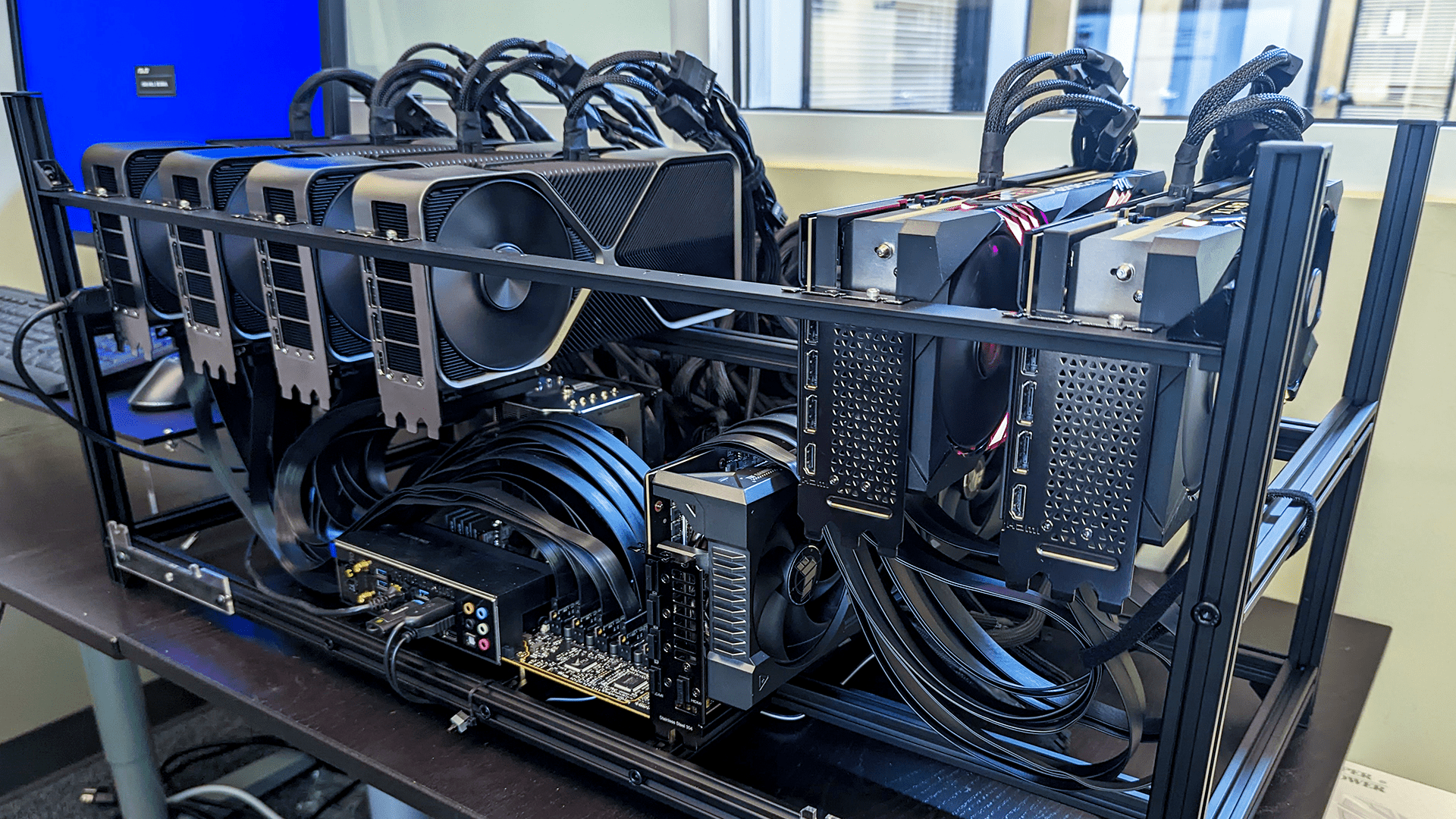

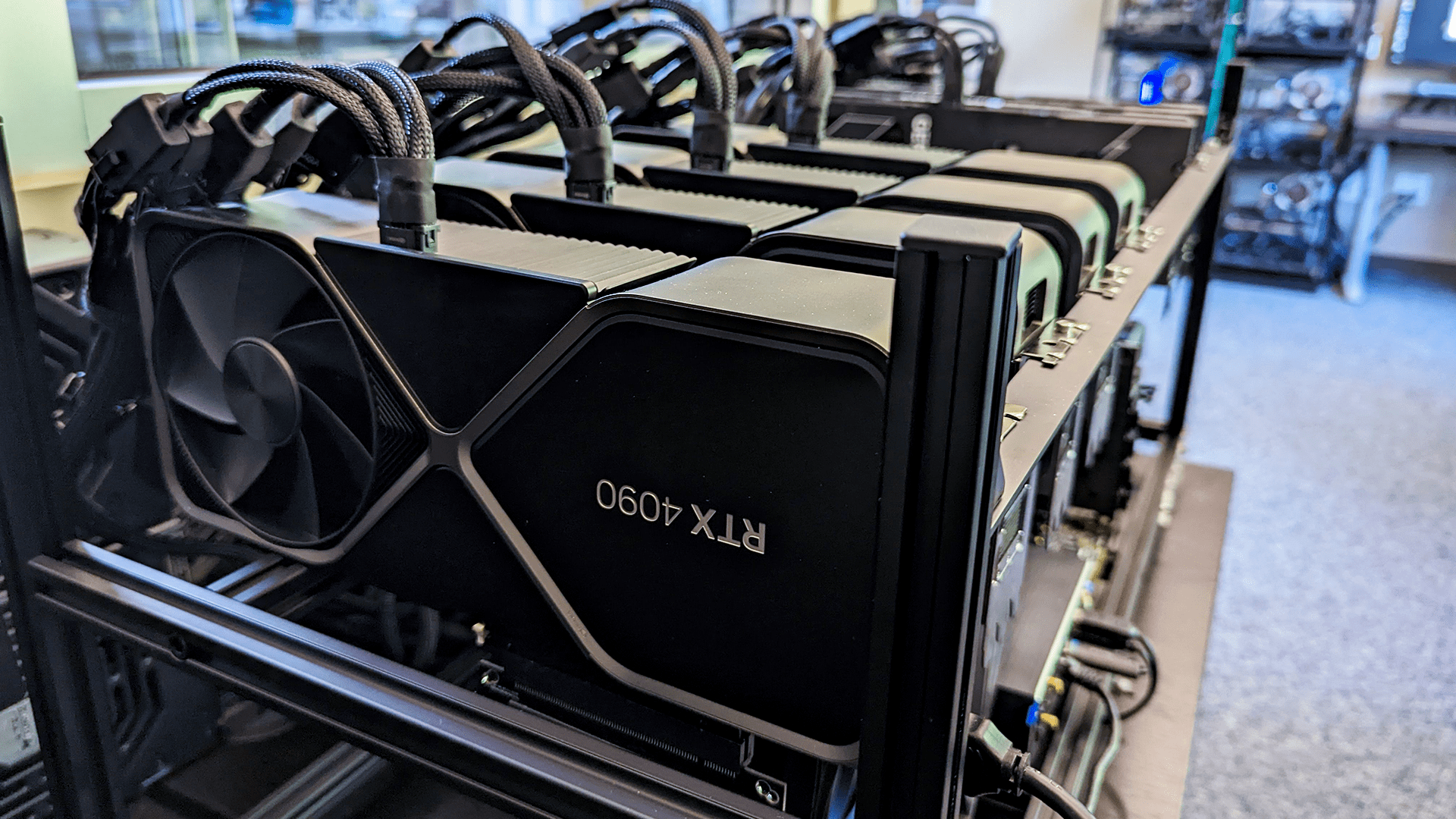

About a month ago, NVIDIA began rolling out their new RTX 40 series GPUs, starting with the GeForce RTX 4090 24GB. The RTX 4090 is an incredibly powerful GPU, and in our content creation review, it easily blew past anything else on the market. In that same article, we included test results in both single and dual GPU configurations, but today, we want to take things to the extreme and see what kind of performance we can get with up to seven of these powerful cards.

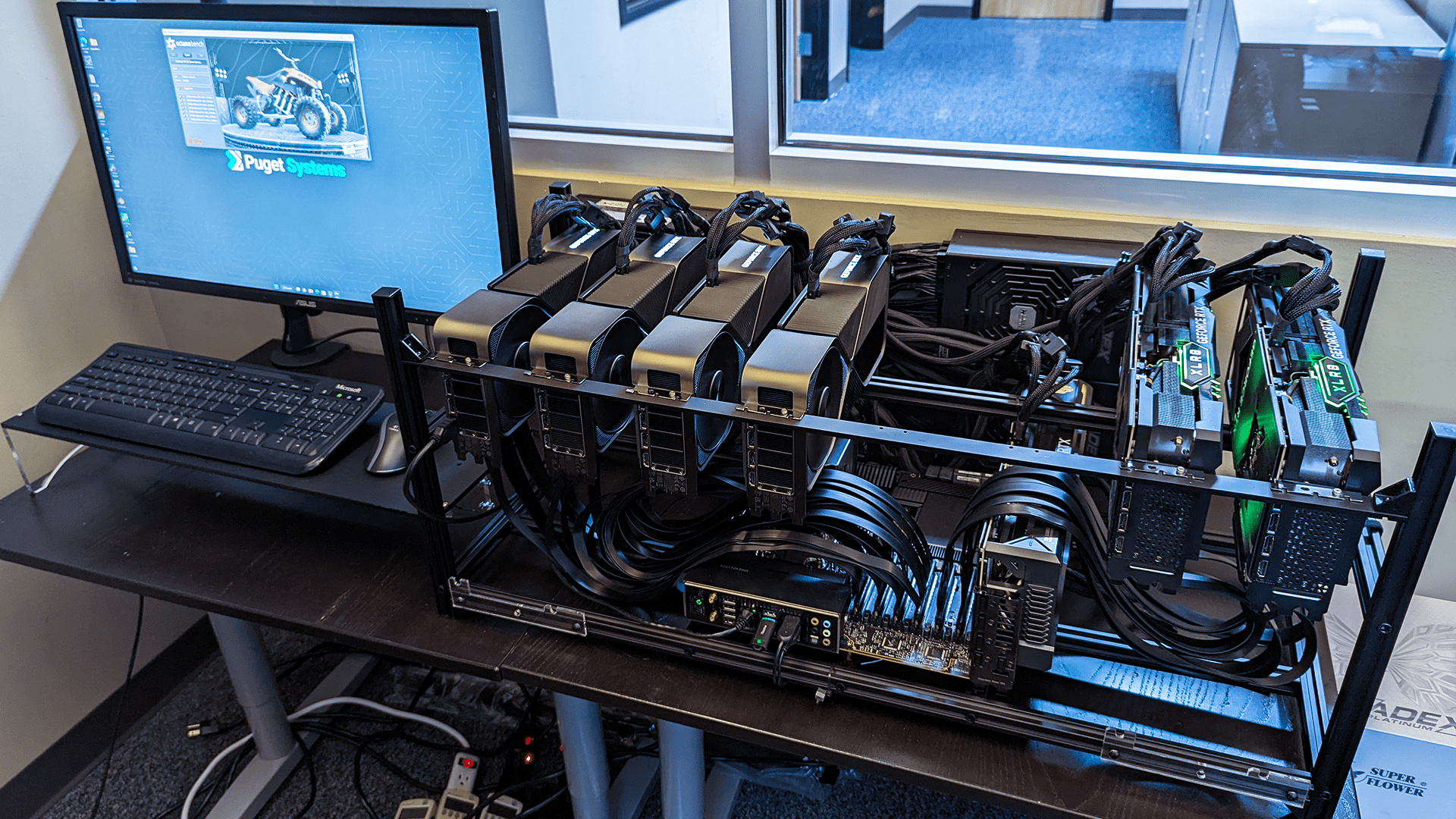

Before we get too far, we want to clarify that here at Puget Systems, we have no intention of selling systems with this many RTX 4090s. These cards are both physically large and demand a ton of power, and we are currently planning on limiting our standard systems to a maximum of two RTX 4090s. Any more than that and you will not only run into issues with the physical size of the cards, but will also need more power than a standard 15-amp circuit can provide in the USA.

But sometimes, our curiosity gets the better of us and we can’t help but test a configuration that is far beyond what we typically offer to our customers. And after seeing what two RTX 4090 GPUs are capable of, we had to push it to the maximum that our current Threadripper PRO workstation motherboards can handle and turn it up to seven.

Test Setup

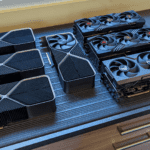

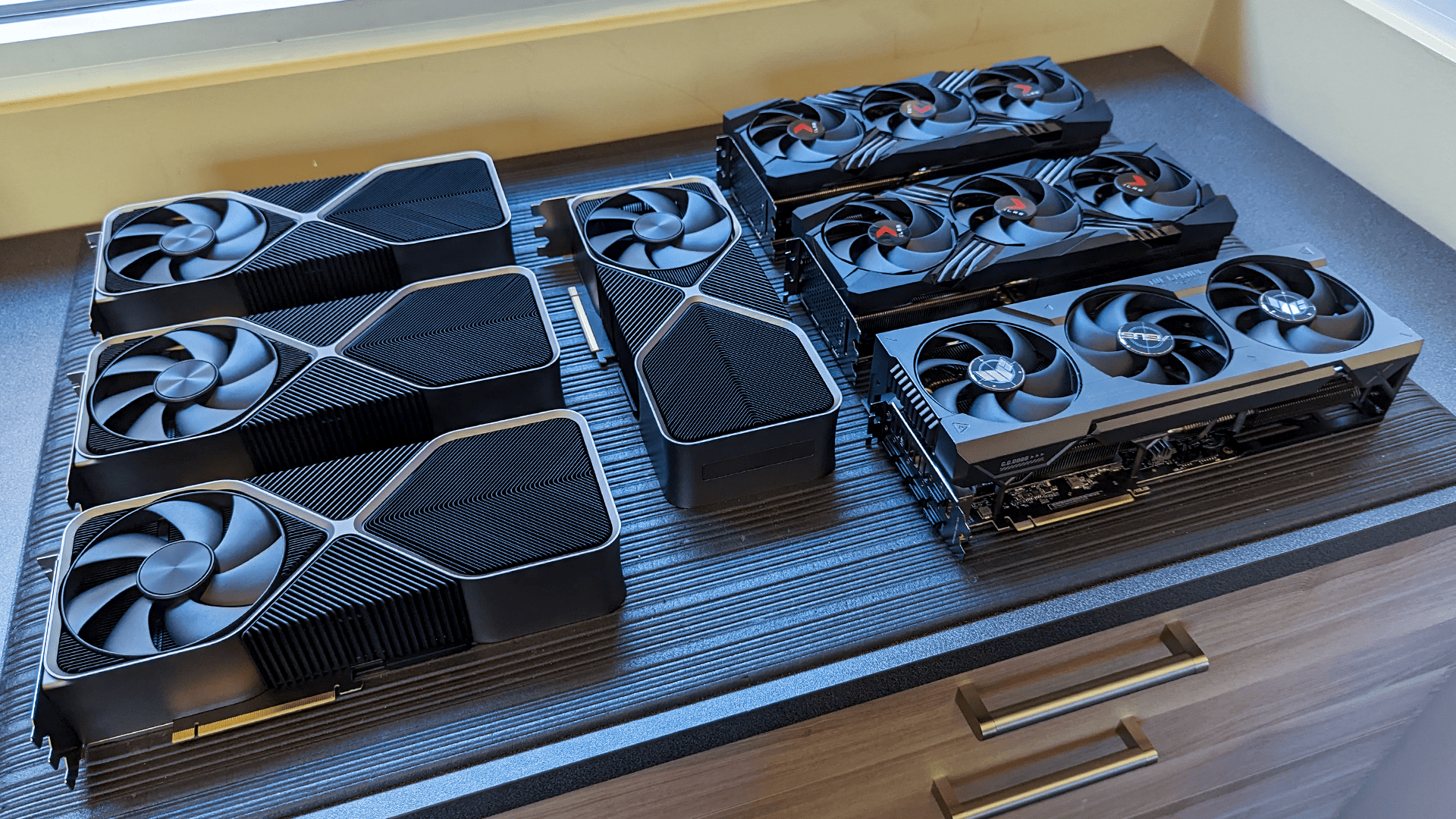

With GPU supply being what it is right now, we unfortunately could not do this testing with just a single brand/model of card. Instead, we raided cards from our Labs and R&D teams, borrowing both NVIDIA FE cards and AIB models. The one requirement we stuck to was that none of the cards were overclocked models (mostly because we didn’t have enough OC cards to do this testing, and didn’t want to mix them).

Test Platform

| CPU: AMD Threadripper PRO 5995WX 64-Core |

| CPU Cooler: Supermicro SNK-P0064AP4 |

| Motherboard: Asus Pro WX WRX80E-SAGE SE WIFI |

| RAM: 8x Micron DDR4-3200 16GB ECC Reg. (128GB total) |

| GPUs: 4x NVIDIA GeForce RTX 4090 24GB FE 2x PNY GeForce RTX 4090 XLR8 24GB 1x Asus GeForce RTX 4090 TUF 24GB |

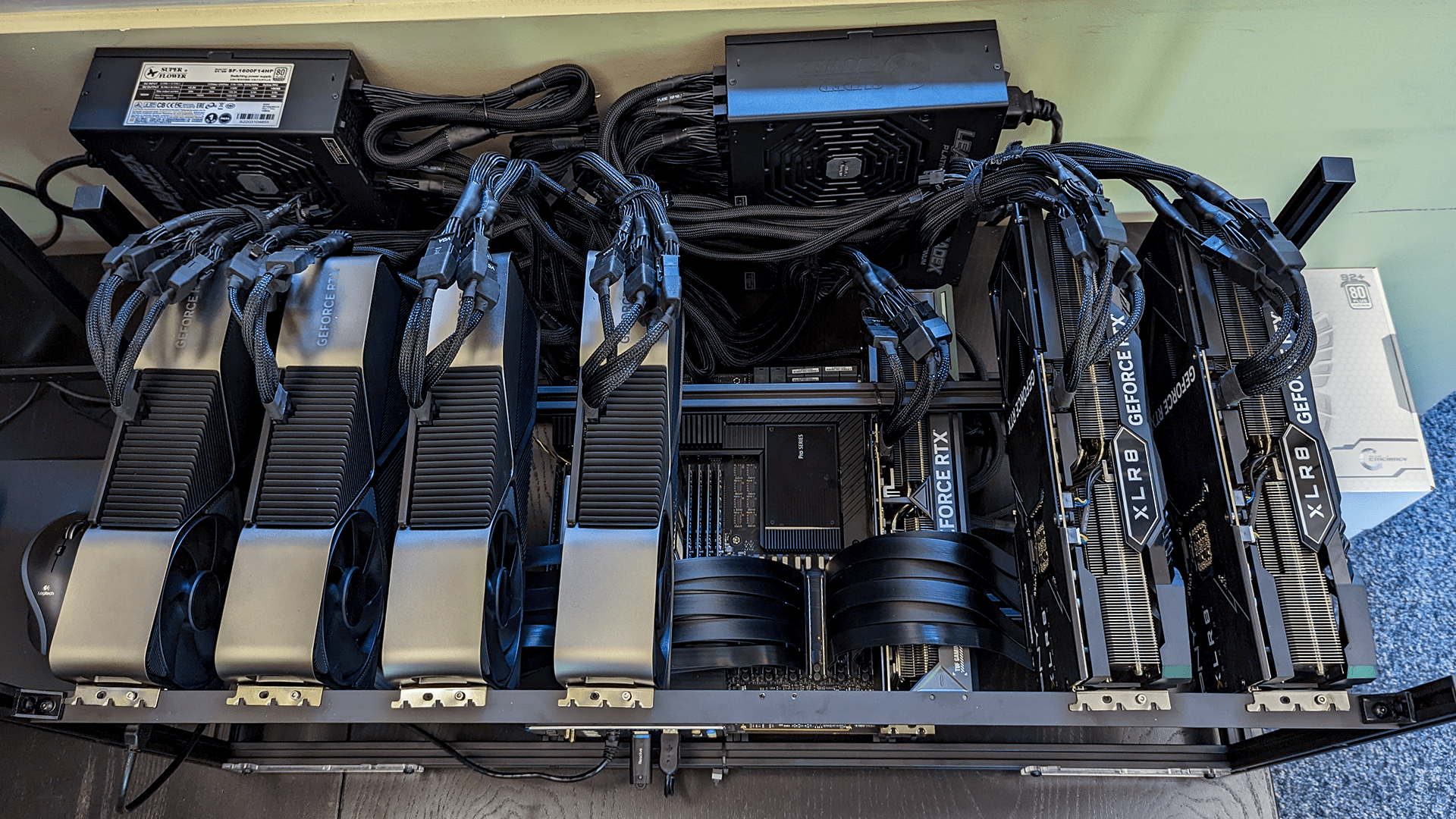

| PSU: 4x Super Flower LEADEX Platinum 1600W |

| Storage: Samsung 980 Pro 2TB |

| OS: Windows 11 Pro 64-bit (2009) |

| Benchmark Software |
| --- |
| DaVinci Resolve 18.0.4, PugetBench for DaVinci Resolve 0.93.1 (GPU Effects only) |
| OctaneBench 2020.1.5 |
| Redshift 3.5.09 |
| V-Ray Benchmark 5.02.00 |

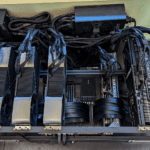

In addition to the base hardware itself, we used an 8 GPU mining rack and 6x Lian Li 600mm PCI-e 4.0 riser cables (PW-PCI-4-60X) to get all the GPUs connected to the motherboard. For those who are curious, the total price for this configuration is somewhere in the neighborhood of $28-30k.

Considering we were using a bunch of PCIe riser cables and up to four power supplies, things went remarkably smoothly. The only significant issue we had was that we were unable to get the RTX 4090 cards working properly at Gen4 speeds. Not only would the entire system hang as soon as it tried to boot to the OS drive, but the BIOS was also extremely laggy – taking more than a second to respond to inputs, and often repeating a single input two or three times.

We did not have this problem if we switched to an RTX 30 series GPU (or if we plugged the RTX 4090 straight into the motherboard), but for these 4090s with the PCIe extension cables, we ended up having to set the PCIe mode to Gen3. Luckily, PCIe x16 Gen3 is still excellent, and should only have a minor impact on overall performance for the applications we are testing.
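To put the Gen3 fallback in context, here is a rough sketch of the theoretical bandwidth of an x16 link at each generation. It uses the published per-lane transfer rates and 128b/130b encoding; the function and constant names are our own for illustration, and real-world throughput will be lower than these ceilings.

```python
# Theoretical one-direction PCIe bandwidth; measured throughput is lower.
TRANSFER_RATE_GT_S = {"Gen3": 8.0, "Gen4": 16.0}  # giga-transfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding (Gen3 and Gen4)


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Return the theoretical bandwidth in GB/s for a link of the given width."""
    gigabits_per_second = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_second / 8  # bits -> bytes


for gen in TRANSFER_RATE_GT_S:
    print(f"{gen} x16: {pcie_bandwidth_gb_s(gen):.2f} GB/s")
```

Gen3 x16 offers half the theoretical bandwidth of Gen4 x16, which matters most for workloads that constantly move data across the bus rather than rendering from data already in VRAM.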

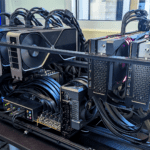

Power Draw: Idle & OctaneBench

Before we get into performance, we wanted to give a little look into the overall power draw of the system. The RTX 4090 is one of the most power-hungry GPUs ever released, and we needed a total of four 1600W power supplies just to provide all the PCIe power cables the cards (and motherboard) required.

The chart above shows the total power draw from the wall across all power supplies, both at idle and at maximum load during OctaneBench. We could have used synthetic tests to get even higher power draw, but we opted to stick to realistic workloads – even if this configuration isn’t exactly realistic itself.

Idle power draw was fairly decent all things considered, and went from 150W with a single GPU to 353W with all seven. That averages out to roughly 34 watts for each GPU added, with some variance every couple of cards as we added additional power supplies to the mix.

The power draw under load was where things got a bit out of hand. With a single RTX 4090, total system power draw in OctaneBench was only 510 watts, but each card we added increased the power draw by about 375 watts. At the very top, we peaked at 2,750 watts, or roughly the equivalent of two standard electric space heaters going at full blast. Our AC was certainly working overtime during these tests!

Technically, in terms of straight wattage, we could have gotten away with just two 1600W power supplies. However, the main limiter was actually the number of PCIe power cables each PSU supported. And with how power-hungry the RTX 4090 can be, we wanted to play it safe and avoid using things like PCIe splitters or pigtails.
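As a sanity check on those numbers, the load figures can be approximated with a simple linear model. This is only a sketch built from the rounded values quoted in this section (510W for one card, about 375W per additional card); the function names are hypothetical, and it deliberately ignores the PCIe cable count that was the real constraint.

```python
import math

BASE_LOAD_W = 510       # total system draw with one RTX 4090 (from this section)
PER_EXTRA_GPU_W = 375   # approximate increase per additional card (from this section)
PSU_RATING_W = 1600     # rating of each power supply used


def estimated_load_w(gpu_count: int) -> int:
    """Linear estimate of wall power under OctaneBench load."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return BASE_LOAD_W + PER_EXTRA_GPU_W * (gpu_count - 1)


def psus_needed_by_wattage(load_w: int) -> int:
    """PSUs required if wattage were the only limit (it was not)."""
    return math.ceil(load_w / PSU_RATING_W)


for count in range(1, 8):
    load = estimated_load_w(count)
    print(f"{count} GPU(s): ~{load} W, {psus_needed_by_wattage(load)} PSU(s) by wattage")
```

For seven cards the model lands within a few watts of the measured 2,750W peak, and it agrees that two 1600W units would cover the raw wattage.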

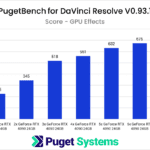

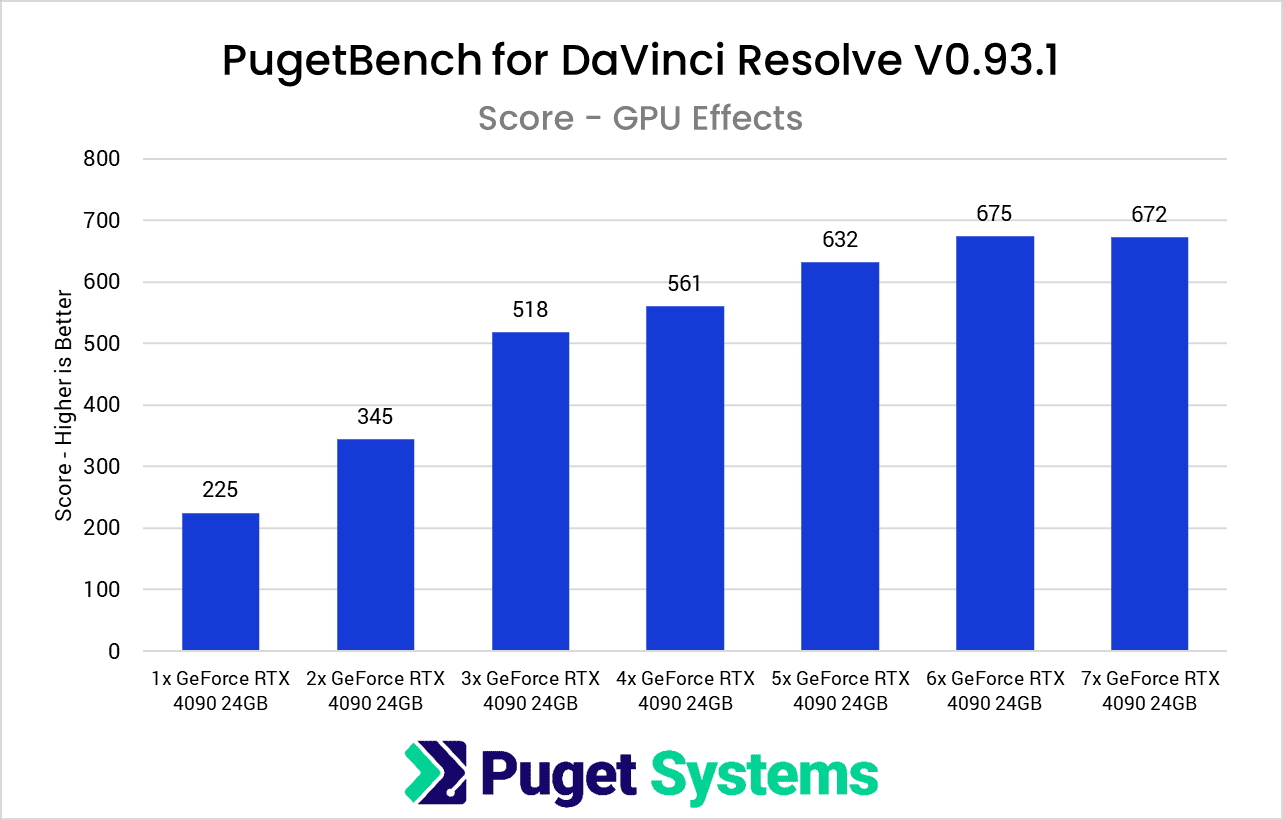

Performance: DaVinci Resolve Studio

To start off our performance testing, we want to look at the “GPU Effects” portion of our DaVinci Resolve benchmark. While DaVinci Resolve Studio doesn’t scale as efficiently as many of the other applications we will be testing, Resolve is known in the video editing world for how well it can utilize multiple GPU configurations for OpenFX and noise reduction.

Going from one card to two, and two cards to three, both result in about a 50% increase in performance. This results in a 3x RTX 4090 configuration performing about 2.3x faster than a single card. After that, however, the gains drop off sharply, and you are only looking at about a 10% performance gain for each additional RTX 4090. And, once you get up to six cards, we saw no performance advantage to adding a seventh card.

At the very peak, with six RTX 4090 GPUs, we saw exactly a 3x increase in performance over a single GPU. That isn’t terrible by any means, but we expect to see even bigger gains when we start looking at GPU rendering engines like Redshift, V-Ray, and Octane.
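To see how those per-card gains compound, here is a small sketch that multiplies out the approximate percentages quoted above. The step values are the rounded figures from the text, not the raw benchmark scores, so treat the output as illustrative.

```python
# Approximate per-step gains quoted above: +50% for cards 2 and 3,
# +10% for cards 4 through 6, and no gain for card 7.
STEP_GAINS = [0.50, 0.50, 0.10, 0.10, 0.10, 0.00]


def cumulative_speedups(step_gains):
    """Compound each step's gain onto the running speedup, starting at 1x."""
    speedups = [1.0]
    for gain in step_gains:
        speedups.append(speedups[-1] * (1 + gain))
    return speedups


for gpu_count, speedup in enumerate(cumulative_speedups(STEP_GAINS), start=1):
    print(f"{gpu_count} GPU(s): ~{speedup:.2f}x")
```

Compounding the rounded gains gives about 2.25x at three cards and just under 3x at six, which lines up with the measured results.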

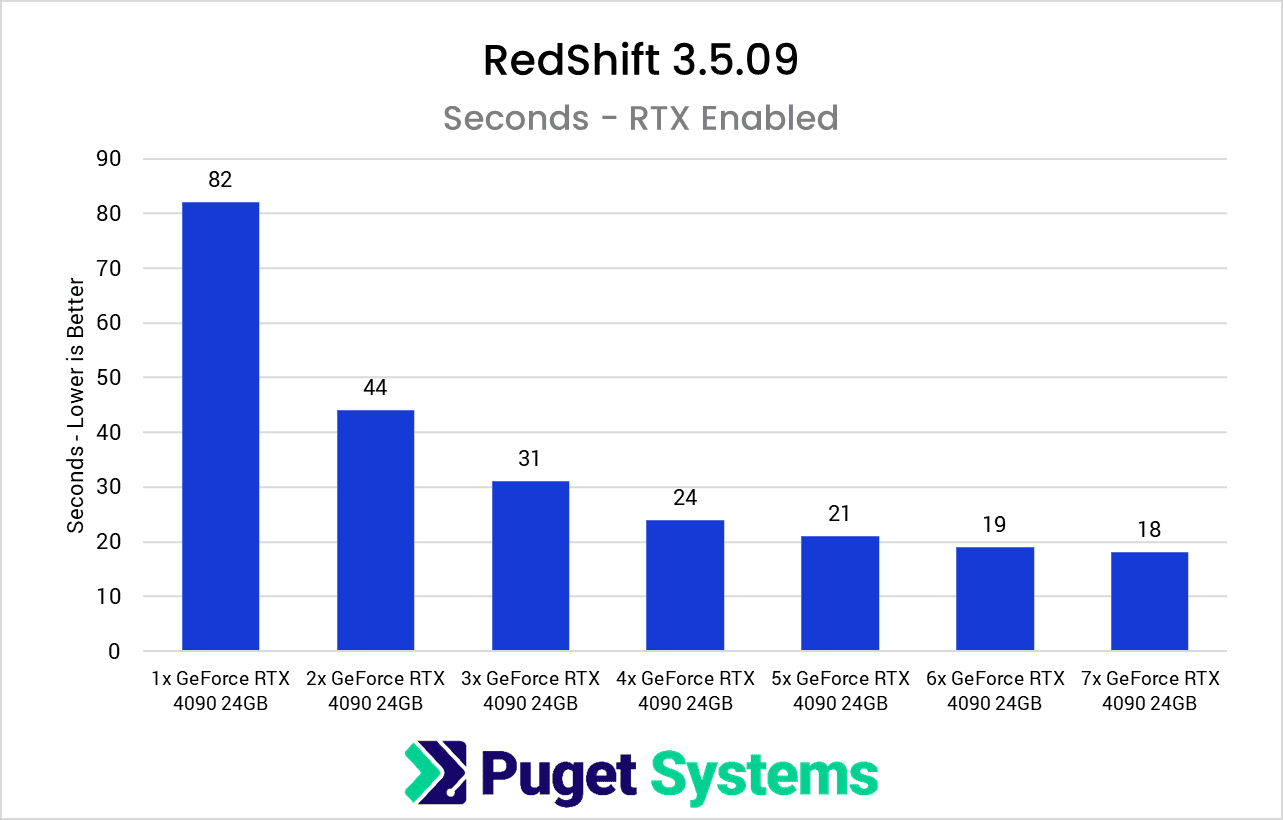

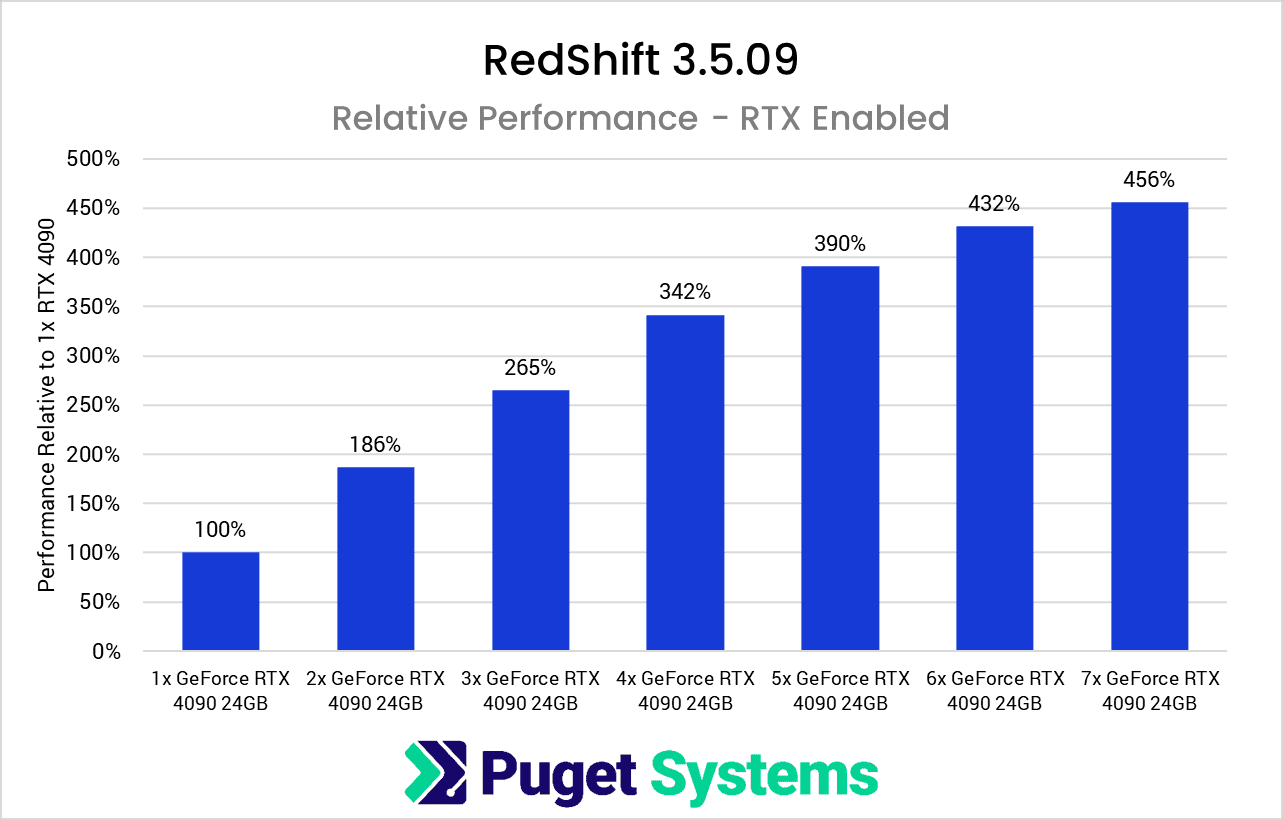

Performance: Redshift

The first GPU rendering engine we want to examine is Redshift. Redshift is different from many other rendering benchmarks in that it times how long it takes to render a single scene, rather than returning how many samples per second the system can process. The issue with this method is that as hardware gets faster and faster, the test takes less and less time to finish. This can not only impact the measurement directly (especially since Redshift only reports results in whole seconds rather than fractions), but can also make things like scene load time take up a bigger portion of the result rather than isolating the rendering portion.

Because of this, we feel that the current Redshift benchmark is a bit misleading when it comes to incredibly powerful configurations like this. The performance scaling was decent up through four RTX 4090 cards, but the benefit per GPU dropped off after that. We still ended up with a peak performance that was 4.5x higher than a single RTX 4090, but we suspect that actual Redshift users rendering large scenes would see a larger benefit in most cases than what this benchmark is currently capable of showing.
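The effect described above can be illustrated with a toy model. Every number here is hypothetical (a 10 second fixed load time and a 300 second single-GPU render were picked purely for illustration, and rounding to the nearest second is our assumption about how the result is reported); the point is only to show how a fixed overhead and whole-second reporting hold back the measured speedup even when the render itself scales perfectly.

```python
# Hypothetical values for illustration only; not measured Redshift results.
FIXED_LOAD_SECONDS = 10.0      # scene load and setup that does not scale with GPUs
SINGLE_GPU_RENDER_SECONDS = 300.0


def reported_seconds(gpu_count: int) -> int:
    """Benchmark time in whole seconds, assuming the render scales perfectly."""
    render = SINGLE_GPU_RENDER_SECONDS / gpu_count
    return round(FIXED_LOAD_SECONDS + render)  # assumed rounding behavior


def measured_speedup(gpu_count: int) -> float:
    """Speedup as the benchmark would report it, relative to one GPU."""
    return reported_seconds(1) / reported_seconds(gpu_count)


for count in range(1, 8):
    print(f"{count} GPU(s): {reported_seconds(count)} s, "
          f"measured {measured_speedup(count):.2f}x vs ideal {count}x")
```

In this toy setup the gap between measured and ideal speedup widens with every card added, which is the same pattern we would expect a short, fixed-length benchmark scene to produce.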

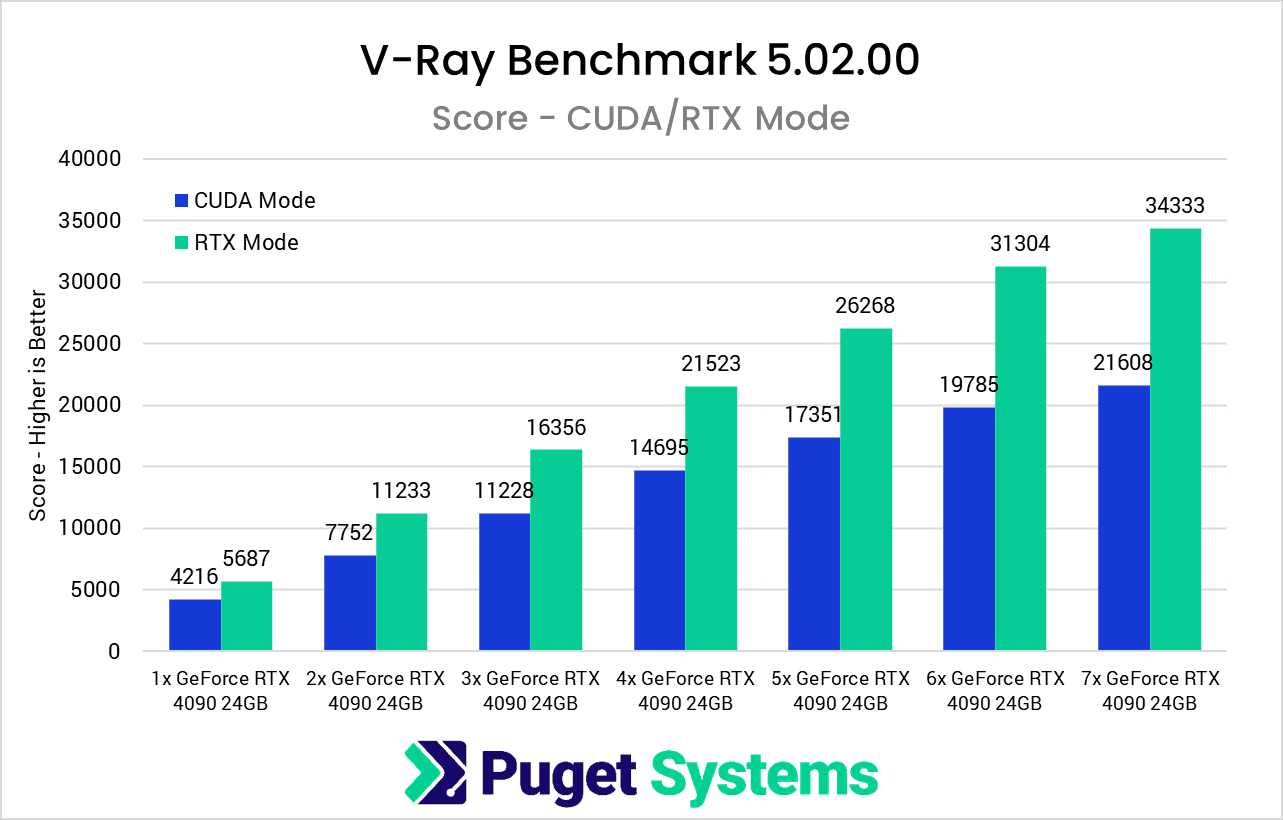

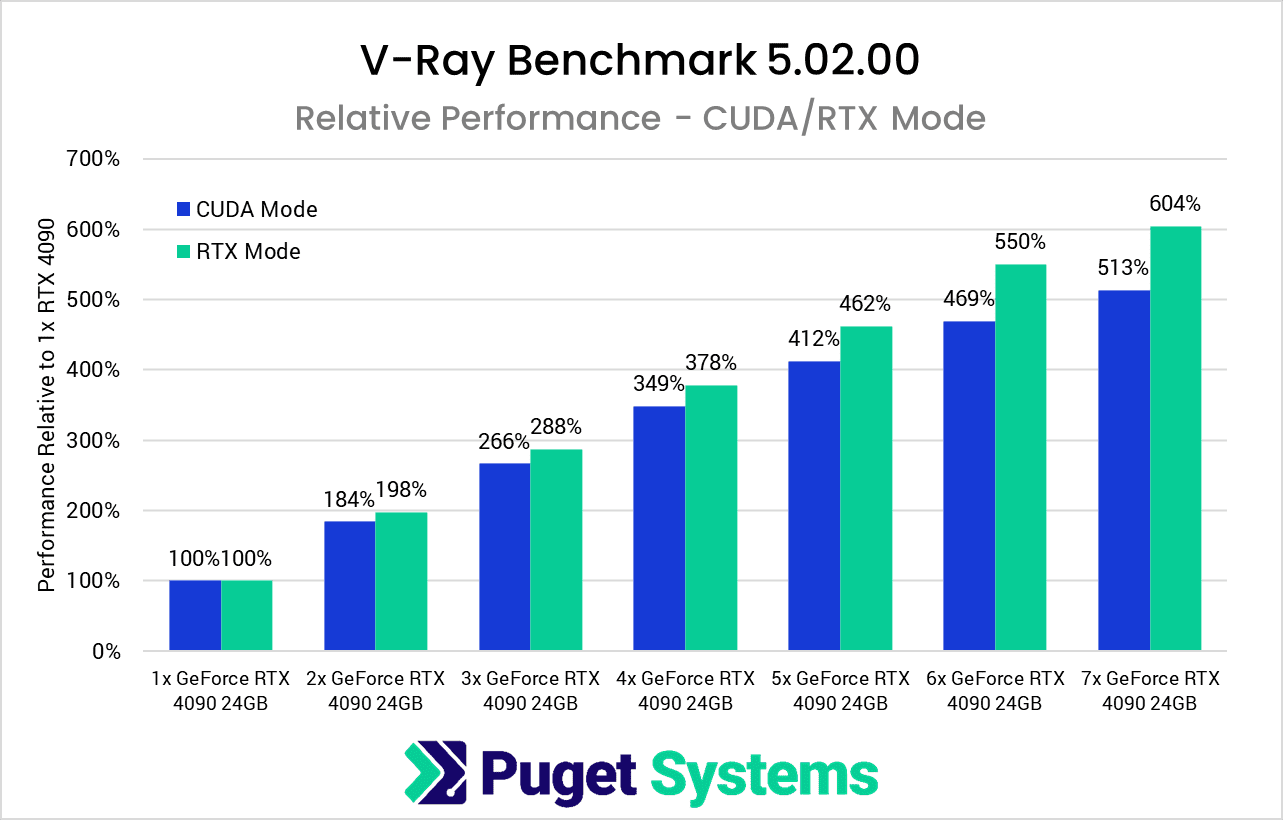

Performance: V-Ray

Next up is V-Ray, which we tested in both CUDA and RTX modes. CUDA mode shows diminishing returns once you get above four RTX 4090s, but RTX mode had terrific scaling all the way up to six cards. It dropped off a bit with the seventh card, but we were still able to end up with a maximum of about 6x faster performance with seven RTX 4090s versus a single RTX 4090.

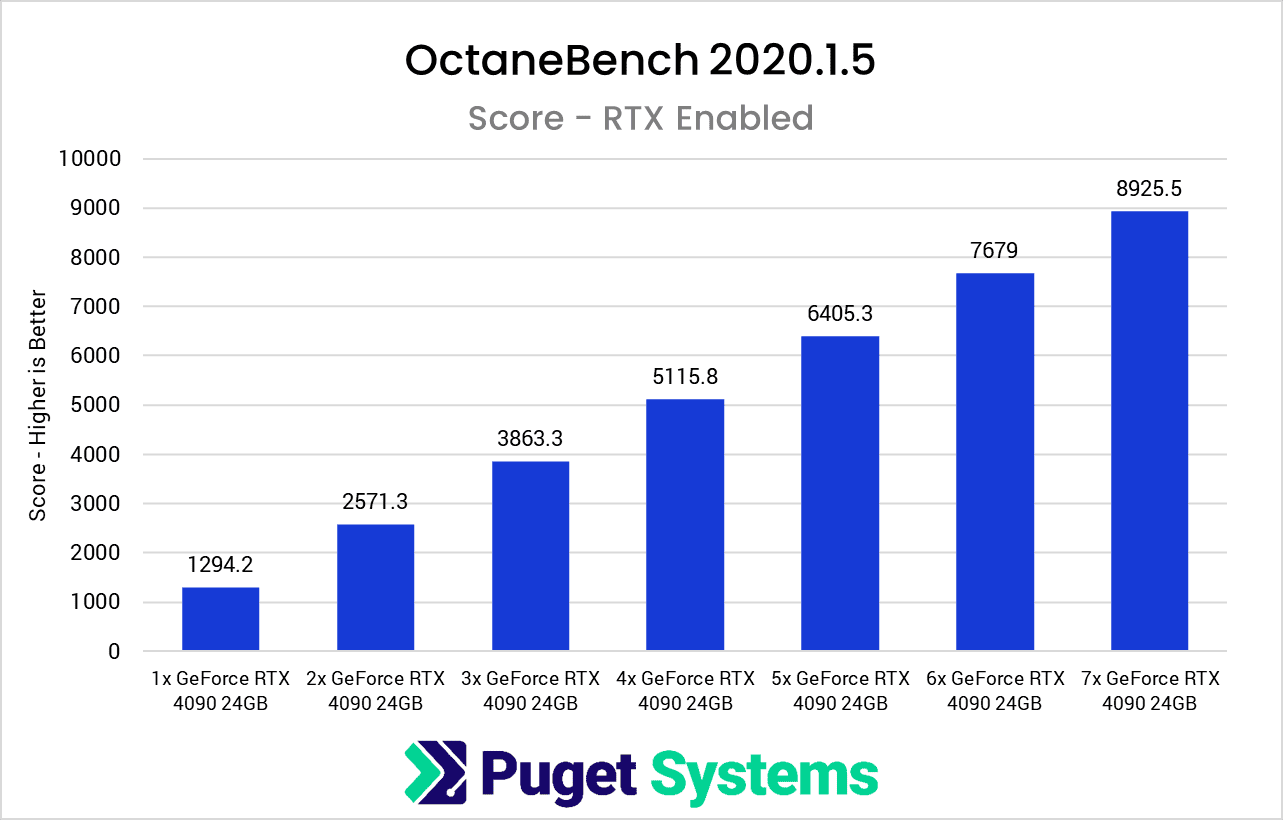

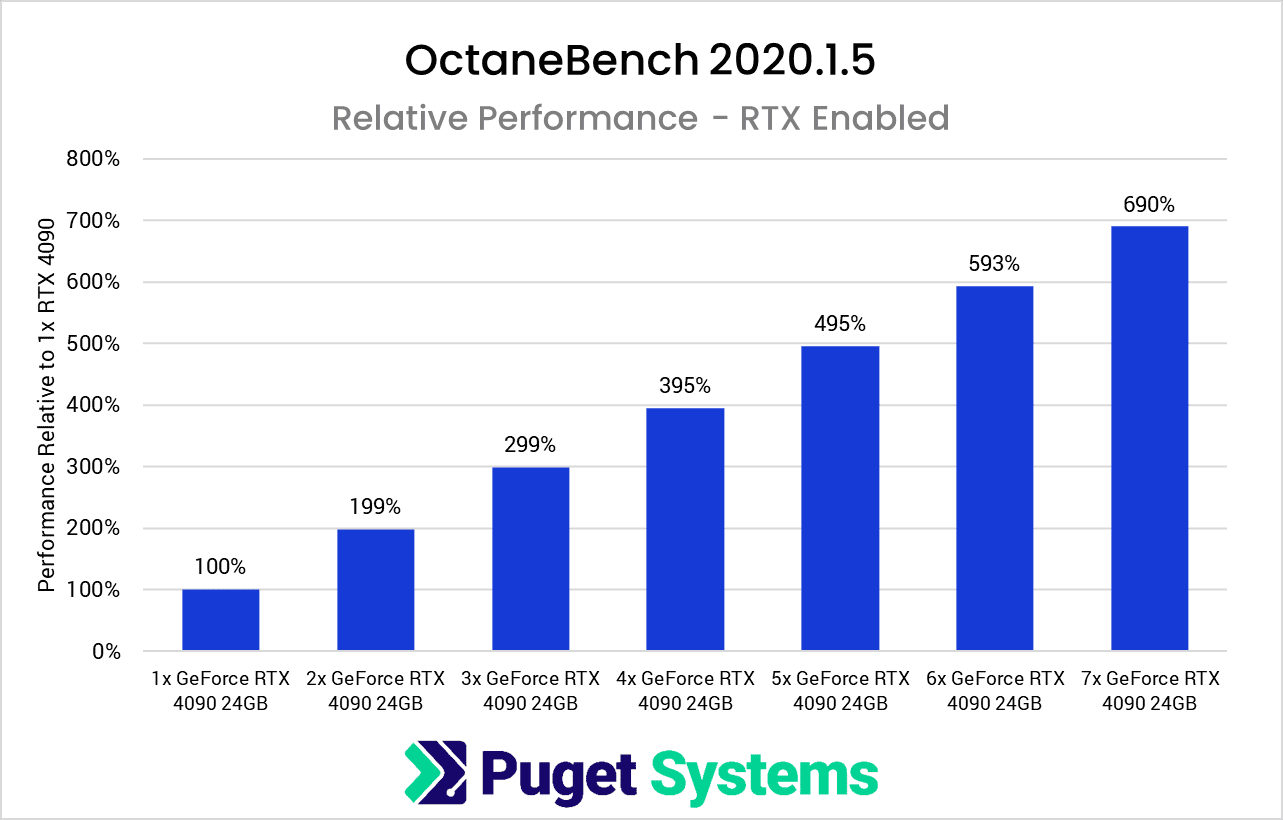

Performance: OctaneBench

Last up is OctaneBench, which typically has the best GPU scaling out of anything we currently test in our standard suite of benchmarks. We weren’t completely sure how it would hold up with this many cards, but it came through just about perfectly. For each card we added to the system, we got within a few percent of perfect scaling.

In fact, with seven RTX 4090 GPUs we ended up with about 6.9x higher performance than a single card. That is about as good as you can ask for and really shows off how well OctaneRender is able to take advantage of multi-GPU configurations.
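To compare the applications side by side, one rough measure is scaling efficiency: the peak speedup divided by the number of GPUs it took to reach it. The sketch below uses the approximate peak figures quoted in this article; we are assuming the Redshift peak was reached with all seven cards, since the text does not state the card count explicitly.

```python
# (gpu_count, speedup vs. one GPU) at each application's peak, from the text.
PEAK_RESULTS = {
    "DaVinci Resolve (GPU Effects)": (6, 3.0),
    "Redshift": (7, 4.5),  # assumption: peak reached with seven cards
    "V-Ray (RTX mode)": (7, 6.0),
    "OctaneBench": (7, 6.9),
}


def scaling_efficiency(gpu_count: int, speedup: float) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect)."""
    return speedup / gpu_count


for name, (gpus, speedup) in PEAK_RESULTS.items():
    pct = scaling_efficiency(gpus, speedup) * 100
    print(f"{name}: {speedup}x on {gpus} GPUs, {pct:.0f}% of ideal")
```

By this measure OctaneBench sits at nearly 99% of ideal, while Resolve's GPU Effects tests land at about 50%.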

Conclusion

While the overwhelming majority of users are only going to have one, maybe two, GPUs in their system, taking things to the extreme like this is a great way to see just what is possible with modern, off-the-shelf hardware. While we certainly used some non-standard things like four power supplies, PCIe extension cables, and a GPU mining rack, you could (with deep enough pockets) build this exact same rig yourself.

Now, should you actually build this? Probably not, and we have zero intention of ever selling anything like this to our customers. It was surprisingly stable, with the only real issue being we couldn’t run the RTX 4090s at PCIe Gen4 speeds, but that doesn’t make it a good idea. The better solution if you need this much GPU computing power is likely a small cluster, with only a few GPUs in each system. Sticking with GeForce will limit you to two GPUs in most cases, but with Quadro, you can easily do three, sometimes four, cards per system.

Feasibility aside, however, it is very impressive what this amount of processing power is capable of. Not every application is going to be able to scale well enough to take advantage of this many cards, but GPU rendering engines like OctaneRender in particular can see some incredible performance gains. The NVIDIA GeForce RTX 4090 is an extremely fast GPU in the first place, and when you combine this many of them, the performance (along with the power draw and heat output) goes through the roof.

For example, this exact configuration is currently number 12 in the OctaneBench database, trailing behind configurations that mostly consist of 14+ GPUs. And this is with non-overclocked GPUs; if we wanted to get into overclocking, we would probably be looking at a #11, possibly #10 spot. In fact, someone out there has already tested an 8x RTX 4090 configuration, which is sitting at the #7 spot for OctaneBench!

All in all, this was a very fun project. The build unfortunately had to be torn down almost immediately to return the GPUs to their respective departments, but it is amazing what computers are capable of when you take things to these extremes.